Four days after the Jan. 6 insurrection on Capitol Hill, a member of the “Not My President” Facebook group wrote in a post, “remember, our founding fathers were seen as terrorist [sic] and traitors.”

A fellow group member commented, “I’ll fight for what’s right, this corruption has to be stopped immediately.”


Three months later, Facebook recommended the group to at least three people, despite Facebook CEO Mark Zuckerberg’s repeated promise to permanently end political group recommendations on the social network specifically to stop amplifying divisive content.

The group was one of hundreds of political groups the company recommended to its users in The Markup’s Citizen Browser project over the past five months, several of which promoted unfounded election fraud claims in their descriptions or through posts on their pages.

Citizen Browser consists of a paid nationwide panel of Facebook users who automatically send us data from their Facebook feeds.

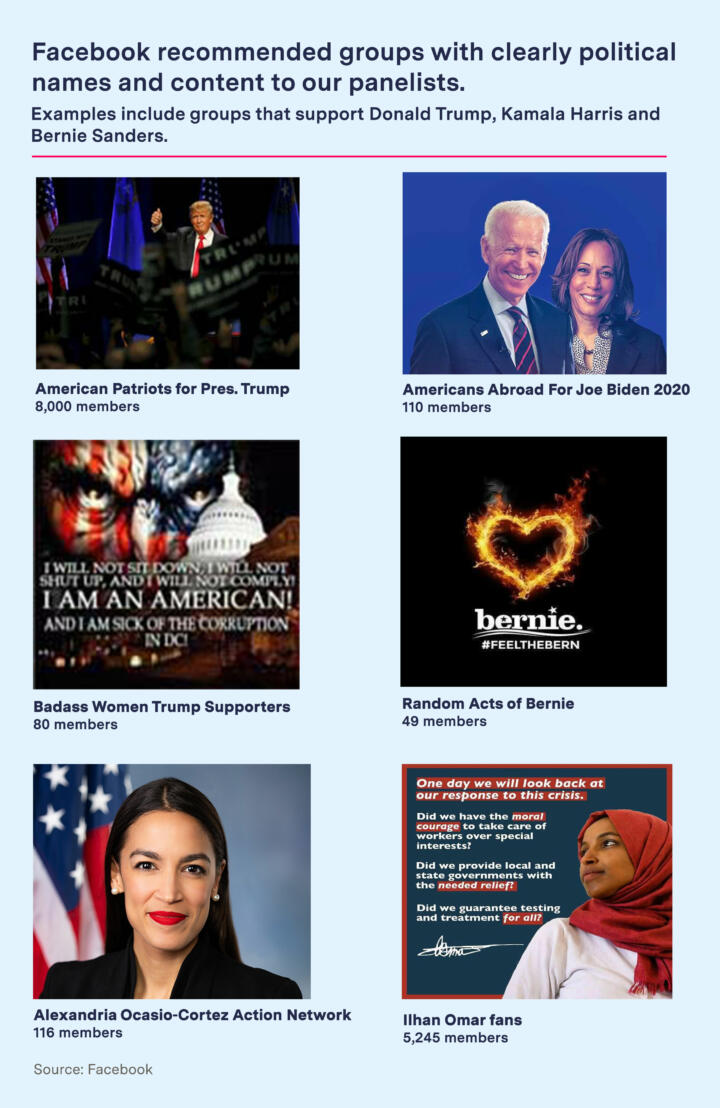

In a four-month period, from Feb. 1 to June 1, the 2,315 members of the Citizen Browser panel received hundreds of recommendations for groups that promoted political organizations (e.g., “Progressive Democrats of Nevada,” “Michigan Republicans”) or supported individual political figures (e.g., “Bernie Sanders for President 2020,” “Liberty lovers for Ted Cruz,” “Philly for Elizabeth Warren”). In total, just under one-third of all panelists received a recommendation to join at least one group in this category.

To assess which of the more than 460,000 groups recommended to our panel in this period expressed support for politicians, movements, parties, or ideologies (content that would be classed as political under Facebook’s guidelines to advertisers on the platform), we used keyword-based classification. We built a keyword list containing the names of the president, vice president, and all serving members of Congress, plus two high-profile former officeholders (Hillary Clinton and Donald Trump), and searched group names for any of these keywords. We then manually reviewed the results to remove groups related to non-political figures who share names with politicians, such as the musician Al Green.

We also counted recommendations for groups supporting local or national branches of the Republican and Democratic parties, identified by searching for the keywords “Democrat” or “Republican” and manually filtering out groups unconnected to U.S. politics, e.g., the United Kingdom Democratic Socialist Movement. (For a full list of groups and our methodology, see our data on GitHub.)
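The keyword step described above can be sketched in a few lines of Python. This is a minimal illustration, not The Markup’s published code: the keyword lists are a small hypothetical sample rather than the full list of members of Congress, the case-insensitive substring match is an assumption about how names were searched, and the hypothetical `manual_exclusions` set stands in for the human review that removed false positives such as groups about the musician Al Green.

```python
# Hypothetical sketch of keyword-based flagging of political group names.
# The keyword lists are a small illustrative sample, not The Markup's list.

POLITICIAN_KEYWORDS = [
    "Joe Biden",
    "Kamala Harris",
    "Bernie Sanders",
    "Elizabeth Warren",
    "Ted Cruz",
    "Al Green",
    "Hillary Clinton",
    "Donald Trump",
]

# "Democrat" also matches "Democrats" and "Democratic" as a substring.
PARTY_KEYWORDS = ["Democrat", "Republican"]


def find_candidates(group_names, keywords):
    """Return group names containing any keyword (case-insensitive substring match)."""
    lowered = [k.casefold() for k in keywords]
    return [
        name for name in group_names
        if any(k in name.casefold() for k in lowered)
    ]


def classify_political(group_names, manual_exclusions):
    """Flag candidate political groups, then drop the ones a reviewer rejected.

    manual_exclusions stands in for the manual review step: names judged
    unrelated to U.S. politics even though they matched a keyword.
    """
    candidates = find_candidates(group_names, POLITICIAN_KEYWORDS + PARTY_KEYWORDS)
    return [name for name in candidates if name not in manual_exclusions]


if __name__ == "__main__":
    # Hypothetical group names for illustration only.
    groups = [
        "Bernie Sanders for President 2020",
        "Liberty lovers for Ted Cruz",
        "Michigan Republicans",
        "United Kingdom Democratic Socialist Movement",
        "Al Green Fan Club",
        "Backyard Chickens of Ohio",
    ]
    excluded = {"United Kingdom Democratic Socialist Movement", "Al Green Fan Club"}
    print(classify_political(groups, excluded))
    # ['Bernie Sanders for President 2020', 'Liberty lovers for Ted Cruz', 'Michigan Republicans']
```

Plain substring matching is deliberately broad and will over-match, which is why a manual review pass follows it; it also cannot catch political groups whose names contain none of the keywords, consistent with the undercount the article describes.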

Facebook has not said how it defines a political group.

“We use automated systems to detect civic-related groups and do not recommend them to people when we detect them, and are investigating why some were recommended in the first place,” said Facebook spokesperson Kevin McAlister by email. “Over 75% of the groups the Markup identified were only recommended to one single person. And even if every group they flagged should not have been recommended, it would represent just 0.2% of the total groups recommended to ‘Citizen Browser’ panelists.”

He added that Facebook determines if a group is civic through factors including its title, description, and content.

The list of political groups flagged by The Markup almost certainly represents an undercount of the total number of political groups in the dataset, as it is based on a narrowly defined set of keywords rather than an attempt to comprehensively identify all possible political terms and phrases.

We also searched for groups with “militia” in the name and identified one recommended to our panelists that appeared to be political. The “Northern New York militia,” according to its “About” page, promotes anti-government revolutionary rhetoric to its members: “We the people are tired of slimy politicians killing our country. We need to stand up and push back. A revolution is on the way. Let’s be ready when it happens.”

The group, formed in December, is private and relatively small (57 members) but is still active, with four posts in the last month. We attempted to reach the administrator by email but did not receive a response.

It’s hardly the first time Facebook has struggled to uphold its promise, made in the run-up to the 2020 presidential election, to stop promoting divisive and potentially dangerous content. The pledge followed criticism from lawmakers, as well as the company’s own internal research finding that its group recommendations pushed people toward extremist groups.

A Markup investigation in January found that the company was still pushing partisan political groups to its users, with several of those groups promoting conspiracy theories and calls for violence against lawmakers.

Facebook blamed the mistake on technical issues in a letter to Sen. Ed Markey (D-MA), who had demanded an explanation for the broken promise.

In an earnings call on Jan. 27, Zuckerberg assured investors that this time—really—Facebook would permanently stop recommending political groups.

“I was pleased when Facebook pledged to permanently stop recommending political groups to its users, but once again, Facebook appears to have failed to keep its word,” Markey told The Markup after learning of our latest findings. “It’s clear that we cannot trust these companies to honor their promises to users and self-regulate.”

Political group recommendations have slowed among our panelists since our January investigation, though they have not, as was promised, been eliminated. In January, our reporting found that 12 of the top 100 groups recommended to our panelists were political. In our most recent data, from Feb. 1 to June 1, only one of the top 100 groups recommended to panelists was political. We assessed whether groups in the top 100 were political by looking at the group name, “About” page, and rules (if posted), as well as whether posts in the discussion feed mentioned political figures, parties, or ideologies.

The Markup also found 15 political groups recommended by Facebook to our Citizen Browser panelists that had “Joe Biden Is Not My President” as the group name, or some variation of it.

Two of the groups, “Not my President” and “Biden Is Not My President,” had previously been flagged by Facebook for containing troubling content—but that didn’t stop Facebook from suggesting the groups to our panelists.

The groups contained posts and memes claiming that Biden didn’t legitimately win the election, a conspiracy theory tied to Trump’s discredited claims about fraudulent voters and mishandled vote counting. In total, the groups were recommended to 14 panelists between March and April, with some groups recommended to multiple panelists.

“If Joe Biden gets in office by this cheating voter fraud, good bye America, good bye country because the Democratic party will destroy our country for good,” one commenter in the “Not my President” group wrote in December.

The memes in the “Biden Is Not My President” group included an image of an empty coffin with a caption claiming that the occupant had come back to life to vote for Biden. A post in the “Not my President” group showed a screen capture of the protagonists from the movie Ghostbusters captioned to suggest they were there in case “all the dead people that voted for Biden become violent.”

The group’s “About” description includes the sentence, “Let’s see how many people we can get to really show them that President Trump won the election.” Facebook recommended the group to three Citizen Browser panelists. As of June 10, the group had 255 members.

In another “Joe Biden is Not My President” group, the admin posted a photo of a rifle last December, writing, “I won’t put up with people destorying [sic] my family’s or friend’s property. I have the right to defend myself and others.”

The group admins did not respond to requests for comment.

The memes can spread disinformation, said Nina Jankowicz, a Global Fellow in the Science and Technology Innovation Program at Wilson Center for Public Policy and author of “How to Lose the Information War.”

“I’d hope people browsing their Facebook feed and seeing a dank meme recognize it’s not an authoritative source of information,” Jankowicz said. “But when you see meme after meme after meme saying dead people are voting for Biden, over time it’s that drip-drip-drip that changes your perception of reality.”

In 2016, Facebook’s researchers found that 64 percent of people who joined extremist groups were there because of the social network’s own recommendations, according to The Wall Street Journal. The Markup found several groups recommended by Facebook to Trump voters that organized travel logistics to Washington, D.C., for Jan. 6.

Gregory Miller, co-founder of the Open Source Election Technology Institute, said that during the 2020 election his organization spent a significant amount of time discussing with election administrators how to communicate the ways they were keeping the vote secure. But election officials haven’t been able to fight off the wave of misinformation flooding social media, including in Facebook groups, Miller said.

He said he has received death threats for debunking election fraud claims and knows many election administrators whose lives have been threatened.

“We know that election administrators have been flummoxed by the impact of social media, just for trying to do their jobs,” Miller said. “In our professional opinion, Facebook in its current form and conduct represents a clear and present danger to the safety of election administrators and the integrity of election administration itself.”

A survey from the Brennan Center for Justice found that 78 percent of election officials said that misinformation on social media made their jobs more difficult, while 54 percent of respondents believed it made their jobs more dangerous.

In June, an advocacy group called for Facebook to investigate whether the social network contributed to spreading election fraud claims that fueled the Jan. 6 riot in Washington, D.C.

“In a lot of cases, groups that were tangentially political led people to groups that were much more violent over time,” Jankowicz said. “Facebook unfortunately either does not have the capacity in terms of subject matter experts who can be on this all the time to update their classifiers, or perhaps—I’ve heard them say this over and over that they’re not going to be 100 percent successful all the time.”