Hello World is a weekly newsletter, delivered every Saturday morning, that goes deep into our original reporting and the questions we put to big thinkers in the field.

Hello, friends,

It’s Nabiha again. Data fuels AI. We know this—and it’s why the fights around copyright and AI are particularly fascinating right now. But it’s worth thinking about how our recent views on data and data governance are getting in the way of truly understanding AI’s impact on humanity—and what an alternative vision might look like.

Often, our data is extracted invisibly, harvested in the background while we go about our days reading, clicking on things, making purchases, and running to appointments. And so we often talk about data through the lens of individual choice and individual consent. Do I want that disclosed? Do I click “accept all cookies”? Do I care?

AI raises the stakes because now that data is used not only to make decisions about you, but also to make deeply powerful inferences about people and communities. That data is training models that can be deployed and mobilized through automated systems that affect our fundamental rights and determine whether you get a mortgage or a job interview, or even how much you’re paid. Thinking individually is only part of the equation now; you really need to think in terms of collective harm. Do I want to give up this data and have it be used to make decisions about people like me: a woman, a mother, a person with particular political beliefs?

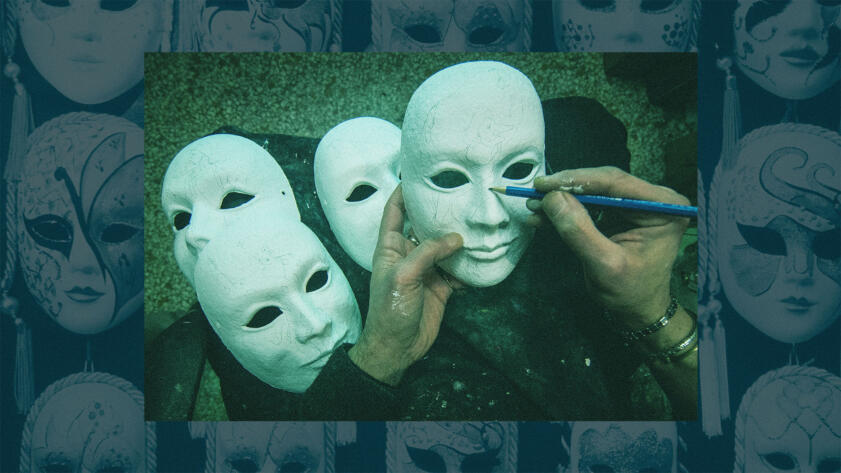

Dr. Joy Buolamwini is someone who has been thinking about collective harm and AI for years, and that’s why I so appreciate her work as a fellow traveler when it comes to algorithmic accountability and justice. Her new book, “Unmasking AI: My Mission to Protect What Is Human in a World of Machines,” is a must-read exploration of how broad swaths of humanity are vulnerable in a world that is rapidly adopting AI tools. We, like Buolamwini, are optimists: We can demand a better path than the one we’re on, but that requires us to think collectively, participate, and innovate in a different way than we have in the past.

Keep reading to learn more about “the excoded,” facial recognition at the airport, and whether we are “living in the age of the last masters.”

(This interview has been edited for brevity and clarity.)

Nabiha Syed: I’ve taken eight flights in the last two weeks, and each and every one has asked me to scan my face as I board. Opting out was, um, chaotic. How did we get here, and what’s going on?

Dr. Joy Buolamwini: We continue to see increased adoption of facial recognition technology by government agencies despite ample evidence of discrimination and privacy risks, often under the logic of efficiency and customer service. One of the reasons I continue to resist biometric surveillance technologies is because of how easily face data can be incorporated into weapons of policing, weapons of war. A few months ago, I came across a video of a quadruped robot with a gun mounted to its back. These systems can be equipped with facial recognition and other biometric technologies, raising grave questions. What happens when civilians are confused for combatants? Which civilians are more likely to be labeled suspicious? What new class of war crimes is introduced with digital dehumanization? Will police brutality be augmented by robot brutality? History rhymes. It is not lost on me the ways in which dogs were used to brutalize enslaved individuals. The quadrupeds I see recall police dogs being used on protesters fighting for their dignity. Even if there wasn’t any racial, gender, or age bias, these systems by construction are extremely dangerous. The known propensity for facial recognition misidentification by law enforcement only heightens the risks.

When I see the rollout of facial recognition at airports and increased integration into government services, the alarm bells sound, because as a researcher I think about the trajectory of this moving puck. Yes, these are vastly different use cases, but the more people are exposed to facial recognition, the more government officials attempt to weave a narrative that people are used to (and thus accepting of) these systems.

Normalizing biometric-identity systems marches us closer to state surveillance that would be the dream of authoritarian regimes. If you are flying this summer, I highly encourage you to opt out of face scans, not just because they are discriminatory and invasive, but also because opting out sends a message that we value our biometric rights and our biometric data.

Syed: The only upside of my airport adventures is that I get a “teachable moment” about facial recognition technology. Protests are a similar opportunity. But it underscores how much awareness of surveillance tech has evolved since your landmark study, Gender Shades. What ground have we gained, and what’s the next frontier?

Buolamwini: As I write about in my book “Unmasking AI: My Mission to Protect What Is Human in a World of Machines,” since the Gender Shades research I published with Dr. Timnit Gebru was released and subsequently the Actionable Auditing paper co-authored with Deb Raji, we have seen changes in industry practice. All of the U.S. companies we audited have stepped back from selling facial recognition to law enforcement in varying capacities. We have seen legislative changes with over half a dozen cities adopting restrictions on police use of facial recognition. Such progress is commendable, because without these safeguards we will have the kind of nightmares we continue to see played out by the Detroit Police Department. The nightmares of AI discrimination and exploitation are the lived reality of those I call the excoded—people like Porcha Woodruff.

AI-powered facial recognition misidentification led to her false arrest. She was eight months pregnant, sitting in a holding cell and having contractions. She is not alone in facing algorithms of discrimination. Three years before Porcha’s arrest, Robert Williams was falsely arrested in front of his two young daughters by the same Detroit Police Department. Despite ample evidence of racial bias in facial recognition technologies, we still live in a world where preventable AI discrimination is allowed. We must stop this irresponsible use of AI and make sure no one suffers the nightmares experienced by Porcha, Robert, and their families.

The excoded include even more people falsely arrested due to AI: Michael Oliver, Nijeer Parks, Randal Reid, and others whose names may never make headlines, but whose lives matter all the same. Keep in mind no one is immune from AI harm. We can all be excoded. AI-powered facial recognition is entering more areas of our lives from employment to healthcare and even transportation. The Transportation Security Administration plans to make face scans the default way to verify your ID to travel in over 400 airports in the United States, potentially leaving you at risk for facial recognition failure and laying down the infrastructure for algorithms of surveillance.

What has changed since Gender Shades came out is that generative AI systems allow further exploitation of our biometric data that goes beyond detecting, classifying, and recognizing faces. Generative AI systems that allow for biometric clones can easily exploit our likeness through the creation of synthetic media that propagate deepfakes. We need biometric rights that protect our faces and voices from algorithms of exploitation. I stand with artists and writers who are protesting against the algorithmic exploitation of their creative work. Companies have received billions of dollars of investment while using copyrighted work taken without permission or compensation. This is not fair. The excoded include those in endangered professions. Your profession could be next. When I think about the next frontier, I think about the exploitation of our biometrics, and I also think about the apprentice gap. What happens when entry-level jobs are automated away? Even celebrity cannot shield you from being excoded, as Tom Hanks discovered when his digital twin made with generative AI was used to promote a service he did not endorse.

Let me take a tangent for a second that reconnects. Earlier this year I was featured in Rolling Stone along with other badass women who have been learning about the dangers of AI for years. During the photoshoot, I was wearing a yellow dress which served as inspiration for me to buy a yellow electric guitar. When I started playing the guitar again, I realized I still had my callouses in places. I could thank my younger self for putting in the work years earlier.

How will people build professional callouses if the early work that may be viewed as mundane essentials is taken over by AI systems? Do we risk living in the age of the last masters, the age of the last experts?

Syed: So many in industry, including VCs and startups, are calling for equitable AI or responsible innovation. I know you’ve been engaging directly with tech companies, including a recent conversation with former OpenAI CEO Sam Altman. What can companies do today to mitigate harm?

Buolamwini: As I shared with Sam, companies claiming to be responsible must contend squarely with creative rights. As a new member of the Authors Guild and the National Association of Voice Actors having just recorded my first audiobook, I am even more attuned to creative rights. We can address algorithms of exploitation by establishing creative rights that uphold the four C’s: consent, compensation, control, and credit. Artists should be paid fairly for their valuable content and control whether or how their work is used from the beginning, not as an afterthought. Too often the data collection and classification processes behind AI systems are unethical. VCs and startups should adopt “know your data” policies that examine the provenance of the data that could potentially power their AI systems. What would it look like to build ethical data pipelines? What mechanisms could be put in place to have fair trade data? When we think about the environmental cost of training AI systems, what alternatives would put us on a path to green AI?

VCs and startups that use a vendor or build on top of existing AI models must be aware of context collapse, which occurs when a system developed for one use case is ported over to another. In the book I write about a Canadian startup that wanted to use voice recognition technology to detect early signs of Alzheimer’s. The intentions were good. They trained their system with Canadians who spoke English as a first language. When they tested the system on Canadians who spoke French as their first language, some of these French-first speakers were misclassified as having Alzheimer’s.

At the Algorithmic Justice League, we have started building a reporting platform that serves as an early warning system of emerging AI harms. The process has underscored the importance of making sure there are mechanisms for people to surface issues with AI systems once they have been deployed. Having these types of feedback mechanisms in place can help companies continuously monitor their AI systems for known and unknown harms.

Syed: And of course, we can’t leave out the rapidly changing regulatory environment. Between the U.K. AI Safety Summit, the U.S. Executive Order, and the EU “trilogue” discussion on AI regulation, there are a lot of moving parts. Where do you think governments are getting it right, and what are they missing?

Buolamwini: I commend the EU AI Act for setting out explicit redlines, including the provision that live facial recognition cannot be used in public spaces. I remember a time when I would mention AI bias or algorithmic discrimination and eyes would glaze over. For AI governance to be at the top of federal and international priorities the way we see today is a far cry from 2015, when I had my experience of coding in a white mask to have my dark-skinned face detected, which is what started the work that led to the creation of the Algorithmic Justice League. It is encouraging to see the EO build on the values of the Blueprint for an AI Bill of Rights, including having protection from algorithmic discrimination. That being said, the EO most impacts government agencies and areas where there are federal funding dollars that create incentives to follow the EO. We ultimately need legislation that goes beyond voluntary company commitments. What I see missing from most conversations around AI governance is the need for redress. Try as we might to prevent AI harms, what happens when something goes wrong, and what about those who have already been excoded?

Syed: Something that keeps me up at night is “automation bias”—the idea that humans favor suggestions from automated decision-making systems, ignoring contradictory information made without automation, even if it is correct. A subcategory of this is what I call the Homer Simpson problem: Just because you have a human in the loop doesn’t mean they’re paying attention or care. I worry that blunting human engagement and human oversight will be devastating for our dignity. What keeps you up at night?

Buolamwini: Yes, humans in the last-minute loop are tokenistic at best. What keeps me up at night is thinking about the excoded and the ways in which we can be excoded. Those who never have a real chance at job or educational opportunities because AI systems unfairly screen them out. Those who do not receive vital organs or adequate insurance because of algorithmic discrimination in healthcare AI tools and those who face medical apartheid turbocharged by AI. I worry about the ways in which AI systems can kill us slowly, building on the notion of structural violence. AI systems that deny tenants housing or relegate them to properties that are in more polluted areas or embedded in food deserts keep me up at night. Your quality of life and life chances lessened due to a series of algorithmic decisions that reinforce structural inequalities under the guise of machine neutrality. The quadrupeds with guns haunt my sleep.

Syed: Of course, the opportunity of this moment is that nothing is inevitable if we fight for better. How do we get to a world with algorithmic justice?

Buolamwini: I truly believe if you have a face, you have a place in the conversation about AI and the technologies that shape our lives. We get to a world of algorithmic justice, where data does not destine you to discrimination and where your hue is not a cue to dismiss your humanity, by telling our stories. The stories of the Porchas and the Roberts. We hear so many stories from everyday people via report.ajl.org. We get to algorithmic justice by exercising our right to refusal, which can mean saying no to face scans at airports. We continue to ask questions that go beyond hype or doom and ask if the AI systems being adopted in our schools, workplaces, and hospitals actually deliver what they promise. We get to algorithmic justice by pushing for laws that protect our humanity, our creative rights, our biometric rights, and our civil rights.

I’m thankful to folks like Dr. Joy and countless others who have been doing the work to make sure our algorithmic systems serve all of us, not some of us.

Thanks for reading!

Nabiha Syed

Chief Executive Officer

The Markup

P.S. Hello World is taking a break next week for the Thanksgiving holiday. We’ll be back in your inboxes on Saturday, Dec. 2.