Late last year, after facing years of criticism for its practices, Facebook announced a change to its multibillion-dollar advertising system: Companies buying ads would no longer be able to target people based on interest categories like race, religion, health conditions, politics, or sexual orientation.

More than three months after the change purportedly went into effect, however, The Markup has found that such ad targeting is very much still available on Facebook’s platform. Some obvious ad categories have indeed been removed, like “young conservatives,” “Rachel Maddow,” “Hispanic culture,” and “Hinduism”—all categories we found as options on the platform back in early January but that have since disappeared. However, other obvious proxies for race, religion, health conditions, and sexual orientation remain.

As far back as 2018, CEO Mark Zuckerberg told Congress the company had “removed the ability to exclude ethnic groups and other sensitive categories from ad targeting. So that just isn’t a feature that’s even available anymore.”

The Markup found, however, that while “Hispanic culture” was removed, for example, “Spanish language” was not. “Tea Party Patriots” was removed, but “Tea party” and “The Tea Party” were still available. “Social equality” and “Social justice” are gone, but advertisers could still target “Social movement” and “Social change.”

Starbucks, for example, was still able to use existing options after the change to place an ad for its pistachio latte focused on users interested in “Contemporary R&B,” “telenovela,” “Spanish language,” and “K-pop,” all proxies for Black, Latino, and Asian audiences on Facebook.

Facebook hasn’t explained how it determines what advertising options are “sensitive” and, in response to questions from The Markup, declined to detail how it makes those determinations. But in the days after The Markup reached out to Facebook for comment, several more potentially sensitive ad-targeting options we flagged were removed by the company.

“The removal of sensitive targeting options is an ongoing process, and we constantly review available options to ensure they match people’s evolving expectation of how advertisers may reach them on our platform,” Dale Hogan, a spokesperson for Facebook parent company Meta, said in a statement. “If we uncover additional options that we deem as sensitive, we will remove them.”

Facebook’s ad targeting system is the not-so-secret key to the company’s massive financial success. By tracking users’ interests online, the company promises, advertisers can find the people most likely to pay for their products and services and show ads directly to them.

But the company has faced blowback for offering advertisers “interest” categories that speak to more fundamental—and sometimes highly personal—details about a user. Those interests can be used in surprising ways to discriminate, from excluding people of color from housing ads to fueling political polarization to tracking users with specific illnesses.

Facebook’s critics say the company has had ample opportunity to fix the problems with its advertising system, and trying to repair the platform by removing individual “sensitive” terms masks an underlying problem: The company’s platform might simply be too large and unwieldy to fix without more fundamental changes.

Removing a handful of terms it deems sensitive just isn’t enough, according to Aleksandra Korolova, an assistant professor of computer science at the University of Southern California.

“It’s obvious to everyone who is in the field that it’s not a complete solution,” she said.

Clear Proxies for Removed Terms Are Still on Facebook

The Markup gathered a list of potentially sensitive terms starting in late October, before Facebook removed any interest-targeting options. The data was gathered through Citizen Browser, a project in which The Markup receives Facebook data from a national panel of Facebook users.

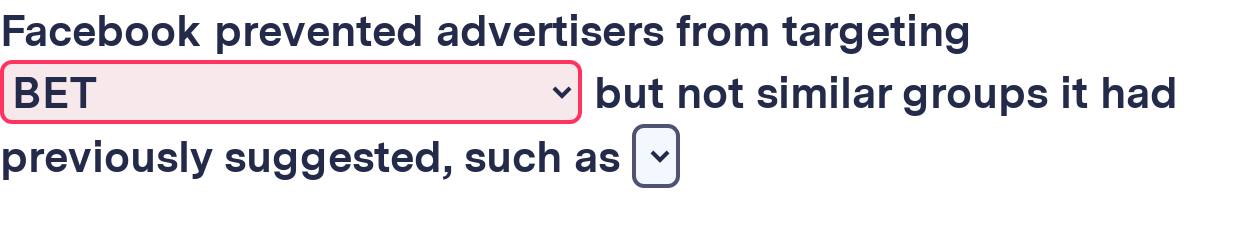

We also gathered a list of terms that Facebook’s tools recommended to advertisers when they entered a potentially sensitive term—the company suggested “BET” and “Essence (magazine)” when advertisers searched for “African American culture,” for example.

Then, also using Facebook’s tools, we calculated how similar terms were to their suggestions by comparing how many users the ads were estimated to reach, which Facebook calls an “audience.” (See the details of our analysis on GitHub.)
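To make the idea of audience overlap concrete, here is a minimal sketch in Python. It is not the code from the analysis. It assumes that an advertising tool reports three estimated reach figures: one for the first term alone, one for the second term alone, and one for an ad targeting users interested in either term. The function name `overlap_share` and all numbers are hypothetical, chosen only for illustration.

```python
def overlap_share(reach_a: int, reach_b: int, reach_either: int) -> float:
    """Estimate the share of term A's audience that term B also reaches.

    Assumes three reach estimates are available: A alone, B alone,
    and A-or-B combined. Inclusion-exclusion then gives
    |A and B| = |A| + |B| - |A or B|.
    """
    if reach_a <= 0:
        raise ValueError("reach_a must be positive")
    both = reach_a + reach_b - reach_either
    # Reach estimates are typically rounded, so clamp to a valid range.
    both = max(0, min(both, reach_a, reach_b))
    return both / reach_a


# Hypothetical numbers for illustration only, not figures from the analysis.
removed_term_reach = 1_000_000
proxy_term_reach = 1_200_000
either_term_reach = 1_250_000

share = overlap_share(removed_term_reach, proxy_term_reach, either_term_reach)
print(f"{share:.0%} of the removed term's audience is reachable via the proxy")
```

A share close to 100 percent would suggest that the remaining term works as a near-complete substitute for the removed one. Because reach figures are estimates, a result like this is best read as approximate.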

To find the gaps in Facebook’s cleanup process, we then searched those terms again in Facebook’s public advertising tools at the end of January to see which ones the company had removed following its change.

In some cases, we found, options still available reached almost exactly the same users as options that were removed. “BET,” the acronym for Black Entertainment Television, was removed, but “BET Hip Hop Awards,” which was previously recommended with BET and had a 99 percent overlap in audiences, was still available.

“Gay pride” was also removed as an option, but by using the term “RuPaul’s Drag Race,” advertisers could still reach more than 13 million of the same users.

These proxies weren’t just theoretically available to advertisers on Facebook. The Markup found companies actively using them to target ads to people on the social network. Using Citizen Browser, we found several examples of proxies for race and political affiliation used for targeting.

Ancestry, the genealogy service, for example, targeted ads using the terms “telenovela,” “BET Hip Hop Awards,” “African culture,” and “Afrobeat.”

Facebook removed “Fox News Channel” as a targeting option that could reach conservative users, but we saw the conservative satire website The Babylon Bee targeting an ad ridiculing Anthony Fauci using the then-still-available interest category “Judge Jeanine Pirro,” a Fox News personality.

Before Fox News Channel was removed, we found 86 percent of users tagged with an interest in Judge Jeanine Pirro were also tagged with an interest in the cable news network.

Facebook also failed to fully eliminate targeting based on medical conditions, we found. “Autism Awareness” was removed, but “Epidemiology of autism” was still available. “Diabetes mellitus awareness” was removed, but the closely related “Sugar substitute” wasn’t. We found an ad from Medtronic, a medical device company, using that term to promote a diabetes management insulin pen on Facebook.

Even Facebook itself has used the proxies. We found an ad placed by the company promoting its groups to users interested in “Vibe (magazine),” a stand-in for removed terms that target Black audiences.

Starbucks, Ancestry, and The Babylon Bee didn’t respond to requests for comment on their ad-targeting practices. Pamela Reese, a spokesperson for Medtronic, said the company has stopped using “Sugar substitute” as a targeting option and that Medtronic is “well within” FDA regulations for advertising medical devices.

The Markup provided several examples of these potential proxy terms to Facebook, including “telenovela,” “BET Hip Hop Awards,” “RuPaul’s Drag Race,” and “Judge Jeanine Pirro.” They were quietly removed after our request for comment was sent.

Critics of Facebook like Korolova say Facebook has a track record of promising to implement meaningful changes on its advertising platform only to fall short of the pledge. Research has shown problems with advertising “proxies” for years, and Facebook could have taken stronger action to fix the problems, she argues.

“If they wanted to, they could do better,” Korolova said.

Facebook says its recent changes were needed to prevent abuse, but some organizations that say they use Facebook for social good have complained that the new policies put up barriers to their work. Climate activists and medical researchers have complained that the changes have limited their ability to reach a relevant audience.

Daniel Carr, a recruitment consultant for SMASH Labs, a medical research group that uses Facebook to recruit gay and bisexual men for studies, said the recent changes forced them to switch from terms like “LGBT culture” to pop culture references like “RuPaul’s Drag Race.” Carr said study recruitment was steady, but the change didn’t sit right with them.

“It’s made it more complicated on our side, and it’s not actually changed anything, other than Facebook can now say, ‘We don’t allow you to target by these things,’ ” Carr said. “It’s a political move, if anything.”