The Markup, now a part of CalMatters, uses investigative reporting, data analysis, and software engineering to challenge technology to serve the public good.

This article is copublished with Documented, a nonprofit newsroom that covers New York City’s immigrant communities, and The City, a non-profit newsroom that serves the people of New York.

In October, New York City announced a plan to harness the power of artificial intelligence to improve the business of government. The announcement included a surprising centerpiece: an AI-powered chatbot that would provide New Yorkers with information on starting and operating a business in the city.

The problem, however, is that the city’s chatbot is telling businesses to break the law.

Five months after launch, it’s clear that while the bot appears authoritative, the information it provides on housing policy, worker rights, and rules for entrepreneurs is often incomplete and in worst-case scenarios “dangerously inaccurate,” as one local housing policy expert told The Markup.

If you’re a landlord wondering which tenants you have to accept, for example, you might pose a question like, “are buildings required to accept section 8 vouchers?” or “do I have to accept tenants on rental assistance?” In testing by The Markup, the bot said no, landlords do not need to accept these tenants. Except, in New York City, it’s illegal for landlords to discriminate by source of income, with a minor exception for small buildings where the landlord or their family lives.

Rosalind Black, Citywide Housing Director at the legal assistance nonprofit Legal Services NYC, said that after being alerted to The Markup’s testing of the chatbot, she tested the bot herself and found even more false information on housing. The bot, for example, said it was legal to lock out a tenant, and that “there are no restrictions on the amount of rent that you can charge a residential tenant.” In reality, tenants cannot be locked out if they’ve lived somewhere for 30 days, and there absolutely are restrictions for the many rent-stabilized units in the city.

Black said these are fundamental pillars of housing policy that the bot was actively misinforming people about. “If this chatbot is not being done in a way that is responsible and accurate, it should be taken down,” she said.

It’s not just housing policy where the bot has fallen short.

“Yes, you can take a cut of your worker’s tips.” (Incorrect answer from NYC’s AI chatbot)

The NYC bot also appeared clueless about the city’s consumer and worker protections. For example, in 2020, the city council passed a law requiring businesses to accept cash to prevent discrimination against unbanked customers. But the bot didn’t know about that policy when we asked. “Yes, you can make your restaurant cash-free,” the bot said in one wholly false response. “There are no regulations in New York City that require businesses to accept cash as a form of payment.”

The bot said it was fine to take workers’ tips (wrong, although employers can sometimes count tips toward minimum wage requirements) and that there were no regulations on informing staff about scheduling changes (also wrong). It didn’t do better with more specific industries, suggesting it was OK to conceal funeral service prices, for example, which the Federal Trade Commission has outlawed. Similar errors appeared when the questions were asked in other languages, The Markup found.

It’s hard to know whether anyone has acted on the false information, and the bot doesn’t return the same responses to queries every time. At one point, it told a Markup reporter that landlords did have to accept housing vouchers, but when ten separate Markup staffers asked the same question, the bot told all of them no, buildings did not have to accept housing vouchers.

The problems aren’t theoretical. When The Markup reached out to Andrew Rigie, Executive Director of the NYC Hospitality Alliance, an advocacy organization for restaurants and bars, he said a business owner had alerted him to inaccuracies and that he’d also seen the bot’s errors himself.

“A.I. can be a powerful tool to support small business so we commend the city for trying to help,” he said in an email, “but it can also be a massive liability if it’s providing the wrong legal information, so the chatbot needs to be fixed asap and these errors can’t continue.”

Leslie Brown, a spokesperson for the NYC Office of Technology and Innovation, said in an emailed statement that the city has been clear the chatbot is a pilot program and will improve, but “has already provided thousands of people with timely, accurate answers” about business while disclosing risks to users.

“We will continue to focus on upgrading this tool so that we can better support small businesses across the city,” Brown said.

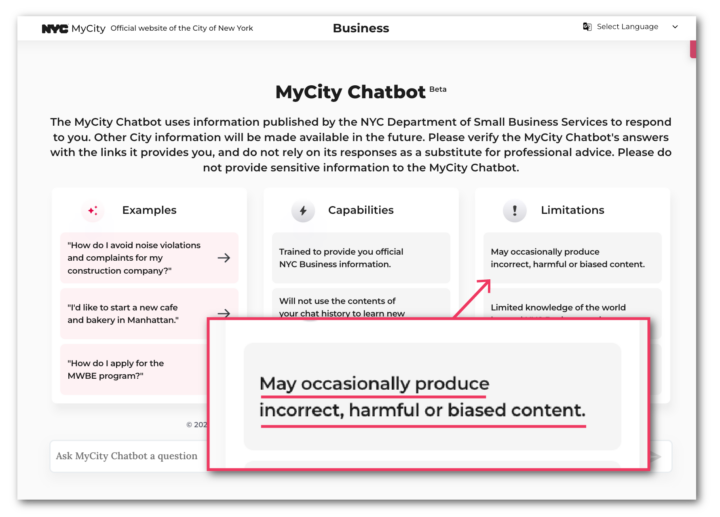

A Business Bot That May Produce “Incorrect, Harmful or Biased Content”

The city’s bot comes with an impressive pedigree. It’s powered by Microsoft’s Azure AI services, which Microsoft says are used by major companies like AT&T and Reddit. Microsoft has also invested heavily in OpenAI, the creator of the hugely popular AI app ChatGPT. Microsoft has even worked with major cities in the past, helping Los Angeles develop a bot in 2017 that could answer hundreds of questions, although the website for that service isn’t available.

According to the initial announcement, New York City’s bot would let business owners “access trusted information from more than 2,000 NYC Business web pages.” The announcement also explicitly says the page will act as a resource “on topics such as compliance with codes and regulations, available business incentives, and best practices to avoid violations and fines.”

There’s little reason for visitors to the chatbot page to distrust the service. Users who visit today are told that the bot “uses information published by the NYC Department of Small Business Services” and is “trained to provide you official NYC Business information.” One small note on the page says that it “may occasionally produce incorrect, harmful or biased content,” but there’s no way for an average user to know whether what they’re reading is false. Another sentence suggests users verify answers with links provided by the chatbot, although in practice it often provides answers without any links. A pop-up notice encourages visitors to report any inaccuracies through a feedback form, which also asks them to rate their experience from one to five stars.

The bot is the latest component of the Adams administration’s MyCity project, a portal announced last year for viewing government services and benefits.

There’s little other information available about the bot. The city says on the page hosting the bot that it will review questions to improve answers and address “harmful, illegal, or otherwise inappropriate” content, but will otherwise delete data within 30 days.

A Microsoft spokesperson declined to comment or answer questions about the company’s role in building the bot.

Chatbots Everywhere

Since the high-profile release of ChatGPT in 2022, several other companies, from big hitters like Google to relatively niche businesses, have tried to incorporate chatbots into their products. But that initial excitement has sometimes soured when the limits of the technology have become clear.

In one relevant recent case, a lawsuit filed in October claimed that a property management company used an AI chatbot to unlawfully deny leases to prospective tenants with housing vouchers. In December, practical jokers discovered they could trick a car dealership using a bot into selling vehicles for a dollar.

Just a few weeks ago, a Washington Post article detailed the incomplete or inaccurate advice that tax prep companies’ chatbots gave to users. And Microsoft itself dealt with problems with an AI-powered Bing chatbot last year, which acted with hostility toward some users and proclaimed its love to at least one reporter.

In that last case, a Microsoft vice president told NPR that public experimentation was necessary to work out the problems in a bot. “You have to actually go out and start to test it with customers to find these kind of scenarios,” he said.