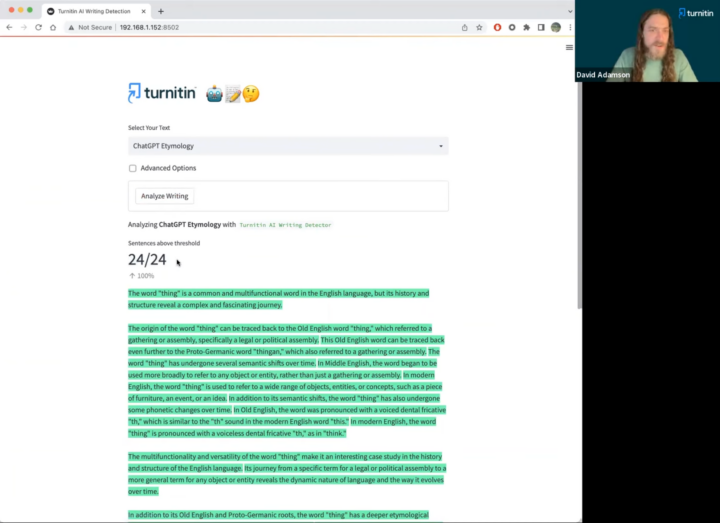

Taylor Hahn, who teaches at Johns Hopkins University, got an alert while grading a student paper this past spring for a communications course. He had uploaded the assignment to Turnitin, software used by over 16,000 academic institutions across the globe to spot plagiarized text and, since April, to flag AI-generated writing.

Turnitin labeled more than 90 percent of the student’s paper as AI-generated. Hahn set up a Zoom meeting with the student and explained the finding, asking to see notes and other materials used to write the paper.

“This student, immediately, without prior notice that this was an AI concern, they showed me drafts, PDFs with highlighter over them,” Hahn said. He was convinced Turnitin’s tool had made a mistake.

In another case, Hahn worked directly with a student on an outline and drafts of a paper, only to have the majority of the submitted paper flagged by Turnitin as AI-generated.

Over the course of the spring semester, Hahn noticed a pattern of these false positives. Turnitin’s tool was much more likely to flag international students’ writing as AI-generated.

As Hahn started to see this trend, a group of Stanford computer scientists designed an experiment to better understand the reliability of AI detectors on writing by non-native English speakers. They published a paper last month, finding a clear bias. While they didn’t run their experiment with Turnitin, they found that seven other AI detectors flagged writing by non-native speakers as AI-generated 61 percent of the time. On about 20 percent of papers, that incorrect assessment was unanimous. Meanwhile, the detectors almost never made such mistakes when assessing the writing of native English speakers.

AI detectors tend to be programmed to flag writing as AI-generated when the word choice is predictable and the sentences are simpler. As it turns out, writing by non-native English speakers often fits this pattern, and therein lies the problem.

People typically have bigger vocabularies and a better grasp of complex grammar in their first languages. This means non-native English speakers tend to write more simply in English. So does ChatGPT. In fact, it mimics human writing by parsing everything it has ever processed and crafting sentences using the most common words and phrases. Even if AI detectors aren’t specifically trained to flag less complex writing, the tools learn to do so by seeing over and over that AI-generated writing is less complex.
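To make the idea of "predictable" word choice concrete, here is a minimal sketch, not the method of Turnitin or any other detector named in this story. It assumes a toy word-frequency model built from a tiny, made-up reference text and scores a passage by its perplexity, a standard measure of how surprising the words are to the model. The function names `train_unigram` and `perplexity` and both sample sentences are hypothetical and exist only for illustration.

```python
import math
from collections import Counter


def train_unigram(corpus_tokens):
    """Build a toy word-probability function with add-one smoothing.

    Assumption: real detectors rely on large language models, not a
    word-frequency table; this only illustrates the scoring idea.
    """
    counts = Counter(corpus_tokens)
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # one extra slot shared by all unseen words

    def prob(word):
        return (counts.get(word, 0) + 1) / (total + vocab_size)

    return prob


def perplexity(text, prob):
    """Lower perplexity means the words are more predictable to the model."""
    tokens = text.lower().split()
    if not tokens:
        raise ValueError("text must contain at least one token")
    log_sum = sum(math.log(prob(token)) for token in tokens)
    return math.exp(-log_sum / len(tokens))


# Hypothetical reference text standing in for "everything the model has seen".
reference = (
    "the study was good and the results were good "
    "the students wrote the paper and the paper was good"
).split()
prob = train_unigram(reference)

# Hypothetical samples: one with common words, one with rarer vocabulary.
simple_text = "the paper was good and the study was good"
varied_text = "the manuscript proved remarkably nuanced albeit somewhat verbose"

simple_score = perplexity(simple_text, prob)
varied_score = perplexity(varied_text, prob)

print(f"simple wording: {simple_score:.1f}")
print(f"varied wording: {varied_score:.1f}")
```

In this sketch the plainly worded sentence gets the lower score. A detector that treats low scores as a sign of machine writing would therefore be more suspicious of it, whoever wrote it, which is the pattern the Stanford researchers describe.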

Weixin Liang, one of the authors of the Stanford study, learned Cantonese and Mandarin before English. He was skeptical about claims of near-perfect accuracy with AI detectors and wanted to look more closely at how they worked for students with linguistic backgrounds like his.

“The design of many GPT detectors inherently discriminates against non-native authors, particularly those exhibiting restricted linguistic diversity and word choice,” Liang said via email.

After ChatGPT debuted in November of last year, many of the nation’s nearly 950,000 international students, like their peers, considered the implications. Educators were panicking about the prospect of students using generative AI to complete assignments. And international students, allowed to study here with education-specific visas, quickly realized their vulnerability in the arms race that sprang up between AI generators and detectors.

Hai Long Do, a rising junior at Miami University in Oxford, Ohio, said it’s scary to think that the hours he spends researching, drafting, and revising his papers could be called into question because of unreliable AI detectors. To him, a native of Vietnam, biased detectors represent a threat to his grades, and therefore his merit scholarship.

“Much worse,” Do said, “is that an AI flag can affect my reputation overall.”

Some international students see additional risks. Colleges and universities routinely advise their international students that charges of academic misconduct can lead to a suspension or expulsion that would undermine their visa status. The fear of deportation can feel legitimate.

Shyam Sharma is an associate professor at Stony Brook University writing a book about the United States’ approach to educating international students. He says universities routinely fail to support this subgroup on their campuses, and professors often don’t understand their unique circumstances. Sharma sees the continued use of faulty AI detectors as an example of how institutions disregard the nation’s international students.

“Because the victim, right here, is less important,” Sharma said. “The victim here is less worthy of a second thought, or questioning the tool.”

There have been educators, however, who have questioned the tool, discovering, like Hahn, the fallibility of AI detectors and noting the serious consequences of unfounded accusations. As campuses reopen for the fall semester, faculty must consider whether the latest research makes a clearer case for scrapping AI detectors altogether.

In Liang’s paper, his team pointed out that false accusations of cheating can be detrimental to a student’s academic career and psychological well-being. The accusations force students to prove their own innocence.

“Given the potential for mistrust and anxiety provoked by the deployment of GPT detectors, it raises questions about whether the negative impact on the learning environment outweighs the perceived benefits,” they wrote.

Diane Larryeu, a native of France, is studying at Cardozo School of Law this year in New York City. Last year, in a common law master’s program near Paris, her friend’s English essay was flagged as being AI-generated, she said. When asked if she was concerned the same might happen to her because English is her second language, as it is for her friend, her answer was direct: “Of course.” All she can do is hope it can be resolved quickly. “I would just explain to my teacher and hope they understand,” Larryeu said.

OpenAI shut down its AI detector at the end of July because of low accuracy, and Quill.org and CommonLit did the same with their AI Writing Check, saying generative AI tools are too sophisticated for detection. Turnitin, however, has only doubled down on its claims of high accuracy.

Annie Chechitelli, chief product officer for Turnitin, said the company’s tool was trained on writing by English speakers in the U.S. and abroad as well as multilingual students, so it shouldn’t have the bias Liang’s paper identified. The company is conducting its own research into whether the tool is less accurate when assessing the writing of non-native English speakers. While that research hasn’t been published yet, Chechitelli said so far it looks like the answer is no.

Still, she admitted the tool ends up learning that more complex writing is more likely to be human, given the patterns across training essays.

Heewon Yang, a rising senior at New York University and a native of South Korea, is frustrated by AI detectors and her vulnerability to them. “If it’s the AI picking up on our language patterns and automatically deciding, I don’t know how I can prevent that,” she said.

That’s why Liang said he is skeptical Turnitin’s detector can avoid the biases his team identified in their paper.

“While Turnitin’s approach seems well-intentioned,” he said by email, “it’s vital to see the results of their ongoing tests and any third-party evaluations to form a comprehensive understanding of their tool’s performance in real-world scenarios.”

In June, Turnitin updated its software to allow institutions to disable the AI writing indicator, so even though the software will continue to assess the writing for AI, its conclusion won’t be displayed for instructors. As of the end of July, only two percent of Turnitin customer institutions had taken advantage of that option, according to the company.

The University of Pittsburgh was one. In a note to faculty at the end of June, the university’s teaching center said it didn’t support the use of any AI detectors, citing the fact that false positives “carry the risk of loss of student trust, confidence and motivation, bad publicity, and potential legal sanctions.”

While the experience of international students wasn’t at the center of their decision-making, John Radziłowicz, interim director of teaching support at the University of Pittsburgh, said his team tested a handful of available AI detectors and decided false positives were too common to justify their use. He knows faculty are overwhelmed with the idea of students using AI to cheat, but said he has been encouraging them to focus on the potential benefits of AI instead.

“We think that the focus on cheating and plagiarism is a little exaggerated and hyperbolic,” Radziłowicz said. In his view, using AI detectors as a countermeasure creates too much potential to do harm.