
Hello World is a weekly newsletter, delivered every Saturday morning, that goes deep into our original reporting and the questions we put to big thinkers in the field.

Hi there,

It’s me, Tara García Mathewson, back in the Hello World rotation to get you thinking about civil rights in education.

Earlier this week, the Center for Democracy & Technology (CDT) put out a report arguing that decades-old civil rights laws should be protecting students from discrimination based on their race—but they’re not. Reporting from The Markup was at the heart of one of its examples.


False Alarm: How Wisconsin Uses Race and Income to Label Students “High Risk”

The Markup found the state’s decade-old dropout prediction algorithms don’t work and may be negatively influencing how educators perceive students of color

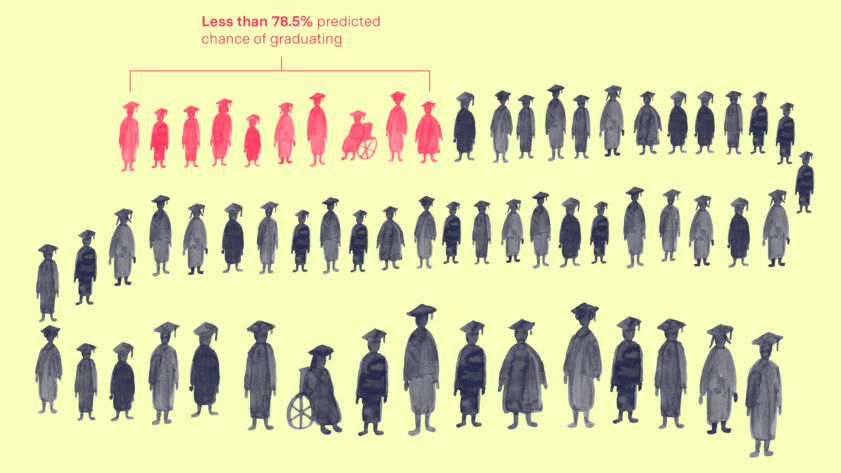

Did you see the story Todd Feathers wrote in April about an early warning system in Wisconsin designed to identify students at risk of dropping out? Well, the point of systems like these is to help schools figure out where to focus limited resources. They use past data about individual students, their schools, and districts to make predictions about current students. In Wisconsin, the system tends to be used by high schools considering how to serve incoming freshmen. But Todd cited research out of the University of California, Berkeley, that looked at 10 years' worth of data from Wisconsin's system and found that being labeled "high risk," and therefore targeted for additional services, may not have made a difference.

Kristin Woelfel, policy counsel at the Center for Democracy & Technology and lead author of this week's report, said those findings set off alarm bells. Government entities have to be careful about when they take race into account in treating people differently. Woelfel told me there's a basic standard in civil rights law: Use of race must be "narrowly tailored to achieve a compelling government interest."

The Wisconsin system ostensibly used race to help prevent dropouts. That sounds like a compelling government interest! But not if it didn't work. The UC Berkeley researchers looked at students just above and just below the cutoff for being labeled high risk. They expected that students who got the label would receive relatively more services and support, and therefore graduate at relatively higher rates, than similar students who narrowly missed it. They didn't find that. The "high risk" label may not have helped students at all: The researchers couldn't rule out a 0 percent increase in graduation rates as a result of the label.

I discussed this with Cody Venzke, a senior policy counsel at the ACLU, which co-signed a letter the CDT sent to the U.S. Department of Education and the White House this week. The coalition urged the federal government to follow up its 2022 Blueprint for an AI Bill of Rights with more emphasis on civil rights.

Venzke said it’s always worth being suspicious when race is used to make decisions about how to treat individuals, given historical discrimination. In Wisconsin, The Markup’s reporting made clear educators were not properly trained to understand the dropout risk labels and intervene accordingly. The investigation features students who said they found the labels stigmatizing. And if that stigma isn’t offset by demonstrable benefits for students—in higher graduation rates, for example—Venzke sees the potential for a civil rights violation.

But maybe it doesn't have to be that way. Venzke points out that schools are obligated to protect students' civil rights, ensure their algorithmic systems are fair, and protect student privacy.

“One of the ways you can achieve all three of those is by using aggregate data where aggregate data will fulfill the purpose,” Venzke said.


As it turns out, the UC Berkeley researchers found it would. In their paper, currently under peer review, they recommend Wisconsin scrap its use of individual factors like a student’s race or even test scores in determining that student’s dropout risk. Juan Perdomo, lead author on the paper and now a postdoctoral fellow at Harvard, told me that in highly segregated Wisconsin, building an early warning system with data from entire schools or districts offers equally reliable predictions about which students are likely to drop out.

“It also provides a much more actionable way of improving graduation outcomes,” Perdomo told me.

And isn’t that the point? Early warning systems aren’t supposed to just be statistical exercises in identifying students at risk. They’re supposed to help better serve those students. If an early warning system identifies entire schools as high risk—because it doesn’t factor in individual characteristics—maybe it would drive statewide spending or districtwide training toward those schools and create a clearer path toward making a difference.

For its part, the Wisconsin Department of Public Instruction is studying its early warning systems and considering changes. Communications Officer Chris Bucher told me the department has been hearing concerns for some time now. So, we'll see.

In the meantime, we at The Markup will continue to report on algorithms that fuel discrimination and what that means for people’s lives.

Thanks for reading!

Tara García Mathewson

Reporter

The Markup