In the lead-up to the presidential election, the major social media platforms spent weeks making promises about how they’d handle the incoming results and any misinformation related to them.

As of this writing, Election Day results were still coming in, and neither Joe Biden nor Donald Trump had won. The ongoing uncertainty has provided plenty of opportunity for online conspiracies, premature declarations of winners, and general vitriol. So how well did the platforms stick to their promises?

Results have been mixed. While the major platforms made use of their enforcement powers, experts are questioning whether they’re doing enough, and whether their initial promises ever had a shot at slowing the spread of misinformation to begin with.

“How are we supposed to be impressed when there are so many obvious failures?” said Jennifer Grygiel, an assistant professor of communications at Syracuse University, who studies social media and content moderation.

What Was Promised?

The biggest promises focused on labeling and removing problematic election-related content.

Twitter’s, Facebook’s, and YouTube’s election policies all called for their platforms to place labels on election-related information noting that the count was ongoing. Facebook and Facebook-owned Instagram said they’d post a note at the top of users’ feeds that “votes are still being counted.” YouTube said it would append a label to election results videos and searches saying that “election results may not be final” while linking to Google’s election results page.

The platforms also pledged to label or remove misinformation, including verifiably false claims or premature declarations of victory. Tech companies put in place similar processes around labeling and removing information intended to mislead or discourage voters, or that encouraged violence.

Those companies also promised to make changes to political advertising. Twitter stopped selling any ads used for political purposes last year, and Facebook, as well as Google, said they would institute similar bans around Election Day. In both cases, the changes will be indefinite.

What Happened?

Platforms made good on their promises to release broad, platform-wide labels about votes still being counted, and there were no especially high-profile incidents this week related to political advertising. Facebook, in one notable incident on Thursday, shut down a group of more than 300,000 members in which there were calls for election-related violence.

But the companies’ actions around individual posts have been more contentious, in particular posts to President Donald Trump’s Twitter account, which over the course of Tuesday and Wednesday turned into a minefield of labeled tweets.

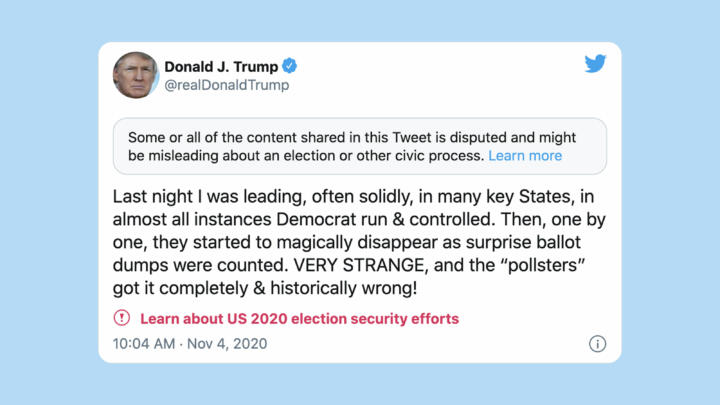

Shortly after the polls closed, the president hit Twitter, variously claiming that Democrats “are trying to STEAL the Election,” that ballots had “started to magically disappear,” and that forces were “working hard to make up 500,000 vote advantage in Pennsylvania disappear — ASAP.”

Twitter slapped those, and other tweets, with a label noting that the information was “disputed and might be misleading about an election or other civic process.”

But Twitter’s biggest test so far came as the Trump campaign claimed victory in Pennsylvania on Wednesday afternoon—although that state, as of Thursday afternoon, had still not been called by any television network, mainstream newspaper, or the Associated Press. Trump, his son Eric Trump, and press secretary Kayleigh McEnany repeated the claim in tweets, to which Twitter attached a label noting that the race may not have been called when the tweets were sent.

“Twitter continues to take enforcement action on Tweets that prematurely declare victory or contain misleading information about the election broadly, in line with our Civic Integrity Policy and recent guidance on labeling election results, and including by public figures,” Twitter spokesperson Trenton Kennedy said in an emailed statement.

Facebook has been a more complicated story. The company had previously told The Wall Street Journal that it would only label posts that prematurely declared victory nationally, not in individual states. The company quickly reversed course after the Trump campaign’s Pennsylvania posts were sent out, and moved to label posts claiming victory in state races.

Adam Mosseri, the head of Instagram, said in a tweet that it was “critical that people have accurate information about elections results,” and so both Facebook and Facebook-owned Instagram would label premature or inaccurate state-level declarations of victory as well. Facebook did not respond to a request to detail its original rule, or the change.

Video platforms like YouTube made controversial decisions as well. As CNBC reported on Wednesday, a video from the Trump-friendly One America News Network claimed “Trump won” but was not removed for violations. A second video, released later, made similar claims and was not removed either.

A YouTube spokesperson, Ivy Choi, said the company had removed ads from both of the videos, but they otherwise didn’t meet the company’s criteria for removal.

“Our Community Guidelines prohibit content misleading viewers about voting, for example content aiming to mislead voters about the time, place, means or eligibility requirements for voting, or false claims that could materially discourage voting,” Choi said. “The content of this video doesn’t rise to that level.”

Choi said the company removed several livestreams on Election Day that violated spam policies.

“They’re just making this up as they go along,” Grygiel said of the platforms. “This doesn’t show strategic planning; it doesn’t show any type of risk analysis.”

Notably, the enforcement action didn’t fall on just one side of the political divide. When some Twitter users tweeted, prematurely, that Wisconsin had gone to Biden on Wednesday, slightly before media outlets called the state for the former vice president, Twitter labeled the posts as not being corroborated by official sources.

Have the Tech Companies Done Enough?

Looming over the conversation are related questions about whether the platforms’ plans would ever be enough to properly stem the tide of misinformation.

Take the Trump campaign’s tweets about winning Pennsylvania. Eric Trump’s tweet declaring a win was up for more than 20 minutes, reportedly gathering about 14,000 retweets during that time. No matter how effective a label is at combating the misinformation, many users saw the tweet before the label ever appeared.

“Applying a label after the fact is simply not enough because Twitter is a real-time platform,” said Grygiel, who has advocated moderating the president’s feed before he posts and suggests that deleting the false tweets would be more effective.

Grygiel said the problems with policy enforcement point to broader issues around content moderation for political figures, as companies like Facebook argue that keeping up disputed information from high-profile government officials is in the public interest.

“When did that become the cultural norm?” Grygiel said. “It’s a bad argument.”

And as vote-counting and litigation around that counting drag on, more flaws in the platforms’ systems could be exposed.