Facebook broadcast a sweeping pledge in a Feb. 8 blog post: It would remove false claims about vaccines from its platform. The declaration followed a series of promises over the preceding months to curb bogus health information on its site, particularly misinformation surrounding the pandemic. This included a commitment in September to stop recommending “health groups” in order to halt the practice of people turning to fellow Facebook users instead of doctors for medical advice.

But data from The Markup’s Citizen Browser project shows that not only is COVID-19 misinformation still easy to find on Facebook, the company has also continued to recommend health groups to its users, including blatantly anti-vaccine groups and pages explicitly founded to propagate lies about the pandemic.

Citizen Browser consists of a nationwide panel of Facebook users who automatically share their news feed data with The Markup, including which groups the platform recommends they join.

Even in this relatively small subset of 3,156 Facebook users, we found hundreds of health groups recommended to our panelists, including 31 groups or pages that contained anti-vaccine or anti-mask propaganda and misinformation about the pandemic. The recommendations we tracked occurred between Nov. 15 and May 1, including at least seven after Facebook’s latest announced crackdown in February.

For “health” groups, we searched for any group recommended to our panelists with a name that included the word “health” or any one of 12 health- and illness-related terms, and eliminated groups containing any of a list of keywords suggesting the groups were not related to bodily human health (e.g., “dog,” “cat,” “relationship”). To find pandemic-related information, we reviewed those health groups, along with additional searches for groups and pages with terms like “health freedom” and “medical freedom” and then examined the posts and descriptions to confirm the nature of their content. In some cases, the groups’ titles themselves overtly pointed to misinformation, despite Facebook’s stated policies. See more on our data here.
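The name-based filter described above can be sketched roughly as follows. This is a minimal illustration, not The Markup's actual code: the function names are hypothetical, and the term lists contain only the handful of keywords named in this article, not the full list of 12 health terms or the full exclusion list. It matches on whole words so that an exclusion term like “cat” does not accidentally match a word such as “education.”

```python
import re

# Illustrative lists only (assumption): the article names a few terms,
# not the complete inclusion or exclusion lists used in the analysis.
INCLUDE_TERMS = ("health", "covid", "vaccine")
EXCLUDE_WORDS = {"dog", "dogs", "cat", "cats", "relationship", "relationships"}


def tokens(name):
    """Split a group name into lowercase alphanumeric words."""
    return re.findall(r"[a-z0-9]+", name.lower())


def is_health_group(name):
    """Return True if the name has a health term and no exclusion word."""
    words = tokens(name)
    # Drop groups whose names suggest a non-human-health topic.
    if any(w in EXCLUDE_WORDS for w in words):
        return False
    # Prefix matching lets "healthy" or "vaccines" count as a hit.
    return any(w.startswith(t) for w in words for t in INCLUDE_TERMS)


def filter_health_groups(names):
    """Keep only the recommended group names flagged as health groups."""
    return [n for n in names if is_health_group(n)]
```

A filter like this only narrows the field. As the methodology notes, the posts and descriptions of the resulting groups still had to be examined by hand to confirm what they contained.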

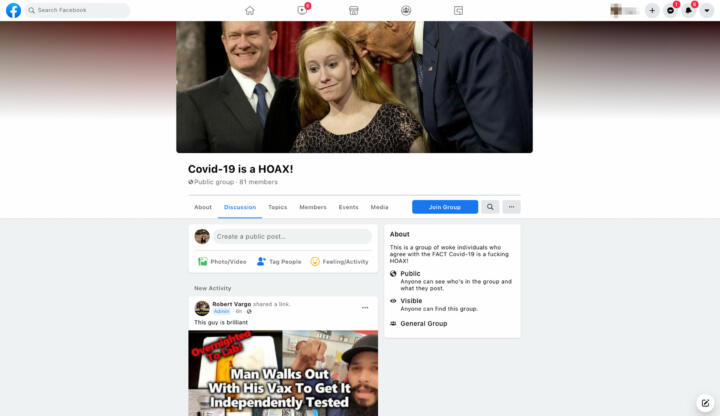

On March 26, for instance, Facebook suggested that one of our panelists join a group called “Covid-19 is a HOAX!” The public group, with 81 members, describes itself as “a group of woke individuals who agree with the FACT Covid-19 is a fucking HOAX!” The group’s discussion feed is mostly filled with anti-vaccine video testimonials from the far-right site BitChute. Popular hashtags within the group include #ExposeCNN, #justsayno, and #wewillsupportyou.

Even more recently, on April 27, another Citizen Browser panelist received a suggestion to follow the “Worldwide Health Freedom” page. One of the latest posts on this page links to a blog about a gym that offers free memberships to anyone refusing to be vaccinated.

“We have a policy against recommending health-related groups on our platforms,” Kevin McAlister, a Facebook spokesperson, said in an email. “And while some of these groups were already removed for violating our policies or not being recommended, we are investigating why others were recommended in the first place.”

Other groups recommended to Citizen Browser panelists over the past six months included “Pennsylvania for Medical Freedom” and “VACCINES: LEARN THE RISKS.” Facebook also recommended “Health Freedom Group,” which had more than 73,000 members and was run by prominent anti-vaccine advocate Erin Elizabeth. Facebook shuttered the group last week after it had reached this size in just under a year of operation. In all, 16 groups and pages filled with vaccine misinformation were recommended to our panelists.

We also found 15 recommendations for groups or pages that explicitly encouraged members to flout mask-wearing safety guidelines, including “Ohio against masks!,” “Christians Against Facemasks,” and “Do not support PA businesses that require masks,” the last of which was recommended to four panelists.

Some of the anti-vaccination pages have been dormant for months or years—but Facebook nevertheless pushed them to our panelists in their group recommendations. “Southern California Proud Parents of Unvaccinated Children,” for example, hasn’t posted since April 2015, and the last post for “Ann Arbor for Medical Freedom” was in July 2020. “Worldwide Health Freedom” posted once in March, but before that its most recent post was in October.

These pages have between roughly 200 and 500 followers and have posts like “No shots, no school? Not true” and “for every human illness there exists a plant, which is the cure.” In all of the pages suggested to our panelists, Facebook shows users a recommendation box that offers “Related Pages” to explore. Those related pages are also filled with vaccine misinformation and have names like “Vaccine Doubt,” “Vaccine Injury Lawyers,” and “Vaxfreekids.” When users click on those pages, the recommendation rabbit hole continues.

“In terms of the recommendations, there’s something in Facebook’s policy that’s broken,” said Fadi Quran, a campaign director who studies social media misinformation for Avaaz, a nonprofit advocacy group. “Facebook’s algorithm learns to recommend content to people that is in many cases inflammatory and misinformation, even when the platform says it wants to do the opposite.”

Shifting Policies

Over the past couple of years, Facebook has struggled over what to do with anti-vaccine content on its platform, often changing its approach on how to handle it. During the pandemic, the company’s policy shifts have become even more frequent.

In March 2019, Facebook first said it would stop recommending groups that contained vaccine misinformation. Then, last September, the company said it would stop recommending health groups to users as a way to cut down on misinformation. In October, it announced it would ban vaccine misinformation in ads. And in November it said it would take down some of the biggest accounts peddling such content. In February came the announcement of the site-wide purge.

With that pledge, the company said it worked with public health agencies to create a list of dozens of false claims surrounding the coronavirus, including the idea that COVID-19 is man-made and that vaccines aren’t effective at protecting against the virus. The company said it would begin enforcing the policy immediately. In March, Facebook CEO Mark Zuckerberg submitted written testimony to Congress saying, “[W]e have made fighting misinformation and providing people with authoritative information a priority for the company.”

Facebook spokesperson McAlister said the company has removed more than “18 million pieces of content” globally for violating its rules on vaccine misinformation. It also applied “false” warning labels to more than “167 million pieces of COVID-19 content” that he said were rated by independent fact-checkers. “We’re continuing to work with health experts to keep pace with the evolving nature of the pandemic and update our policies as new facts and trends emerge,” McAlister added.

“Every time Facebook puts out a policy, it’s exhausting,” said Madelyn Webb, a senior researcher who focuses on social media and misinformation at Media Matters. “You can’t just take down a piece of content and expect it to be gone; you have to look at the networks. It’s silly to claim that these actions are addressing misinformation in any way.”

As recently as late April, Webb and her colleagues found 117 active groups on Facebook that they identified as anti-vaccine. Combined, those groups had roughly 275,000 members. Many of the groups explicitly called themselves “anti-vaxx” or “anti-vaccine,” according to Webb.

With health groups overall, Facebook’s vow to stop recommendations has been particularly confusing.

“Facebook Groups, including health groups, can be a positive space for giving and receiving support during difficult life circumstances,” Tom Alison, Facebook’s vice president of engineering, wrote in the September blog post. But “to prioritize connecting people with accurate health information, we are starting to no longer show health groups in recommendations.”

Still, some Facebook users, including reporters for The Markup, see a “health” category when looking to find new groups to join through the site’s “Discover” tab. And a total of 1,159 Citizen Browser panelists—well over a third of the panel—received recommendations for groups that contained one of our health keywords, such as “health,” “COVID,” or “vaccine.”

A brief scan of these recommended groups shows that many appear to be dedicated to topics like healthy living or fitness routines. Some seem explicitly contrary to the sorts of health discussion that Alison referred to in his post, like “Liver and Gallbladder Flush for Optimal Health,” “Open Heart Surgery Support Group,” and “Panniculectomy Recovery.” The “Whipple Surgery Survivor Group” says it’s for people familiar with the surgery typically done to treat pancreatic cancer. The “About” section of the page states that people should contact their doctors for medical advice and shouldn’t change their medical routines without first consulting their personal physicians.

Other groups recommended to our panelists tout remedies that could run counter to medical professionals’ opinions. For example, several promote alternative medicine, such as “Psychedelics, Sacred Medicines, Purpose & Business” and “Herbal Recovery From Opiates.” Others encourage plastic surgery or 1,200-calorie-and-less diets. One group, “Meat Health,” provides information and support around a “carnivore” diet.

When asked why Facebook is still recommending health groups, despite its stated policy, McAlister said, “We use machine learning to remove groups that post a significant amount of health or medical advice from our recommendation surfaces. Groups with a focus on positive health topics including fitness, wellness or personal development are still permitted.”

The Biggest Groups

Experts say groups that look like healthy lifestyle forums can sometimes harbor anti-vaccine motives, and for anti-vaccine activists that health focus can have a two-fold effect. First, it brings in members who might not otherwise join anti-vaccination groups; and second, it makes it easier for anti-vaccination groups to camouflage themselves within the health category and hide from Facebook’s moderators.

“What Facebook does is kind of a surface clean,” said Naomi Smith, a sociologist at Federation University Australia who studies vaccine misinformation on social media. “Users are smart. They can figure out how to get around these things. Even if you have a public page, if you make it about natural health, then they’ll probably leave you alone.”

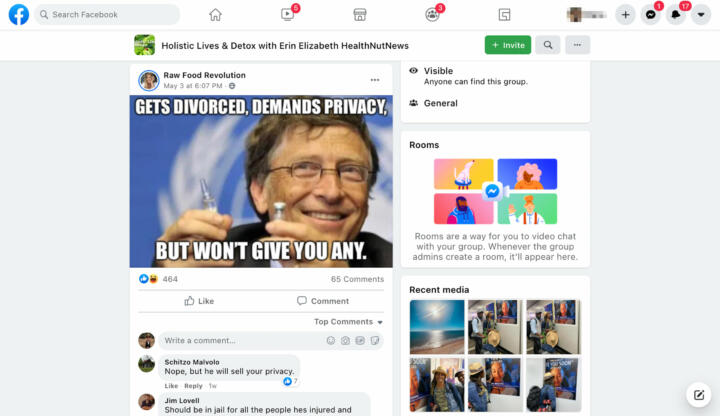

That tactic seemed to work for two of the biggest anti-vaccine groups recommended to users in the Citizen Browser panel, “Health Freedom Group” and “Holistic Lives,” which had more than 73,000 members and more than 65,000 members, respectively.

Up until last Thursday, the two groups were active, posting around a dozen times per day. Both groups listed Erin Elizabeth as an admin. Elizabeth, a self-described “health nut,” posted about things like mental health being related to a “leaky gut” and how raw food is the solution to good health. Interspersed were conspiracy theories about Microsoft co-founder Bill Gates nefariously pushing vaccines, claims of deaths from COVID-19 vaccines, and complaints that Elizabeth was constantly under threat of being censored by Facebook.

On Thursday, Facebook deleted all of Elizabeth’s accounts, including her personal account. It also stopped allowing anyone to post links from her business website.

“They’ve just done another misinfo purge today, in what has to be one of the slowest course corrections ever,” Smith wrote in an email to The Markup last Thursday.

Facebook’s McAlister told The Markup that Elizabeth’s pages have been “permanently removed” because they violated company policies. He didn’t specify which policies the pages violated.

Elizabeth didn’t respond to a request for comment.

As in other anti-vaccination groups on Facebook, many of which remain active, Elizabeth and other group administrators used simple cloaked language to avoid triggering Facebook’s algorithm. “Rona” was often used for the coronavirus, and “V” was code for vaccine.

In a May 4 post, Elizabeth uploaded a screenshot of a New York Times article about vaccinating adolescents over the age of 12. In the screenshot she scrubbed the words “vaccine” and “vaccination.” She added a message to the post that said, “They’re coming for the kids… If you wanna discuss this join me over it [sic] censorship proof Telegram.”

Like other anti-vaccine proponents, Elizabeth often used her Facebook groups to build her social media channels on Telegram, Gab, and TikTok, and to amass new subscribers for her email newsletter. She also encouraged members to visit her website, where they could buy products like personalized vials of “nano hemp skin serum” for $89.99.

Since disappearing from Facebook, Elizabeth has continued to be active on Telegram, which has become popular with extremists of all kinds due to its lax content moderation.

And despite removing Elizabeth’s groups, Facebook has resisted taking down the accounts of other notable anti-vaccine activists.

In March, the Center for Countering Digital Hate (CCDH) published a report that pinpointed 12 leaders in the anti-vaccine movement who produce high volumes of vaccine misinformation and have seen rapid growth in their social media accounts over the last few months. Among those leaders were well-known vaccine opponent Robert F. Kennedy Jr., alternative health e-commerce guru Joseph Mercola, and Elizabeth, who happens to be Mercola’s longtime girlfriend and often refers to him as “my better half.”

Facebook’s McAlister said several accounts associated with those mentioned in the report have been removed or face other restrictions for violating company policies, but he didn’t specify which accounts.

“CCDH is a political group of censorship extremists funded by dark money,” Mercola wrote in an email to The Markup, adding that the campaign was allegedly led by pharmaceutical public relations firms. “Their reason for their campaign to deplatform me is because I suggest that COVID was lab engineered in China and was anticipated by global elites. The evidence supporting the Wuhan lab leak is overwhelming.”

CCDH did not respond to a request for comment.

When asked for comment on the CCDH report, Kennedy said in a phone call with The Markup, “They accuse me of spreading vaccine misinformation but have yet to point to a single item where there is an erroneous factual assertion.” Citing First Amendment protections, he added that “Americans should be able to criticize our government, and they should be able to criticize pharmaceutical products.”

On the heels of the report, a coalition of 12 state attorneys general called on Facebook to “take immediate steps” to enforce its policies around vaccine misinformation. Facebook also got a letter from Sens. Amy Klobuchar (D-MN) and Ben Ray Luján (D-NM) saying it and other social media companies have “failed to adequately protect Americans.” Sen. Mark Warner (D-VA) wrote Facebook too, detailing his concerns about anti-vaccination groups on its site.

“Facebook has previously committed to reducing the spread of misinformation on its platforms,” Warner wrote. “However, despite these promises, Facebook’s enforcement of its own policies is consistently and demonstrably insufficient.”

Even with the government backlash and Mercola and Kennedy being public figures in the anti-vaccine world, Facebook still hasn’t barred their accounts—although it did shutter Kennedy’s Instagram account in February. And in the case of Elizabeth, even though Facebook pulled her “Health Freedom Group” and “Holistic Lives” group, according to our Citizen Browser project it still actively recommended them to people as recently as Dec. 19 and Jan. 15, respectively.