This article was co-reported with STAT, a national publication that delivers trusted and authoritative journalism about health, medicine, and the life sciences.

Open the website of Workit Health, and the path to treatment starts with a simple intake form: Are you in danger of harming yourself or others? If not, what’s your current opioid and alcohol use? How much methadone do you use?

Within minutes, patients looking for online treatment for opioid use and other addictions can complete the assessment and book a video visit with a provider licensed to prescribe suboxone and other drugs.

But what patients probably don’t know is that Workit was sending their delicate, even intimate, answers about drug use and self-harm to Facebook.

A joint investigation by STAT and The Markup of 50 direct-to-consumer telehealth companies like Workit found that quick, online access to medications often comes with a hidden cost for patients: Virtual care websites were leaking sensitive medical information they collect to the world’s largest advertising platforms.

On 13 of the 50 websites, we documented at least one tracker—from Meta, Google, TikTok, Bing, Snap, Twitter, LinkedIn, or Pinterest—that collected patients’ answers to medical intake questions. Trackers on 25 sites, including those run by industry leaders Hims & Hers, Ro, and Thirty Madison, told at least one big tech platform that the user had added an item like a prescription medication to their cart, or checked out with a subscription for a treatment plan.

The trackers that STAT and The Markup were able to detect, and the information they sent, represent a floor, not a ceiling. Companies choose where to install trackers on their websites and how to configure them. Different pages of a company’s website can have different trackers, and we did not test every page on each company’s site.

See our data here.

All but one website examined sent URLs users visited on the site and their IP addresses—akin to a mailing address for a computer, which can be used to link information to a specific patient or household—to at least one tech company. The only telehealth platform that we didn’t observe sharing data with outside tech giants was Amazon Clinic, a platform recently launched by Amazon.

Health privacy experts and former regulators said sharing such sensitive medical information with the world’s largest advertising platforms threatens patient privacy and trust and could run afoul of unfair business practices laws. They also emphasized that privacy regulations like the Health Insurance Portability and Accountability Act (HIPAA) were not built for telehealth. That leaves “ethical and moral gray areas” that allow for the legal sharing of health-related data, said Andrew Mahler, a former investigator at the U.S. Department of Health and Human Services’ Office for Civil Rights.

“I thought I was at this point hard to shock,” said Ari Friedman, an emergency medicine physician at the University of Pennsylvania who researches digital health privacy. “And I find this particularly shocking.”

In October and November, STAT and The Markup signed up for accounts and completed onboarding forms on 50 telehealth sites using a fictional identity with dummy email and social media accounts. To determine what data was being shared by the telehealth sites as we completed their forms, we examined the network traffic between trackers using Chrome DevTools, a tool built into Google’s Chrome browser.
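
As a rough illustration of this kind of traffic review, the sketch below scans a network capture for requests sent to advertising platforms. It assumes the capture was exported from Chrome DevTools as a HAR file and already parsed into a dictionary; the HAR export step, the hostname list, and the sample requests are assumptions made for this example, not a description of the reporters' actual workflow.

```python
from urllib.parse import urlparse

# Illustrative, incomplete hostname list (an assumption for this sketch).
TRACKER_HOSTS = ("facebook.com", "google-analytics.com", "tiktok.com")


def is_tracker_host(hostname: str) -> bool:
    """True if hostname is a listed tracker domain or one of its subdomains."""
    return any(hostname == h or hostname.endswith("." + h) for h in TRACKER_HOSTS)


def find_tracker_requests(har: dict) -> list:
    """Return host, URL, and POST body for each request sent to a listed host."""
    hits = []
    for entry in har.get("log", {}).get("entries", []):
        request = entry.get("request", {})
        url = request.get("url", "")
        hostname = urlparse(url).hostname or ""
        if is_tracker_host(hostname):
            post_body = (request.get("postData") or {}).get("text", "")
            hits.append({"host": hostname, "url": url, "post_body": post_body})
    return hits


# Hypothetical two-request capture using the HAR field layout.
sample_har = {
    "log": {
        "entries": [
            {"request": {"url": "https://telehealth.example/intake"}},
            {"request": {"url": "https://www.facebook.com/tr?ev=AddToCart"}},
        ]
    }
}

hits = find_tracker_requests(sample_har)
for hit in hits:
    print(hit["host"], hit["url"])
```

A real review would also need to read the query strings and request bodies by hand, since the sensitive details travel inside them rather than in the hostname.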

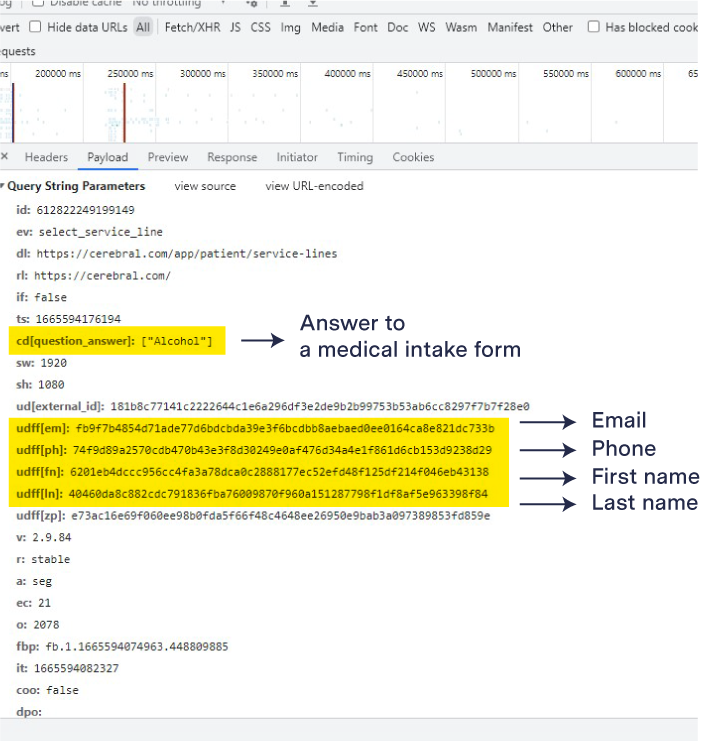

On Workit’s site, for example, we found that a piece of code Meta calls a pixel sent our responses about self-harm, drug and alcohol use, and our personal information—including first name, email address, and phone number—to Facebook.

We found trackers collecting information on websites that sell everything from addiction treatments and antidepressants to pills for weight loss and migraines. Despite efforts to trace the data using the tech companies’ own transparency tools, STAT and The Markup couldn’t independently confirm how or whether Meta and the other tech companies used the data they collected.

After STAT and The Markup shared detailed findings with all 50 companies, Workit said it had changed its use of trackers. When we tested the website again on Dec. 7, we found no evidence of tech platform trackers during the company’s intake or checkout process.

“Workit Health takes the privacy of our members seriously,” Kali Lux, a spokesperson for the company, wrote in an email. “Out of an abundance of caution, we elected to adjust the usage of a number of pixels for now as we continue to evaluate the issue.”

“Advertisers should not send sensitive information about people through our Business Tools,” Dale Hogan, a spokesperson for Meta, wrote in an email.

Patients may assume that health-related data is always protected by privacy regulations including HIPAA. Workit, for one, begins its intake form with a promise that “all of the information you share is kept private and is protected by our HIPAA-compliant software.”

“The very reason why people pursue some of these services online is that they’re seeking privacy,” said David Grande, a digital health privacy researcher at the University of Pennsylvania.

But the reality online is more complex, making it all but impossible for the average user to know whether the company they’re entrusting with their data is obligated to protect it. “Individually, we have a sense that this information should be protected,” said Mahler, who is now vice president of privacy and compliance at Cynergistek, a health care risk auditing company. “But then from a legal and a regulatory perspective, you have organizations saying … technically, we don’t have to.”

Rather than providing care themselves, telehealth companies often act as middlemen connecting patients to affiliated providers covered by HIPAA. As a result, information collected during a telehealth company’s intake may not be protected by HIPAA, while the same information given to the provider would be.

“All the privacy risks are there, with the mistaken but entirely reasonable illusion of security,” said Matthew McCoy, a medical ethics and health policy researcher at the University of Pennsylvania. “That’s a really dangerous combination of things to force the average consumer to deal with.”

In response to questions for this story, representatives of Meta, Google, TikTok, Bing, Snap, and Pinterest said advertisers are responsible for ensuring they aren’t sending sensitive information via the tools. Twitter did not respond to requests for comment.

“Doing so is against our policies and we educate advertisers on properly setting up Business tools to prevent this from occurring,” wrote Meta’s Hogan. “Our system is designed to filter out potentially sensitive data it is able to detect.”

LinkedIn’s tracker “collects URL information which we immediately encrypt when it reaches our servers, delete within 7 days and do not add to a profile,” Leonna Spilman, a spokesperson for the company, wrote in an email.

Nevertheless, three of the seven big tech companies also said they had taken action to investigate or stop the data sharing.

Google is “currently investigating the accounts” in question, spokesperson Elijah Lawal wrote in an email.

“In response to this new information, we have paused data collection from these advertisers’ sites while we investigate,” Snap spokesperson Peter Boogaard wrote in an email.

Pinterest “offboarded the companies in question,” spokesperson Crystal Espinosa wrote in an email.

A Boom Industry on the Edge of the Law

Together, the companies in this analysis reflect an increasingly competitive—and lucrative—direct-to-consumer health care market. The promise of a streamlined, private prescription process has helped telehealth startups raise billions as they seek to capitalize on a pandemic-driven boom in virtual care.

Hims & Hers, one of the largest players in the space, is now a publicly traded company valued at more than $1 billion; competitor Ro has raised $1 billion since its founding in 2017, with investors valuing the company at $7 billion. Thirty Madison, which operates several telehealth companies focused on different medical needs, is valued at more than $1 billion.

The industry’s rapid growth has been enhanced by its ability to use data from tools like pixels to target advertisements to increasingly specific patient populations and to put ads in front of users who have visited their site before. The companies we analyzed mostly provide care and prescriptions for conditions like migraines, sexual health, or mental health disorders rather than comprehensive primary or urgent care—making browsing their websites inherently sensitive.

In the same way visiting an opioid use disorder treatment center can identify an individual as an addiction patient, data about someone visiting a telehealth site that treats only one condition or provides only one medication can give advertisers a clear window into that person’s health. Direct answers to onboarding forms could be even more valuable because they’re more detailed and specific, said McCoy. “And it’s more insidious because I think it would be all that much more surprising to the average person that information that you put in a form wouldn’t be protected. It’s both worse and more unexpected.”

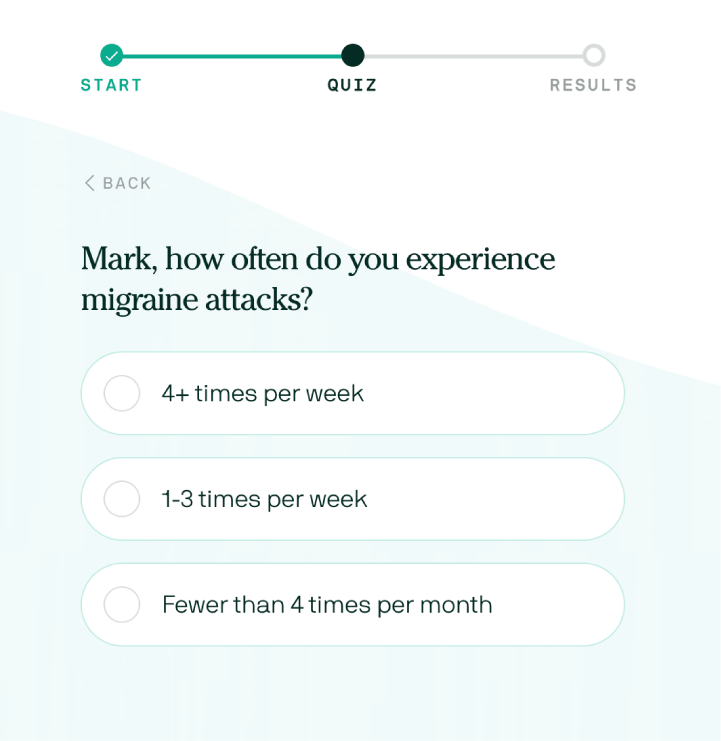

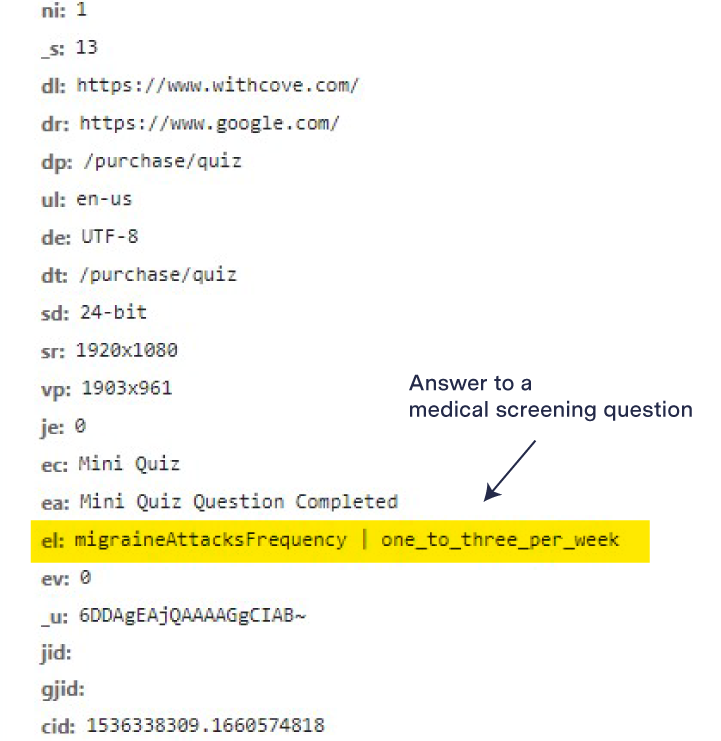

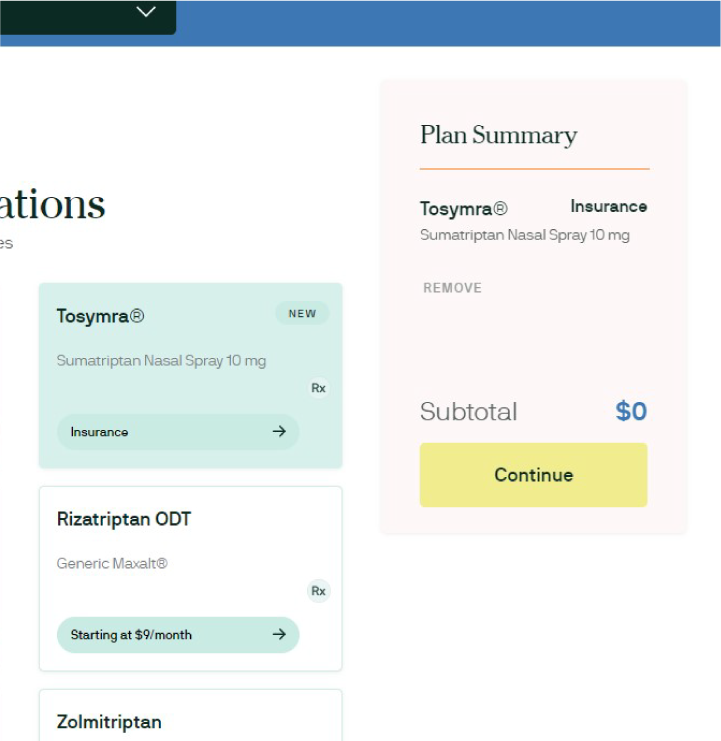

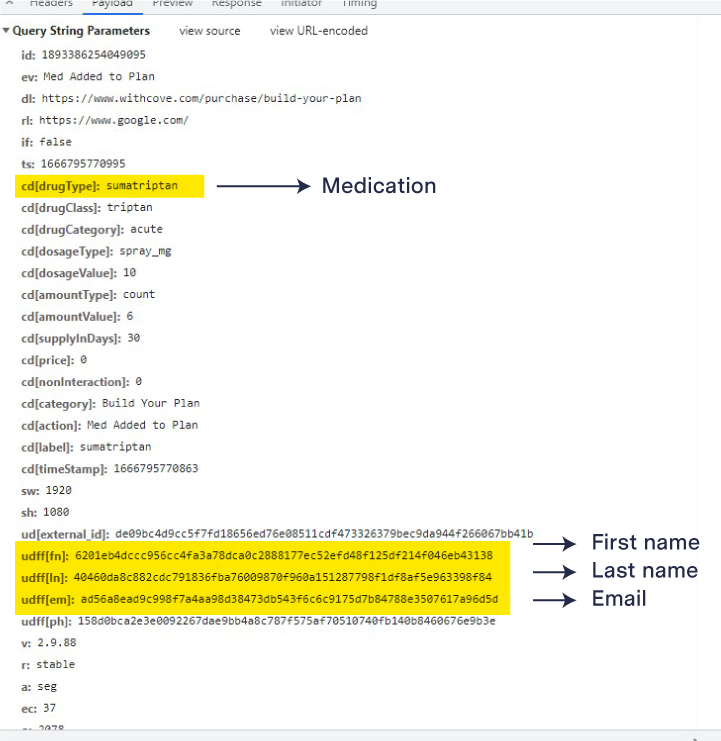

Consider the form for Thirty Madison’s Cove, which offers migraine medications. It prompts visitors to share details about their migraines, past diagnoses, and family history, and during our testing it sent the answers to Facebook and Google. If a user added a medication to the cart, detailed information about the purchase, including the drug’s name, dose, and price, was also sent to Facebook, along with the user’s hashed full name, email, and phone number.

While hashing obscures those details into a string of letters and numbers, it does not prevent tech platforms from linking them to a specific person’s profile, which Facebook explicitly says it does before discarding the hashed data.
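
A minimal sketch of why hashing alone does not anonymize: the same input always produces the same hash, so a platform that already holds a user's email address can hash it and compare. The example assumes SHA-256 over a trimmed, lowercased email address; the hash function, the normalization, the function names, and every address below are illustrative assumptions, not details reported in this investigation.

```python
import hashlib
from typing import List, Optional


def hash_identifier(value: str) -> str:
    """Trim and lowercase an identifier, then return its SHA-256 hex digest."""
    normalized = value.strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def match_hashed_identifier(received_hash: str, known_emails: List[str]) -> Optional[str]:
    """Return the known email whose hash equals the received hash, if any."""
    lookup = {hash_identifier(email): email for email in known_emails}
    return lookup.get(received_hash)


# Hypothetical values: what a website's tracker might send...
sent_by_website = hash_identifier("  Pat.Example@Example.com ")

# ...and the addresses a platform already holds for its own account holders.
platform_accounts = ["alex@example.com", "pat.example@example.com"]

matched = match_hashed_identifier(sent_by_website, platform_accounts)
print(matched)
```

The hashed value looks like a random string to an outsider, but to a party holding the original address it works as a stable identifier.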

“It’s a pure monetization play,” said Eric Perakslis, chief science and digital officer at the Duke Clinical Research Institute. “And yes, everybody else is doing it, it’s the way the internet works.… But I think that it’s out of step with medical ethics, clearly.”

In particular, experts worry that health data could be used to target patients in need with ads for services and therapies that are unnecessary or even harmful.

The big tech platforms that responded for this story say they do not allow targeted advertising based on specific health conditions, and some telehealth companies said they only use the data collected to measure the success of their advertising. However, as The Markup has previously reported, advertisers may still be able to target ads on Facebook using terms that are close proxies for health conditions.

On 35 of the 50 websites, we found trackers sending individually identifying information to at least one tech company, including names, email addresses, and phone numbers.

That presents patients with a Catch-22. “It requires anyone that wants to take advantage of telehealth … to expose a lot of the same information that they would reveal within a protected health care relationship,” said Woodrow Hartzog, a privacy and technology law professor at Boston University—but without the same protections.

In recent months, regulators have begun cracking down on the indiscriminate collection and sale of personal health data.

After issuing a warning to businesses about selling health information in July, the Federal Trade Commission sued data broker Kochava, alleging that the company put consumers at risk by failing to protect location data that could reveal sensitive details about people’s health, such as a visit to a reproductive health clinic or addiction recovery center. Kochava has asked for the case to be dismissed and countersued the FTC.

Meta has also come under significant scrutiny, including congressional questioning, following a Markup investigation that found its pixels sending patient data from hospitals’ websites. Meta is also facing a large class action lawsuit over the breaches.

The increased attention reflects growing fears about how health data may be used once it enters the black boxes of corporate data warehouses—whether it originates from a hospital, a location tracker, or a telehealth website.

“The health data market just continues to kind of spiral out of control, as you’re seeing here,” said Perakslis.

But thanks to their business structures, many of the companies behind telehealth websites appear to be operating on the outskirts of health privacy regulations.

“It Does Seem Deceptive”

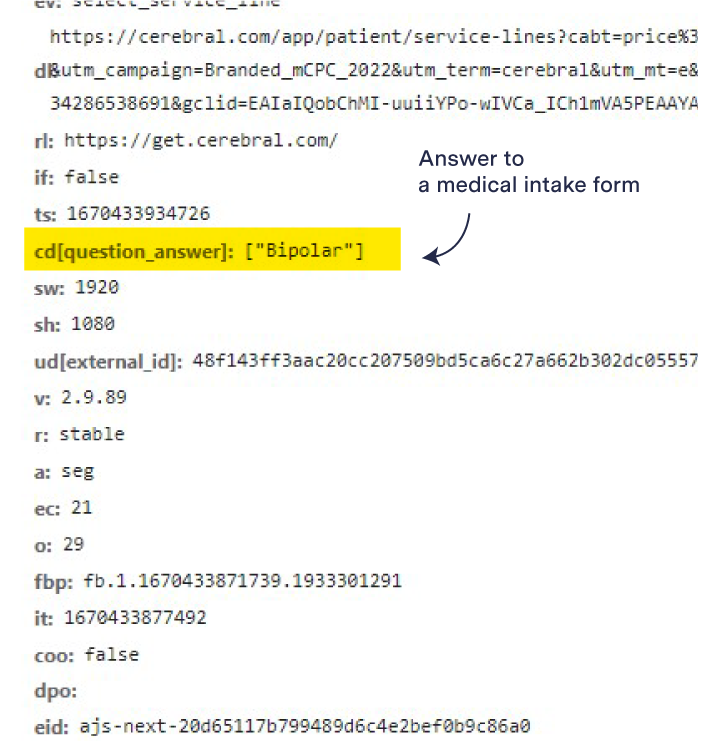

When users visit Cerebral, a mental health company whose prescribing and business practices came under federal investigation this year, they are required to answer a series of “clinically tested questions” that can cover a wide range of conditions, including depression, anxiety, bipolar disorder, and insomnia. During testing, with every response (such as clicking a button to indicate feeling depressed “more than half the days” over the last two weeks), a pixel sent Facebook the text of the answer button, the specific URL the user was visiting when clicking the button, and the user’s hashed name, email address, and phone number.

At a doctor’s office, that kind of detail collected on an intake form would likely be subject to HIPAA. But as with most of the telehealth companies in this analysis, Cerebral Inc. itself doesn’t provide care; its website connects patients with providers like those employed by Cerebral Medical Group, P.A. and others. While those medical groups are HIPAA-covered entities that cannot share protected health information with third parties except under narrow circumstances, Cerebral claims in its privacy policy to be a go-between that is not covered by HIPAA—except in limited cases when it acts as a business associate of a medical group, pharmacy, or lab.

Cerebral did not answer detailed questions that would clarify what these cases might be. But in a Nov. 30 email, spokesperson Chris Savarese said the company would adjust its use of tracking tools. “We are removing any personally identifiable information, including name, date of birth, and zip code from being collected by the Meta Pixel,” he wrote.

However, when we tested Cerebral’s website again on Dec. 7, we found that a Meta Pixel was still sending answers to some intake questions and hashed names to Facebook, and trackers from Snap and Pinterest were also collecting hashed email addresses.

The telehealth companies that responded to our detailed queries said their data-sharing practices adhered to their privacy policies. Those kinds of policies commonly include notice that some—but not all—health data shared with the site is subject to HIPAA. Many companies responded that they were careful to ensure that data shared via third-party tools was not considered protected health information.

But the structure of those companies’ businesses—and the inscrutable language in their privacy policies and terms of use—make it difficult for consumers to know what data would qualify as protected, and when.

“There is so much intransparency, and that makes it complex and maybe even deceptive for consumers,” said Sara Gerke, a professor of health law and policy at Penn State Dickinson Law.

Several telehealth companies claimed that the information collected from their websites was not personally identifiable because it was hashed. HIPAA allows health information to be shared when it has been de-identified. However, hashing does not anonymize data for the tech platforms that receive it and match it to user profiles. And every data packet sent by a tech company’s tracker includes the user’s IP address, which is one of several unique identifiers that explicitly qualify health data for protection under HIPAA.

Further complicating decisions for patients, at least 12 of the direct-to-consumer companies we examined promise on their websites that they are “HIPAA-compliant.” That could encourage users to think all the data they share is protected and lead them to divulge more, said Hartzog. Yet the regulations apply to the websites’ data use only in limited cases.

Monument, a site that offers alcohol treatment, starts its intake form by saying, “Any information you enter with Monument is 100% confidential, secure, and HIPAA compliant.” Yet in its responses to STAT and The Markup, it said that it does not consider information transmitted to third parties from that form—including answers to questions like “In the past year, have you continued to drink even though it was making you feel depressed or anxious or adding to another health problem? or after having had a memory blackout?”—to be protected health information under HIPAA.

“If they’re not covered by HIPAA and they have a HIPAA-compliant badge, that seems like a case the FTC could bring,” said Justin Brookman, the director of technology policy for Consumer Reports and former policy director with the FTC, which has previously charged companies for deceptive use of HIPAA-compliant badges. “There’s an implication there that you’re regulated in certain ways, that your data is protected, and so it does seem deceptive.”

Such data sharing could be particularly damaging to patients seeking care for substance use disorders, said Jacqueline Seitz, senior staff attorney for health privacy at the Legal Action Center—especially if it enters opaque data brokerages where it can be resold and repurposed indefinitely.

Several companies in this analysis are capitalizing on federal waivers activated during the pandemic that allow controlled substances like suboxone, which is used to treat opioid use disorder, to be prescribed virtually. Under federal law, qualifying addiction treatment providers—including those that prescribe suboxone—are held to patient privacy standards even stricter than HIPAA. For example, Workit’s physician group states it is forbidden from acknowledging “to anyone outside of the program that you are a patient or disclos[ing] any information identifying you as a substance use disorder patient” except in narrow situations.

Nonetheless, we found that Workit and other telehealth companies—in their role connecting patients to providers—share information that identifies a user as someone seeking addiction treatment. On Boulder Care’s website, a pixel sent Facebook our name and email when we joined a suboxone treatment program waitlist. And trackers on the website of Bicycle Health, another online suboxone provider, notified Google and Bing that our email address had been entered on an “enrollment confirmation” URL.

Boulder Care chief operating officer Rose Bromka said the company had started improving its “website hygiene” before being contacted for this article, and restricted the information sent by the Meta pixel after reviewing our findings.

However, Bromka added that Boulder still tracks some information about website visitors to guide its advertising.

“We are always looking to balance ensuring we are able to get the word out about options with holding to our value set,” she said.

Corporate Black Boxes

Meta, Google, TikTok, Bing, LinkedIn, Snap, and Pinterest say they have policies against using sensitive health data to help target advertisements.

“We clearly instruct advertisers not to share certain data with us and we continuously work with our partners to avoid inadvertent transmission of such data,” TikTok spokesperson Kate Amery wrote in an email, adding, “[W]e also have a policy against targeting users based on their individual health status.”

Meta and Google claim to have algorithmic filters that identify and block sensitive health information from entering their advertising systems. But the companies did not explain how those systems work or their effectiveness. By Facebook’s own admission to investigators from the New York Department of Financial Services in 2021, its system was “not yet operating with complete accuracy.”

To trace what happened to data collected by trackers, we created dummy accounts logged into Facebook, TikTok, and Twitter while we tested the telehealth websites. We then used the platforms’ “download your data” tools in an attempt to determine whether any health information the trackers collected was added to our profiles.

The information provided by those tools was so limited, however, that we couldn’t confirm how or whether the sensitive health information was used.

For example, a Meta Pixel on RexMD, which prescribes erectile dysfunction drugs, collected the name of the medication in our cart, our email, gender, and date of birth. Facebook’s transparency tool, however, only showed 10 “interactions” on RexMD’s website, with generic descriptions like “ADD_TO_CART.” It did not provide details about the specific data Facebook ingested during those interactions. A TikTok pixel collected some of that same information from RexMD, but TikTok’s report on our “usage data from third-party apps and websites” had just one line: “You have no data in this section.”

Our Twitter data showed that the company knew we had selected a product on RexMD’s website and the exact URL on which we selected that product.

On some websites, users’ data was also being collected by “custom events,” meaning that a website owner deliberately created a custom tracking label that could have a phrase such as “checkout” in it but wouldn’t necessarily show up in the tech platforms’ transparency tools.

Only four companies answered whether they had ever been notified by Facebook of potentially sensitive health information. Monument and Favor had data flagged but said they determined it wasn’t sensitive. Lemonaid received a notification in error related to a promotional code, and Boulder Care had received none.

Telehealth websites should be held accountable for the trackers they install, said Hartzog, the Boston University law professor. But “big platforms that are deploying these surveillance technologies also need to be held accountable, because they’re able to vacuum up every ounce of personal data on the internet in the absence of a rule that tells them not to.”

The companies we analyzed said their services fill an important need. “The makeup of the traditional health care system has in many cases prevented people from accessing treatment for conditions that should be easy to treat,” Scott Coriell, a spokesperson for Hims & Hers, wrote in an email. Companies that serve patients with mental health or substance use disorders emphasized that long wait times to see in-person providers, and the stigma associated with seeking care, made virtual services especially valuable.

Marketing supported by third-party tracking is part of making that care accessible, some argued. “Monument uses online advertising platforms to raise awareness of our evidence-based treatment for alcohol use disorder, and get people the support and relief they deserve,” wrote CEO Michael Russell. “We transmit the minimum amount of data required to allow us to track the effectiveness of our advertising campaigns.” Favor spokesperson Sarah Abboud argued that calling standard industry practices into question could threaten trust in those services.

But health privacy and policy experts see a disconnect between the industry’s stated emphasis on privacy and its data-sharing practices. “Telemedicine providers should have realized from the get-go that if their entire business model is to seamlessly move people from marketing to care and the care will be online, then there’s going to be more personal identifiable information submitted and thus more privacy risk and thus more privacy liability,” said Christopher Robertson, a health law and policy professor at Boston University.

One problem may be that marketing teams don’t fully understand privacy regulations, and legal teams don’t have a handle on how the marketing tools work.

Sara Juster, privacy officer for the weight-loss telehealth company Calibrate, wrote in an email that the company doesn’t “send any health information collected in our eligibility flow back to platforms.” But a Meta Pixel on its site sent data including height, weight, BMI, and other diagnoses, like diabetes, to Facebook. Juster then clarified the pixel was a duplicate that should have been removed in a tracking audit earlier this year.

However, as of Dec. 7, a Meta Pixel was still present on the site and sharing hashed identifiers and checkout events with Facebook. The pixel appeared to have been reconfigured, though, to send less information than it had during our original testing.

Without updated laws and regulations, experts said, patients are left to the whims of rapidly evolving telehealth companies and tech platforms, which may choose to change their privacy policies or alter their trackers at any time.

“It doesn’t make any sense that right now, we only have protections for sensitive health information generated in certain settings,” said McCoy, “but not what can be equally sensitive health information generated in your navigation of a website, or your filling out of a very detailed form about your history and your prescription use.”

Update Dec. 22, 2022

The table in this article has been updated to add a response from Brightside that came in after publication.