Major universities are using their students’ race, among other variables, to predict how likely they are to drop out of school. Documents obtained by The Markup through public records requests show that some schools are using education research company EAB’s Navigate advising software to incorporate students’ race as what the company calls a “high-impact predictor” of student success—a practice experts worry could be pushing Black and other minority students into “easier” classes and majors.

The documents, called “predictive model reports,” describe how each university’s risk algorithm is tailored to fit the needs of its population. At least four out of seven schools from which The Markup obtained such documents incorporate race as a predictor, and two of those describe race as a “high-impact predictor.” Two schools did not disclose the variables fed into their models.

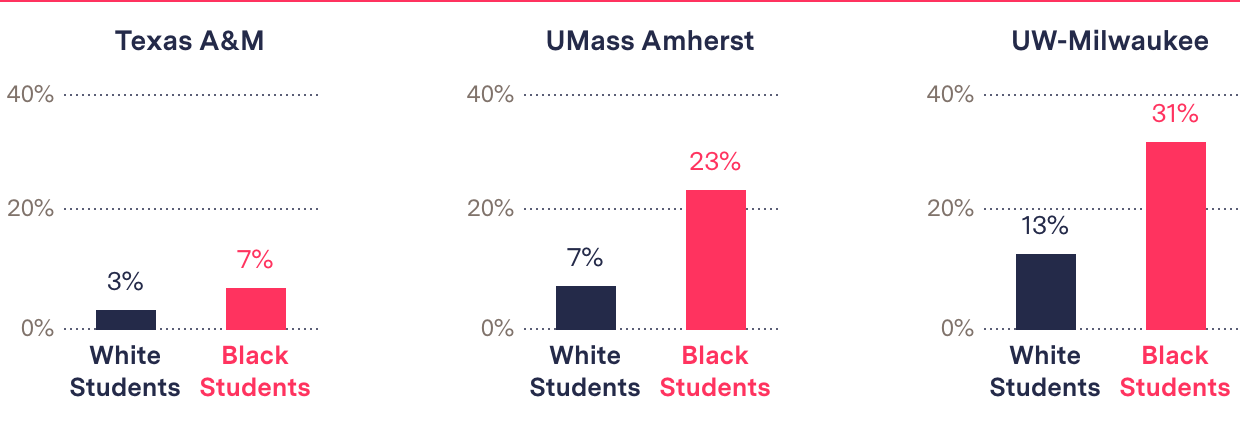

More than 500 universities across the country use Navigate’s “risk” algorithms to evaluate their students. In addition to documents on how the models work, The Markup obtained aggregate student risk data for the fall 2020 semester from four large public universities: the University of Massachusetts Amherst, the University of Wisconsin–Milwaukee, the University of Houston, and Texas A&M University. We found large disparities in how the software treats students of different races, and the disparity is particularly stark for Black students, who were deemed high risk at as much as quadruple the rate of their White peers.

At the University of Massachusetts Amherst, for example, Black women are 2.8 times as likely to be labeled high risk as White women, and Black men are 3.9 times as likely to be labeled high risk as White men. At the University of Wisconsin–Milwaukee, the algorithms label Black women high risk at 2.2 times the rate of White women, and Black men at 2.9 times the rate of White men. And at Texas A&M University, they label Black women high risk at 2.4 times the rate of White women, and Black men at 2.3 times the rate of White men.
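Each of those figures is a ratio of two rates: the share of one group labeled high risk divided by the share of a comparison group labeled high risk. A minimal sketch of that arithmetic in Python follows. The counts are invented purely for illustration and are not The Markup's data, and the function name is our own.

```python
def high_risk_rate_ratio(group_high_risk, group_total, ref_high_risk, ref_total):
    """Return how many times as likely a group is to be labeled high risk
    as a reference group, given raw counts for each."""
    if group_total <= 0 or ref_total <= 0:
        raise ValueError("group sizes must be positive")
    group_rate = group_high_risk / group_total
    ref_rate = ref_high_risk / ref_total
    if ref_rate == 0:
        raise ValueError("reference group has no high-risk students")
    return group_rate / ref_rate


# Hypothetical counts, for illustration only (not taken from any school's data):
# 140 of 500 students in one group vs. 1,000 of 10,000 in the reference group.
ratio = high_risk_rate_ratio(
    group_high_risk=140, group_total=500,
    ref_high_risk=1000, ref_total=10000,
)
print(f"{ratio:.1f} times as likely to be labeled high risk")
```

A ratio of 1.0 would mean the two groups are labeled high risk at the same rate.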

Latinx students were also assigned high risk scores at substantially higher rates than their White peers at the schools The Markup examined, although not to the same degree as Black students. The algorithms labeled Asian students high risk at similar or lower rates than White students.

[Chart: Black students are regularly labeled a higher risk for failure than White students. Percentage of student body labeled as “high risk” to not graduate within their selected major.]

Put another way, Black students made up less than 5 percent of UMass Amherst’s undergraduate student body, but they accounted for more than 14 percent of students deemed high risk for the fall 2020 semester.
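Those two percentages can be turned into a single overrepresentation figure by dividing one by the other. The sketch below uses only the two bounds quoted above (5 percent and 14 percent). Because the real shares are "less than" and "more than" those bounds, the result is a floor, not an exact value. The function name is ours, and note that this compares Black students with the student body as a whole, which is a different comparison from the Black-to-White rate ratios.

```python
def representation_ratio(share_of_high_risk, share_of_student_body):
    """Ratio of a group's share among high-risk students to its share of
    the whole student body. Values above 1.0 mean overrepresentation."""
    if share_of_student_body <= 0:
        raise ValueError("share of student body must be positive")
    return share_of_high_risk / share_of_student_body


# Bounds from the article: more than 14 percent of high-risk students,
# less than 5 percent of undergraduates. The true ratio is therefore
# at least this large.
lower_bound = representation_ratio(share_of_high_risk=14.0,
                                   share_of_student_body=5.0)
print(f"overrepresented by a factor of more than {lower_bound:.1f}")
```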

“This opens the door to even more educational steering,” said Ruha Benjamin, a professor of African American studies at Princeton and author of “Race After Technology,” after reviewing EAB’s documents. “College advisors tell Black, Latinx, and indigenous students not to aim for certain majors. But now these gatekeepers are armed with ‘complex’ math.”

The scores, which are one of the first things a professor or administrator may see when pulling up a list of students, can leave advisers with an immediate and potentially life-changing impression of students and their prospects within a given major.

“You can easily find situations where there are two students who have similar low GPAs and different risk scores, and there’s no obvious explanation for why that’s the case,” said Maryclare Griffin, a statistics professor at UMass Amherst. “I’ve looked at several examples of students in STEM that have a moderate risk score; they are underrepresented in their major, and the Major Explorer [a function within the software] is saying you should push them to another major where their risk score is lower.”

Navigate’s racially influenced risk scores “reflect the underlying equity disparities that are already present on these campuses and have been for a long time,” Ed Venit, who manages student success research for EAB, wrote in an email. “What we are trying to do with our analytics is highlight these disparities and prod schools to take action to break the pattern.” In an interview he added that EAB provides its clients with as much as 200 hours of training and consultation per year, some of it devoted to avoiding bias.

Students of color at the EAB client schools that The Markup examined say they were never told they were being judged by algorithms and that they don’t see the company’s altruistic depiction of the scores playing out.

“I don’t think UMass gives enough resources for students of color regardless, and I absolutely don’t think this score would help in that respect,” said Fabiellie Mendoza, a senior at UMass Amherst, which has used EAB’s software since 2013. “You should have a blank slate. If you’re coming in and you already have a low score because of your background, your demographic, or where you’re from, it’s just very disheartening.”

Aileen Flores, a junior at Texas A&M University, was also unnerved to learn she and her peers were being scored by algorithms. “We all work just as hard as each other,” she said. “We all deserve to be respected and seen as one and not judged off of our risk score, or skin tone, or background.”

The Sales Pitch

The rise of predictive analytics has coincided with significant cuts in government funding for public colleges and universities. In 2018, 41 states contributed less money to higher education—on average 13 percent less per student—than they did in 2008.

EAB has aggressively marketed its Navigate software as a potential solution. Boosting retention is “not just the right thing to do for students but (is) a financial imperative to preserve these investments,” the company wrote in a presentation prepared for Kansas State University.

The proposed answer: Identify the high-risk students and target them with “high-touch support.”

EAB representatives told The Markup that its tools should be used to identify big-picture solutions and create resources for groups of similarly situated students. But those kinds of changes can be expensive, and actually using the software to make institutional changes, rather than individual advising recommendations, seems to be the exception, not the rule, at the universities The Markup examined.

In its marketing literature, EAB’s proof of concept is often Georgia State University. It was one of the earliest schools to partner with the company, beginning in 2012, and its success improving retention and graduation rates over the subsequent years is impressive. From the 2010–11 school year to the 2019–20 school year, Georgia State increased the number of degrees it awarded by 83 percent, and the groups that saw the largest improvement were Pell Grant recipients and Black and Latinx students. The school has also seen a 32 percent drop in students changing majors after their first year, according to Tim Renick, executive director of Georgia State’s National Institute for Student Success.

But close examination reveals important differences between Georgia State’s predictive models and retention efforts and those at other EAB schools. Perhaps most significant: Georgia State does not include race in its models, while almost all the schools from which The Markup obtained model documentation do. And many of them incorporate race as a “high-impact predictor.”

High-impact predictors “are responsible for more than 5 percent of the variance in scores across all of the students” who have earned similar amounts of credits, according to the report EAB prepared for the University of Wisconsin–Milwaukee. “This may mean that the variable has a moderate impact on the scores of many students, or a high impact on the scores of just a few students.”
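The report's last point, that a moderate effect on many students and a large effect on a few can look the same, follows from how variance works. The Python sketch below illustrates it under loud assumptions: it treats a variable's effect as a fixed number of points added to some students' scores, and measures its "share" as the variance of that adjustment divided by a made-up total score variance. EAB's actual calculation is not described in the documents quoted here, and every number and name in the code is hypothetical.

```python
from statistics import pvariance


def adjustment_variance(shift, affected, total):
    """Population variance of a score adjustment of `shift` points applied
    to `affected` students out of `total` (everyone else gets 0)."""
    adjustments = [float(shift)] * affected + [0.0] * (total - affected)
    return pvariance(adjustments)


# Hypothetical scenario A: a moderate 3-point shift for half of 1,000 students.
moderate_many = adjustment_variance(shift=3, affected=500, total=1000)

# Hypothetical scenario B: a larger 5-point shift for one-tenth of them.
large_few = adjustment_variance(shift=5, affected=100, total=1000)

# Assumed total variance of all risk scores (invented for illustration).
TOTAL_SCORE_VARIANCE = 40.0
THRESHOLD = 0.05  # the "more than 5 percent" cutoff quoted in the report

for label, var in (("moderate/many", moderate_many), ("large/few", large_few)):
    share = var / TOTAL_SCORE_VARIANCE
    print(f"{label}: variance {var:.2f}, share {share:.1%}, "
          f"high impact: {share > THRESHOLD}")
```

Under these assumptions both scenarios produce the same variance, so a threshold based on variance alone cannot tell them apart.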

Venit, from EAB, said it’s up to each school to decide which variables to use.

Predictive algorithms that are explicitly influenced by a student’s race are particularly concerning to experts.

“Using race for any kind of system, even if it’s in a very narrow context of trying to end racial disparities in higher education … you can go into that with the best of intentions and then it takes very, very few steps to get you in place where you’re doing further harm,” said Hannah Quay-de la Vallee, a senior technologist who studies algorithms in education at the Center for Democracy and Technology.

In Georgia State’s case, Renick said, race, ethnicity, and income level are not factors the school considers valuable for incorporation into a risk score.

“If somebody is at risk because they’re … from a racial background, that’s of no use to the academic adviser” because the adviser can’t change it, Renick said.

Something that is potentially fixable, like a student’s financial struggles, will come up during conversations with advisers, he said, which is where another key difference between Georgia State and other EAB schools comes into play. The school spent $318,000 on its EAB subscription for 2020, but it has also invested heavily elsewhere in order to gain its reputation as a national role model for student retention, spending millions of dollars each year on additional resources like tutors and micro-loans, and about $3 million since 2012 hiring more student advisers, Renick said.

Georgia State now has a student-to-adviser ratio of about 350 to 1. Compare that to the University of Wisconsin–Milwaukee, a similarly sized public institution that also has large minority and low-income student populations and accepts nearly every student who applies. UW-Milwaukee, which does use race as a high-impact predictor, has used Navigate since 2014 and has expressly said it aims to replicate Georgia State’s successes. Its student-to-adviser ratio is as high as 700 to 1, according to Jeremy Page, the assistant dean for student services in the school of education, who oversees the partnership with EAB.

Intention vs. Reality

The Markup reviewed documents describing the predictive models used at five EAB client schools. Four of them—UW-Milwaukee, Texas A&M, Texas Tech University, and South Dakota State University—included race as a risk factor. Only Kansas State University did not.

Texas Tech and South Dakota State did not respond by press time to questions about why they chose to incorporate race as a variable.

UMass Amherst and the University of Houston, two of the schools from which The Markup obtained aggregate data showing racial disparities in students’ risk scores, either rejected public records requests for their predictive model summaries or heavily redacted their response.

Administrators at some EAB client schools said they were not aware of the disproportionate rates at which EAB’s algorithms label students of color high risk or that race was even a factor in how the scores are calculated.

“I certainly haven’t had a lot of information from behind the proprietary algorithms,” Carolyn Bassett, associate provost for student success at UMass Amherst, told The Markup.

“What I learned from EAB, over and over, about the process was that the algorithm to determine risk was proprietary and protected,” Tim Scott, associate provost for academic affairs and student success at Texas A&M, told The Markup. Advisers at the university do view the risk scores, he said, but “quite frankly, we have wondered and thought about whether we should turn it off completely.” Texas A&M’s contract with EAB runs through 2022.

Page said he was aware that UW-Milwaukee’s model incorporates race but not how heavily it is weighted. The Navigate dashboard, which also includes information like a student’s GPA, is designed to give overworked advisers a 30-second snapshot of a student’s progress, he said, but “there are other times when the model score might feel off, and that’s just the nature of working with predictive models.”

EAB’s algorithms also use other information to predict student success, such as SAT or ACT scores, high school percentile, credits attempted versus completed, and “estimated skills.” Notably, among the schools The Markup reviewed, only Kansas State University’s model, which doesn’t include race, considers any factors related to a student’s financial stability, despite the cost of college being one of the primary reasons students drop out.

The company’s models are also trained on historical student data, anywhere from two to 10 or more years of student outcomes from each individual client school, another aspect of the software that experts find problematic.

“When you use old data and base decisions you make on that now, you’re necessarily encoding lots of racist and discriminatory practices that go uninvestigated,” said Chris Gilliard, a Harvard Shorenstein Center research fellow who studies digital redlining in education.

Once the software produces a risk score, it’s generally up to the schools themselves to decide what to do with it, but professors who serve as student advisers say they’re given little guidance on how to use the risk scores.

“At no point in required training did they really explain what this [risk score] really is,” Griffin, from UMass Amherst, said.

Others worry that seeing a student labeled “high risk” may improperly influence a teacher in guiding his or her students.

“Are we behaving in a certain way right off the bat because we’re looking at the risk factor?” Carol Barr, senior vice provost for academic affairs for UMass Amherst, said. “We’ll be talking about that.”

In particular, experts worry, professors may guide their students toward less “risky” majors, in which they’re considered more likely to stay enrolled and graduate.

One aspect of the Navigate software’s design in particular seems to encourage this: the Major Explorer function, which shows a student’s predicted risk score in a variety of majors. In a document prepared for Rutgers University, EAB says that advisers should not tell all high risk students to switch to a major in which they would immediately become low risk.

But the document says advisers should “explain to students their risk score to create a sense of urgency for resistant students” to “explore major options” and consider which courses to take.

“While it may be intended to be a supportive move, we know that labels can be stigmatizing,” said Roxana Marachi, a professor at San José State University who studies big data and predictive analytics in education. “The challenging part is that it’s marketed to be an indicator to provide resources and supports, but it can also be weaponized and used against students.”

LaToya White, a senior director at EAB, said that the company has been trying to move away from using phrases like “high risk” in training sessions because of how they can be misinterpreted. “There are human biases that we all have and we all bring to any situation,” she said. “Something that we have been introducing is that you need to be talking about these biases.”

There is scant research into the efficacy of these tools, but some research suggests that the more one interacts with students, the less valuable predictive risk scoring appears. A 2018 study by the Community College Research Center at Columbia University found that school administrators viewed tools like EAB’s Navigate positively, while “the more closely participants were involved with using predictive analytics on the ground with students, and the more advanced the associated institution was with its implementation, the more critical the participants were.” The primary concerns raised by advisers and faculty: They didn’t believe in the validity of the risk scores, they thought the scores depersonalized their interactions with students, and they didn’t understand how the scores were calculated.

Setting the Precedent

None of the universities The Markup contacted for this article are currently using EAB’s risk scoring algorithms in their admission process, although they do assign students risk scores before they’ve ever taken a class. But the financial pressure on public universities from years of state austerity and the declining rate at which high schoolers are choosing to go to college has some educators worried that predictive analytics will soon become prescriptive analytics—the deciding factor in who does or doesn’t get accepted.

There are already indications that some schools are looking to these kinds of metrics to streamline their student bodies. In 2015, the president of Mount St. Mary’s University, Simon Newman, proposed using student data to cull a portion of the incoming class deemed unlikely to succeed. Speaking to a group of faculty and administrators, he reportedly said, “You think of the students as cuddly bunnies, but you can’t. You just have to drown the bunnies … put a Glock to their heads.” Newman resigned shortly after the student newspaper published his comments.