When George Floyd was killed in May after a police officer pressed a knee into the man’s neck, millions of Americans protested, demanding civic leaders dismantle long-standing police practices and fundamentally reimagine public safety.

A chorus of CEOs and companies soon joined in. Police technology providers big and small, from predictive policing company PredPol and gunshot alert firm Shotspotter to Amazon’s Jeff Bezos, voiced support for racial justice.

PredPol’s marketing manager Emmy Rey called the tragedy in Minneapolis a “breakdown of the social contract between the protector and the protected.” And she offered PredPol’s crime-predicting technology as a solution.

“PredPol was founded on the audacious premise that we could help make the practice of policing better in America. By ‘better’ we mean providing less bias, more transparency, and more accountability,” Rey wrote in a company blog post in June.

PredPol is one of a slate of predictive policing technologies. Another, called Hunchlab, was acquired by Shotspotter, and IBM, Microsoft, and Palantir have developed their own tools, as have some police departments. They use a variety of techniques to try to prevent crime.

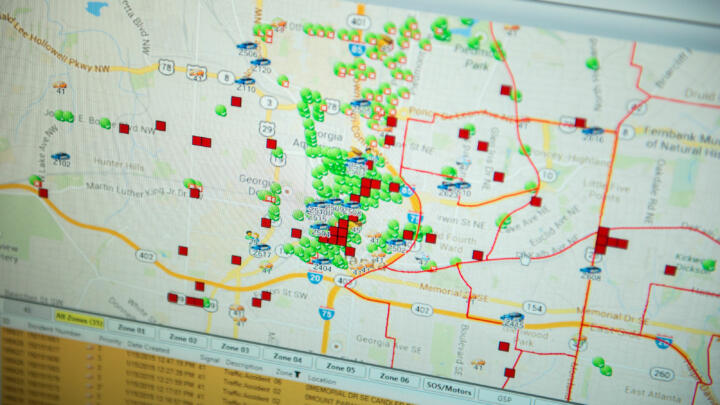

Some enable officers to create a list of people they determine are at high risk for committing or being victims of crime. Others, like PredPol, take local crime report data and feed it into an algorithm to generate predictions of future crime hotspots so that agencies patrol those areas and, ideally, prevent crime before it starts. The company has been tapped by police departments across the country, including in Los Angeles, Birmingham, Ala., and Hagerstown, Md.

“No crime means no victim, no investigation and arrest, and nobody to put through the criminal justice system,” PredPol’s Rey wrote. “Everyone in society benefits.”

Early versions of data-driven policing were used in the 1990s, but it has grown more popular and the technology more sophisticated over the last decade. By 2017, about a third of large agencies were using predictive analytics software, according to a report funded by the U.S. Department of Justice. Even more planned to adopt it.

The technology was sold to law enforcement agencies as objective, but a growing body of research suggests that it may only further entrench biased policing.

This summer, more than 1,400 mathematicians signed a letter boycotting predictive policing efforts and suggesting others do the same: “Given the structural racism and brutality in US policing, we do not believe that mathematicians should be collaborating with police departments in this manner. It is simply too easy to create a ‘scientific’ veneer for racism.”

Some police departments have stopped using these methods after efficacy concerns were raised.

“Dirty Data” Makes for Biased Predictions

The technology is “perpetuating and, in some cases, concealing some of the biased practices in the police department,” said Rashida Richardson, director of policy research at AI Now, a New York University institute exploring the effect of artificial intelligence on society, which has looked at predictive policing programs around the country.

“Dirty data,” she said, makes for bad predictions.

Police data is open to error by omission, she said. Witnesses who distrust the police may be reluctant to report shots fired, and rape or domestic violence victims may never report their abusers.

Because it is based on crime reports, the data fed into the software may be less an objective picture of crime than it is a mirror reflecting a given police department’s priorities. Law enforcement may crack down on minor property crime while hardly scratching the surface of white-collar criminal enterprises, for instance. Officers may intensify drug arrests around public housing while ignoring drug use on college campuses.

Recently, Richardson and her colleagues Jason Schultz and Kate Crawford examined law enforcement agencies that use a variety of predictive programs.

They looked at police departments, including in Chicago, New Orleans, and Maricopa County, Ariz., that have had problems with controversial policing practices, such as stop and frisk, or evidence of civil rights violations, including allegations of racial profiling.

They found that since “these systems are built on data produced during documented periods of flawed, racially biased, and sometimes unlawful practices and policies,” it raised “the risk of creating inaccurate, skewed, or systemically biased data.”

A seminal study from a few years earlier shows how this could play out with a specific type of crime—in this case, drug crime.

Using a simulation, researchers Kristian Lum, now at the University of Pennsylvania, and William Isaac, now at DeepMind, an artificial intelligence company, looked at whether PredPol’s predictions would disproportionately steer officers into minority neighborhoods in Oakland, Calif.

Lum and Isaac had estimated drug use in the city based on the results of a national survey and found it would likely be fairly evenly distributed across the diverse city.

Yet when they fed arrest data into PredPol’s publicly available algorithm, they found the hotspots it would have predicted were not at all evenly distributed.

The researchers found the algorithm would have sent police to Black neighborhoods at roughly twice the rate of White neighborhoods. The tool spat out risk areas where minorities lived—based not on drug use, but rather on places with prior police-reported drug crimes.

“Over-policing imposes real costs on these communities,” Lum and Isaac concluded in their 2016 report. “Increased police scrutiny and surveillance have been linked to worsening mental and physical health; and, in the extreme, additional police contact will create additional opportunities for police violence in over-policed areas.”
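
The dynamic the researchers describe, in which predictions built on past police records send officers back to the same places, can be illustrated with a toy simulation. The sketch below is hypothetical: it is not PredPol's algorithm and not Lum and Isaac's code, and the neighborhood names, rates, and starting counts are invented for illustration. It assumes two neighborhoods with identical underlying drug use, a "predictor" that simply flags whichever area has the most recorded incidents, and police who record incidents only where they patrol.

```python
def simulate_patrols(true_rate, recorded, days, patrols_per_day=10):
    """Toy feedback loop: patrols follow past records, and records grow where patrols go.

    All inputs are hypothetical illustration values, not real crime data.
    """
    recorded = dict(recorded)  # copy so the caller's starting counts are untouched
    patrol_totals = {name: 0 for name in recorded}
    for _ in range(days):
        # "Prediction": the area with the most recorded incidents is the hotspot.
        hotspot = max(recorded, key=recorded.get)
        patrol_totals[hotspot] += patrols_per_day
        # Incidents are only recorded where officers are present to observe them.
        recorded[hotspot] += patrols_per_day * true_rate[hotspot]
    return recorded, patrol_totals


# Identical underlying drug use in both areas (assumed for illustration).
true_rate = {"A": 0.1, "B": 0.1}
# Historical records are skewed by earlier enforcement choices (assumed).
starting_records = {"A": 60, "B": 30}

final_records, patrol_totals = simulate_patrols(true_rate, starting_records, days=100)
print(final_records)
print(patrol_totals)
```

In this simplified setup, every patrol is sent to the neighborhood that started with more records, so its recorded total keeps climbing while the other area's never changes, even though the underlying rates are the same. Real systems are far more complex, but the sketch shows why the choice of input data matters.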

Companies Say Claims of Bias Need Careful Attention

PredPol did not respond to requests for comment. But the company’s founders, UCLA anthropology professor Jeffrey Brantingham and George Mohler, a computer science professor now at Indiana University–Purdue University Indianapolis, have done their own study, which they say indicates their methods do not result in higher minority arrest rates.

In 2018, they published a paper that began by acknowledging their critics, including Lum and Isaac: “Though all of these studies deal with hypothetical scenarios or thought experiments, they succeed in demonstrating that careful attention needs to be paid to whether predictive policing produces biased arrests.”

Brantingham, Mohler, and their co-author, Matthew Valasik, conducted a real-life experiment with the Los Angeles Police Department.

They compared the results between two groups: officers sent to PredPol-predicted crime hotspots and officers sent to hotspots picked by crime analysts, which served as a control group. They varied the groups each officer was assigned to day to day.

Brantingham, Mohler, and Valasik found the rate of minority arrests was about the same whether the software or analysts made the predictions. What changed was the number of arrests in the PredPol hotspots. That number was higher.

The increase in arrests, they wrote, is “perhaps understandable given that algorithmic crime predictions are more accurate.”

They acknowledged, however, that their findings “do not provide any guidance on whether arrests are themselves systemically biased” and called for “careful policy development” to prevent bias in predictive policing.

Neither Brantingham nor Mohler responded to requests for comment.

Robert Cheetham, the CEO of Azavea, which developed Hunchlab, said it’s important to recognize the bias in the data, and that their software sought to geographically space out predictions so police patrols wouldn’t be so concentrated on one particular neighborhood. He said Hunchlab also included information beyond crime reports, such as lighting, major events, school schedules, and locations of bars—which all can relate to crime but are not based on a single source of potentially police-biased data.

The product also avoided forecasting certain crimes. Drug crimes and prostitution were out, as they are more heavily influenced by whom police choose to target. And they didn’t think the tools could be used for rape, which is often not reported and which is unlikely to be deterred by police patrol.

It’s unclear if this approach has led to more equitable patrol.

Some Cities Have Scrapped Programs

Beyond complaints about racism, some police watchdogs have another critique: The software may not be helpful.

Earlier this year, Chicago’s inspector general published an investigation into the in-house predictive policing models, the Strategic Subject List and the Crime and Victimization Risk Model, which are both designed to predict an individual’s risk of being a shooting victim or offender.

An earlier investigation of the Strategic Subject List by the Chicago Sun-Times had found that 85 percent of people with the highest score were African American men and some had no violent criminal record whatsoever.

The Chicago Police Department told the paper that the scores were based on a variety of factors, including the number of times the person had been shot or assaulted, arrests for violence or drugs, and gang membership, and that overall the risk scores helped police prioritize whom to issue warnings or offer aid to.

But the inspector general found the risk scores were unreliable and could potentially lead to harsher charging decisions by prosecutors.

Chicago ended up scrapping the program.

The LAPD also ended one of its predictive policing efforts this year after its own internal review cast doubt on its efficacy.

The LAPD was the earliest adopter of PredPol, partnering with researchers a decade ago to help develop the technology. But the police commission’s inspector general, Mark Smith, found the data related to the program so unreliable it was difficult to draw conclusions.

“Unfortunately, the data was kind of messed up,” Smith told The Markup. When they went to measure if PredPol worked, he said, “we really couldn’t tell.”

A year after the report was released, the department announced it would be dropping PredPol. The announcement came mere weeks before the nation would erupt in protest after the killing of Floyd.

“The cost projections of hundreds of thousands of dollars to spend on that right now versus finding that money and directing that money to other more central activities is what I have to do,” police chief Michael Moore said.