Last week, Google and Apple announced that they were working together to develop privacy-protecting technology that could enable COVID-19 contact-tracing apps.

The idea is elegant in its simplicity: Google and Apple phones would quietly build, in the background, a database of other phones that have been in Bluetooth range (about 100 to 200 feet) over a rolling two-week period. When users find out that they are infected, they can send an alert to all the phones that were recently in their proximity.

The broadcast would not identify the infected person; it would just alert the recipient that someone in his or her recent orbit had been infected. And, importantly, the companies say they are not collecting data on people’s identities and infection status. Nearly all of the data and communication would be stored on users’ phones.

But building a data set of people who have been in the same room together—data that would likely be extremely valuable to both marketers and law enforcement—is not without risk of exploitation, even stored on people’s phones, security and privacy experts said in interviews.

“Either you keep this anonymous and potentially somewhat exploitable, or you just go full Black Mirror and tie it to people and identities,” said Samy Kamkar, a security researcher who is cofounder and chief security officer of access control company Openpath, in an interview with The Markup.

So far, the companies have only submitted framework proposals for a service they say will roll out in May, and many details remain to be worked out. The companies appear not to be building their own apps; they say they are building the underlying technology that can be used in apps from public health authorities. Based on the information the companies have shared publicly so far, here is a look at the pros and cons.

The Advantages

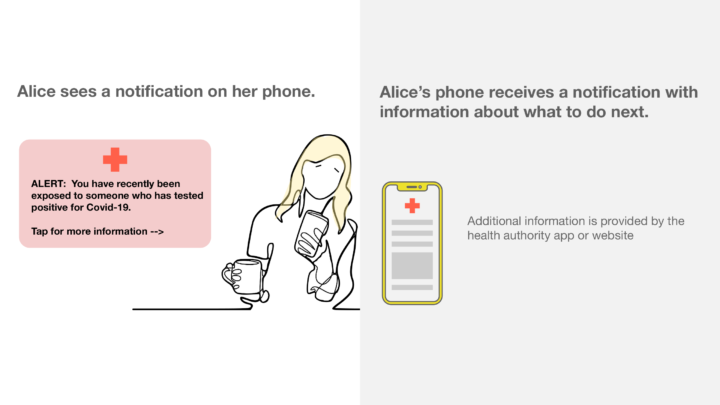

It’s opt-in. Users can choose whether or not they want to alert the people they’ve been in close contact with that they’ve tested positive for the virus.

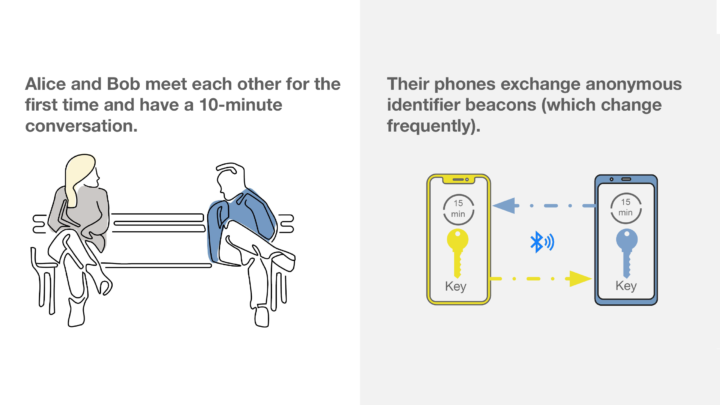

It’s anonymous. Using some clever cryptography, the information that users’ phones transmit to other phones is not tied to their identity. Rather, the phone transmits what the companies are calling a “rolling proximity identifier” that changes every 15 minutes. Each phone stores a local database of all the rolling identifiers it has seen.

When users choose to identify themselves as infected, their phones transmit a “diagnosis key” that allows other phones to identify the associated rolling identifiers in their local database.

It’s mostly decentralized. Most of the activity—sending and storing identifiers—takes place on a user’s phone. Only one central server receives the diagnosis key and the associated day that it was uploaded. That server does not contain any user identity or precise location information.

No personal information is required for use. Google said in public documents that no personally identifiable information will be collected. (This new service is not to be confused with a Google website that was launched to help California residents find places to get tested, which requires users to create a Google account.)

The system will create and verify a user’s identity with a secret key called the “tracing key,” which is created and stored on a user’s device and never revealed. The tracing key is used to generate the other keys that are broadcast—the rolling identifiers and diagnosis keys.
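The key hierarchy described above can be sketched in a few lines of Python. This is a simplified illustration, not the companies' specification: the function names, the label strings, the 16-byte lengths, and the use of plain HMAC-SHA256 as the derivation function are all assumptions made for the example.

```python
import hashlib
import hmac
import os

ROTATION_SECONDS = 15 * 60                             # identifiers change every 15 minutes
INTERVALS_PER_DAY = 24 * 60 * 60 // ROTATION_SECONDS   # 96 intervals per day


def new_tracing_key() -> bytes:
    """Random secret generated once and kept on the device."""
    return os.urandom(32)


def daily_key(tracing_key: bytes, day: int) -> bytes:
    """Derive a per-day key; in this sketch it doubles as the diagnosis key."""
    msg = b"daily-key" + day.to_bytes(4, "little")      # label is hypothetical
    return hmac.new(tracing_key, msg, hashlib.sha256).digest()[:16]


def rolling_id(day_key: bytes, interval: int) -> bytes:
    """Derive the identifier broadcast during one 15-minute interval."""
    msg = b"rolling-id" + interval.to_bytes(1, "little")  # label is hypothetical
    return hmac.new(day_key, msg, hashlib.sha256).digest()[:16]


def find_matches(diagnosis_key: bytes, seen_ids: set) -> list:
    """Re-derive every identifier for the day and check the local database."""
    return [i for i in range(INTERVALS_PER_DAY)
            if rolling_id(diagnosis_key, i) in seen_ids]


# Alice's phone broadcasts; Bob's phone records what it hears.
alice_day_key = daily_key(new_tracing_key(), day=18365)
bob_seen = {rolling_id(alice_day_key, 40), rolling_id(alice_day_key, 41),
            os.urandom(16)}                             # plus an unrelated phone

# Alice later tests positive and uploads her daily key as a diagnosis key.
print(find_matches(alice_day_key, bob_seen))            # [40, 41]
```

The point of the one-way derivation is that identifiers from different intervals look unrelated to an observer, yet anyone who later receives the day's key can recompute all of them and check for overlaps locally.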

The Disadvantages

It’s vulnerable to trolls. Because there is no verification of user identity or whether a user is actually infected, people could use this service to fake being contagious.

Renowned University of Cambridge security researcher Ross Anderson captures the threat perfectly in his critique of the proposal:

“The performance art people will tie a phone to a dog and let it run around the park; the Russians will use the app to run service-denial attacks and spread panic; and little Johnny will self-report symptoms to get the whole school sent home.”

It’s vulnerable to spoofing. Bluetooth signals are broadcast over open channels and therefore can be captured by anyone who is listening for them. That means that someone could grab a user’s rolling identifier and blast it out in some other location, making it seem that the user was in two different locations simultaneously.

The lack of a central location database means it would be difficult to guard against such spoofing, said security researcher Kamkar. “You could make a botnet out of this,” he said. But he added that he thinks spoofing may be an advantage from a privacy point of view because it could make the data difficult for law enforcement to use as legal proof of proximity. (Cops could get the data if they take your phone while investigating a crime.)
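To see why a replayed identifier is hard to detect, consider this toy model of what a receiving phone records. The `Sighting` and `Phone` classes and the identifier value are hypothetical and exist only for illustration; the sketch assumes, as the article describes, that a stored record carries no location and no proof of who transmitted it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sighting:
    rolling_id: bytes   # identifier heard over Bluetooth
    interval: int       # 15-minute interval in which it was heard
    # No location field and no sender authentication in this model.


class Phone:
    def __init__(self, name: str):
        self.name = name
        self.seen = []

    def hear(self, rolling_id: bytes, interval: int) -> None:
        # A receiver stores whatever it hears; it cannot check the sender.
        self.seen.append(Sighting(rolling_id, interval))


victim_id = bytes.fromhex("00112233445566778899aabbccddeeff")  # made-up value

cafe_phone = Phone("phone in a cafe")         # genuinely near the victim
stadium_phone = Phone("phone in a stadium")   # nowhere near the victim

cafe_phone.hear(victim_id, interval=40)       # legitimate broadcast
# An eavesdropper in the cafe relays the identifier to a transmitter elsewhere.
stadium_phone.hear(victim_id, interval=40)    # replayed broadcast

# Both local databases now hold identical records for the same interval.
print(cafe_phone.seen == stadium_phone.seen)  # True
```

If the victim later reports an infection, both phones would find a match, and nothing in either record distinguishes the real encounter from the relayed one.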

False alerts could weaken trust in the system. If spoofing and trolling become prevalent, users will mistrust and ignore the alerts that they receive, making the system useless.

It’s also not clear how the system will calculate the amount of exposure to an infected person who triggers an alert. If the algorithm is trigger-happy, it could send alerts for every person you passed for a few seconds on the sidewalk. If it’s too cautious, it could fail to send an alert for the lady who sneezed when she was standing behind you in line at the grocery store for just a minute.
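The trade-off can be made concrete with a toy exposure rule. The companies have not published how exposure would be scored, so everything here is an assumption: the rule simply adds up contact time and compares it with a threshold, ignores signal strength and distance, and uses made-up thresholds and encounters.

```python
def exposure_minutes(sightings: list) -> float:
    """Total contact time in minutes from (start_second, end_second) pairs."""
    return sum(end - start for start, end in sightings) / 60


def should_alert(sightings: list, threshold_minutes: float) -> bool:
    """Alert when cumulative contact time reaches the threshold."""
    return exposure_minutes(sightings) >= threshold_minutes


encounters = {
    "sidewalk pass": [(0, 5)],                      # 5 seconds
    "grocery line": [(0, 60)],                      # 1 minute
    "shared office": [(0, 1800), (3600, 5400)],     # two 30-minute meetings
}

for threshold in (0.05, 15):                        # hypothetical thresholds
    alerts = [name for name, s in encounters.items()
              if should_alert(s, threshold)]
    print(f"threshold {threshold} min -> alerts for: {alerts}")
```

With the 0.05-minute threshold, all three encounters trigger an alert, including the sidewalk pass. With the 15-minute threshold, only the shared office does, so the one-minute grocery line encounter is missed.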

Other apps may try to grab the data. Many users don’t realize that a significant number of apps on their phone are sending their location to third parties, who then aggregate it into large databases that they sell to hedge funds, advertisers, and others. That’s how we got all those maps of people traveling despite the warnings to stay home.

Similarly, other apps on a user’s phone could seek to receive and store the identifiers being broadcast by nearby phones. After all, many apps are already using Bluetooth signals for what they call “proximity marketing.”

Moxie Marlinspike, creator of the Signal encrypted messaging app, said that overall he thinks the contact-tracing framework is “nice” but that when users choose to reveal their presence history, they may not realize that the advertising industry is also likely listening. “It’s just kind of unfortunate that there’s a whole industry (ad tech) that is already ‘in proximity and watching’ for different reasons,” he said in a text exchange.

Some location information may be exposed to the central server. In its public documents, Google said it doesn’t collect any user location data.

But in practice it seems that some location information may be used, security experts say. After all, users in Brazil won’t need or want to download information about users who have tested positive in Norway. And so to save users from having to download a massive database of the entire world’s keys, some location data will likely have to be attached to the central database.

In public documents, Google describes how a user will periodically download “the broadcast keys of everyone who has tested positive for COVID-19 in her region.” Google did not respond to a request for comment.

Anonymous data is always vulnerable to being re-identified. Decades of research show that large, supposedly anonymous data sets can often be deanonymized by combining information from other sources to reveal sensitive information about individual people.

Cryptographer Serge Vaudenay, of the École Polytechnique Fédérale de Lausanne, describes ways that people could be re-identified through contact-tracing apps in his critique of the Pan-European Privacy-Preserving Proximity Tracing proposal:

“It is actually surprising that decentralization creates more privacy threats than it solves. Indeed, sick people who are anonymously reported can be deanonymized, private encounter[s] can be uncovered, and people may be coerced to reveal their private data.”

It’s not clear how apps using the service will be vetted. In its public documents, Google said the service will “only be used for contact tracing by public health authorities for COVID-19 pandemic management.”

But it’s not clear how the system can retain its anonymity if it’s also tied to public health authorities who are perhaps seeking to collect data for other purposes. It’s also not clear how closely Google and Apple will be vetting the apps that health departments build using the technology. Historically, Google has been much more permissive about apps it allows on the Android platform than Apple has been about letting apps onto the iPhone.

It relies on testing, which is currently inadequate. And the elephant in the room is that developing an effective contact-tracing system may require a level of COVID-19 testing that is not yet available in the United States, where tests are being heavily rationed. (The Markup has filed public records requests in all 50 states, New York City, and Washington, D.C., seeking their algorithms for determining who can get tested.)