Hello, friends,

Honestly, it was kind of hard to imagine that Facebook’s image could get more tarnished. After all, the company’s been mired in negative press for the past five years, ever since the Cambridge Analytica scandal and the Russian disinformation campaign fueled Trump’s election in 2016.

But, amazingly, public relations has gotten even worse for Facebook this month. The precipitating event was the emergence of a Snowden-style trove of documents, “The Facebook Files,” that appear to have been leaked to Wall Street Journal reporter Jeff Horwitz.

In a five-part series, The Wall Street Journal used those documents to reveal not only that Facebook was fueling teenage self-harm and enabling human trafficking, but also that Facebook itself knew its platform contributed to those problems and chose to ignore them.

The series revealed that:

- Facebook’s secret program, XCheck, exempts millions of VIPs from the company’s normal content moderation rules against hate speech, harassment, and incitements to violence. A 2019 internal review of the program declared that its double standard was a “breach of trust.”

- Facebook’s own research found that Instagram makes one-third of teenage girls feel worse about their bodies.

- Facebook tweaked its algorithms to “increase meaningful social interactions” but found that the shift actually boosted “misinformation, toxicity, and violent content.”

- Facebook has been lethargic about removing dangerous content in developing countries, including human trafficking and incitements to ethnic violence, even when the content was flagged internally.

- Facebook was slow to address a content moderation loophole that allowed anti-vaccine advocates to flood Facebook with anti-vax comments, despite internal cries for a fix.

In many of these arenas, it had already been documented that Facebook was enabling harm. What’s new is proof that Facebook has long understood its ills but has repeatedly failed to address them adequately. As the Journal put it, “Facebook knows, in acute detail, that its platforms are riddled with flaws that cause harm, often in ways only the company fully understands.”

Facebook responded that the Journal was “cherry-picking” anecdotes that mischaracterized its actions. “The fact that not every idea that a researcher raises is acted upon doesn’t mean Facebook teams are not continually considering a range of different improvements,” Nick Clegg, Facebook’s vice president of global affairs, wrote in a blog post.

At the same time, new evidence of harm enabled by Facebook continued to accumulate in other publications, including The Markup. The New York Times reported that Facebook had rolled out a feature intended to promote positive news about the social network in users’ feeds, in part to bury negative press about the company. And ProPublica reported that Facebook Marketplace was riddled with fraudulent and scam listings.

And, this week, The Markup’s Citizen Browser project manager Angie Waller and reporter Colin Lecher revealed that Facebook has been disproportionately amplifying Germany’s far-right political party during the run-up to tomorrow’s parliamentary elections.

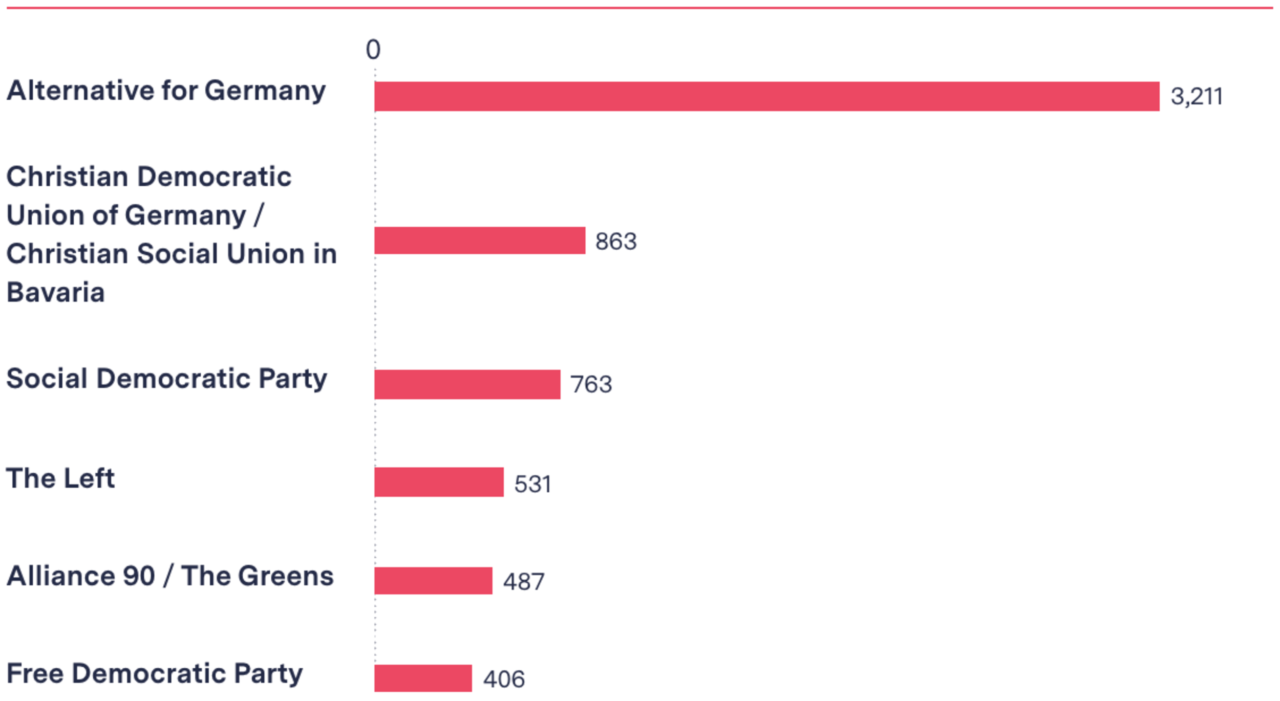

Although the political party Alternative for Germany (Alternative für Deutschland), or the AfD, is relatively small (it won just 12 percent of the vote in the 2017 parliamentary elections), its supporters’ anti-immigrant and anti-pandemic-restrictions posts were displayed more than three times as often as posts from much larger rival political parties and their supporters in our German panelists’ news feeds.

AfD and Its Supporters Peppered Our Panelists’ News Feeds with Posts

AfD-related pages’ posts appeared more than three times as often as posts from pages supporting any other party

The data, obtained through The Markup’s Citizen Browser project, in partnership with Germany’s Süddeutsche Zeitung, came from a diverse panel of 473 German Facebook users who agreed to share their Facebook feeds with us.

Facebook objected that our sample was too small to be accurate. “Given its very limited number of participants, data from The Markup’s ‘Citizen Browser’ is simply not an accurate reflection of the content people see on Facebook,” Facebook spokesperson Basak Tezcan said in an emailed statement.

But German political researchers said our findings do reflect just how well the AfD has mastered the type of sensational content that performs well in Facebook’s algorithmic feeds. Consider an AfD post in our dataset that complained about “climate hysteria” and drew more than 5,000 “angry” reactions on Facebook. “They [AfD] trigger anger, fear—I would say anarchic or basic emotions,” Isabelle Borucki, an interim professor at the University of Siegen who studies German political parties, told The Markup.

Meanwhile, Facebook also took steps that effectively blocked research like our Citizen Browser panel. The Markup’s Corin Faife reported this week that Facebook has rolled out changes to its website code that foil automated data collection by jumbling some code in accessibility features used by visually impaired users.

Facebook responded that it did not make the code changes to thwart researchers and that it was looking into whether the changes affect visually impaired users.

But given Facebook’s history of going after watchdogs—such as its recent shutdown of the accounts of researchers at the NYU Ad Observatory—Facebook’s move was greeted with skepticism.

Laura Edelson of the NYU project said it’s “unfortunate that Facebook is continuing to fight with researchers rather than work with them.” And U.S. Senator Ron Wyden told The Markup, “It is contemptible that Facebook would misuse accessibility features for users with disabilities just to foil legitimate research and journalism.”

All the while, it’s worth remembering that Facebook continues to be wildly profitable. In 2020, Facebook had revenue of $85.97 billion and net income of $29.15 billion, which means it generated a very healthy net profit margin of about 34 percent.

And one way that it stays so profitable is by underinvesting in harm mitigation. At the end of 2020, Facebook said it had 2.8 billion monthly active users and a global workforce of 58,604. That means that even if every Facebook employee were engaged in policing harm (which they are not, since many are focused on building technology or other things), each one would be responsible for monitoring about 47,778 Facebook user accounts, not to mention all of the comments and groups and marketplace postings associated with those users.
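For readers who want to check the math, here is a quick sketch in Python that reproduces both figures from the numbers cited above: 2020 revenue, net income, monthly active users, and headcount. It uses only those reported figures; the variable names are our own, and the per-employee number is the same simple division described in the text, not an estimate of actual moderation workloads.

```python
# Figures as cited above for Facebook's 2020 results.
revenue_usd = 85.97e9          # annual revenue
net_income_usd = 29.15e9       # annual net income
monthly_active_users = 2.8e9   # monthly active users at the end of 2020
employees = 58_604             # global workforce at the end of 2020

# Net profit margin: share of each revenue dollar kept as profit.
profit_margin = net_income_usd / revenue_usd

# Users per employee, assuming (unrealistically) that every employee polices harm.
users_per_employee = monthly_active_users / employees

print(f"Net profit margin: {profit_margin:.0%}")
print(f"Users per employee: {users_per_employee:,.0f}")
```

The margin works out to roughly 33.9 percent, which rounds to 34 percent, and the division gives just over 47,778 users per employee.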

In other words, the mathematics of running a wildly profitable social network loved by Wall Street is not particularly conducive to running a police force that keeps activities on the platform in check.

And so, until that calculation changes, journalists like us at The Markup and The Wall Street Journal will keep doing our best to point out the public harms of this under-policed global speech platform.

As always, thanks for reading.

Best,

Julia Angwin

Editor-in-Chief

The Markup