Good morning. Our Hello World newsletter today includes an interview that we think will be of interest to our Citizen Browser subscribers as well. It’s reproduced below.

Hello, friends,

This week we published two stories about big tech companies failing to enforce their own rules aimed at stopping people from using their platforms to spread extremist content and organize violence.

On Tuesday, we revealed that Facebook had not lived up to the promise it made to Congress, and then reiterated in January, to stop recommending political groups during the election and the transfer of power. Using our Citizen Browser national panel data, reporters Leon Yin and Alfred Ng found that 12 of the top 100 groups recommended to our panelists in December were political.

Disturbingly, Facebook pushed partisan groups most often to the Trump voters on our panel, including groups where members shared conspiracy theories, called for violence against public officials, and made plans to attend the rally that preceded the Capitol riot.

In response to The Markup’s findings, Facebook spokesperson Kevin McAlister said, “We have a clear policy against recommending civic or political groups on our platforms and are investigating why these were recommended in the first place.” In response to a follow-up query for this newsletter, McAlister said there were no updates.

And on Thursday, reporter Jeremy B. Merrill revealed that Facebook and Google had sold ads peddling merchandise affiliated with a far-right militia group, despite both companies having policies banning militia content. The ads promoted shirts with logos for the militia and slogans like “Give Violence a Chance”—products that Jeremy also found listed for sale on Amazon, despite its policy against offensive materials.

All three companies removed the militia ads and product listings after we contacted them.

Jeremy found the Facebook militia merch ad in a database run by the Online Political Transparency Project at NYU, which enables participants to contribute the ads they see in their news feeds. (Facebook, which does not publicly disclose how ads on its platform are targeted, has repeatedly sought to shut that tool down.)

It’s not a coincidence that both of these stories relied on the same novel journalistic approach: panels of people who use technical tools to share data with reporters. Those tools give journalists something we’ve never had before, a dashboard that allows us to monitor the spread of extremist content in real time.

As big tech platforms gain more power over our discourse, I believe that persistent monitoring tools like these, while expensive to build, will be key to holding accountable the constantly changing algorithms that govern our lives.

To understand how we got here and where we might be going, I interviewed The Markup’s very own Surya Mattu, who has built two persistent monitoring tools for us and first introduced me to the concept. Surya is an engineer by training who previously worked as a data reporter at Gizmodo’s Special Projects Desk and as a contributing researcher at ProPublica. He has also worked as a researcher at Bell Labs, Data & Society, and the MIT Media Lab.

The interview is below, edited for brevity.

Angwin: You introduced me to the concept of persistent monitoring as a journalistic goal. Remind me, when did you first think of it?

Mattu: This started from our work building a browser extension at ProPublica that allowed readers to see the ad categories they were assigned to on Facebook. That led to the discovery that Facebook was allowing housing advertisers to racially discriminate. And I noticed that this story kept resurfacing as Facebook claimed to fix the problem and then didn’t.

What I realized is that when we do these stories, people don’t see that things don’t change afterward. So we needed a structure to keep monitoring the harm we found and to show that the platforms haven’t changed to address it, even though they now know about it.

Angwin: From my point of view, constant monitoring is sort of an obvious point but one that journalists don’t often think about. Does it come from your engineering perspective?

Mattu: When I started working with journalists, I realized that journalists are really focused on showing the harm. In pre-algorithmic systems, when you found that harm, you usually had a person to blame for it, and they would have to be held accountable.

But what’s different with the tech industry is that algorithms allow companies to skirt accountability. My engineering background let me see in the code, on the platforms themselves, when they ignored what we had shown in our stories. I wanted to use the tool and the engineering to highlight what their negligence was enabling.

Angwin: What was the first persistent monitoring tool that you actually built?

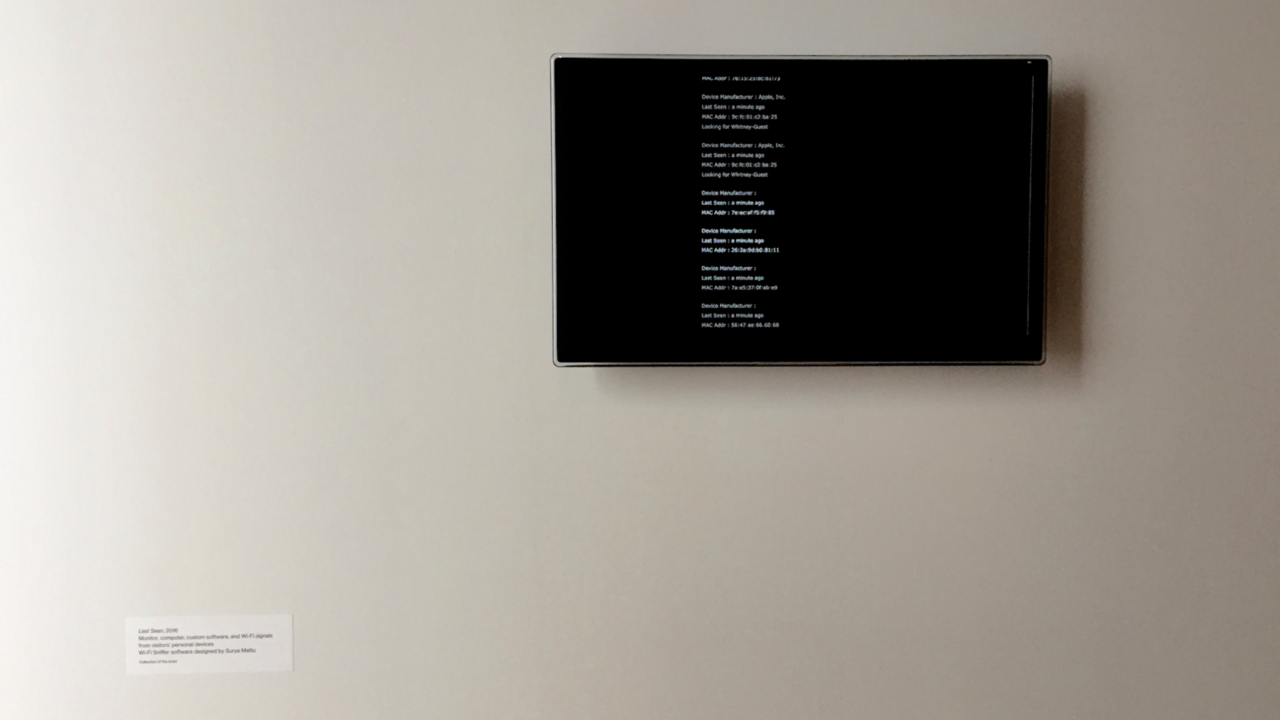

Mattu: In 2014, I wrote a program to show people that their phones kept a personal history of every Wi-Fi network they had ever connected to. So if you’d been to a conference, a business-class lounge, or a hotel, your phone was broadcasting all of that information, and you had no idea. And advertisers were using this data to target people.

When I read about it in an engineering publication, it was described as a small glitch in the network protocol. I would tell people about it, and their eyes would glaze over. But when I showed them the networks that their phone was sharing, their reaction would completely change—they would be like, “How do you know that? This is my ex-partner’s network!”

This project ended up as a part of Laura Poitras’s show “Last Seen” at the Whitney Museum. It persistently monitored the visitors to the Whitney show and showed them what their phones were revealing about them.

Angwin: Blacklight was the first persistent monitoring tool you built for journalism. As I recall, I first suggested it to you as an internal tool that we would use to do a privacy census across the web. But you pushed for it to be a real-time forensics tool. Explain your thinking.

Mattu: What I learned from the Wi-Fi project was that people need a narrative to see what is happening in their own lives. I realized that a census was just not personal.

I wanted to up the ante by making the subject feel fresh enough that users would want to engage with it again. That’s where my motto to provide readers with “agency not apathy” comes in.

Angwin: What is the technical challenge of taking something from a one-off tool to a persistent monitoring tool?

Mattu: It basically means you age five years in one year! [Laughs.]

But seriously, it was a really interesting process of building technology the way you would report a journalism story. Normally an engineer builds a product around its function, but in this case the function emerged as Aaron Sankin was reporting out the story that would accompany the tool.

Sometimes I would have to throw out a lot of code based on where the editorial process was taking us. For example, when Aaron started talking to website operators, they were really surprised that we had found that their website was setting DoubleClick cookies. They thought they just had Google Analytics.

So we realized we needed a test in the tool for Google Analytics being used for retargeting [which is when Google Analytics sets a DoubleClick cookie allowing Google to track users across the web]. From an engineering perspective, monitoring that cookie was the same as monitoring all the other cookies, but from an editorial perspective, it was significant enough to warrant a separate test.
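A minimal sketch of what a check like this could look like, written in Python. The `Cookie` record, the function names, and the idea that the tool sees a list of cookies set during a page load plus a flag for whether Google Analytics loaded are all hypothetical assumptions; this is not Blacklight's actual code. It also simplifies attribution: it flags any DoubleClick cookie on a page that loads Google Analytics, whereas a real test would need to confirm that Google Analytics is what set it.

```python
from dataclasses import dataclass


@dataclass
class Cookie:
    # Hypothetical record for a cookie observed while loading a page.
    name: str
    domain: str


def is_doubleclick(cookie: Cookie) -> bool:
    """True if the cookie belongs to doubleclick.net or one of its subdomains."""
    # Cookie domains are often stored with a leading dot (".doubleclick.net").
    domain = cookie.domain.lstrip(".").lower()
    return domain == "doubleclick.net" or domain.endswith(".doubleclick.net")


def ga_retargeting_detected(cookies: list[Cookie], loads_google_analytics: bool) -> bool:
    """Flag a page that loads Google Analytics and also ends up with a DoubleClick cookie.

    Mechanically this is the same domain check a tool would run for any
    third-party cookie; it is reported as its own test for editorial reasons.
    Simplification (assumption): presence of both is treated as the signal,
    without verifying which script actually set the DoubleClick cookie.
    """
    return loads_google_analytics and any(is_doubleclick(c) for c in cookies)
```

The domain comparison is identical to what a general cookie monitor would do, which matches Mattu's point: the retargeting test differs editorially, not technically.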

Angwin: Now let’s talk about Citizen Browser as a persistent monitoring tool. When you and I first started talking about building a panel, I was thinking about a browser extension. And you argued we needed to build a browser because browsers are really good at running persistently in the background.

Mattu: It was basically because I wanted to treat it like actual evidence gathering, and in that sense it couldn’t be as opportunistic as a browser extension. From your previous experience and mine, we knew that people didn’t really use Facebook on their desktops anymore. So building a browser allowed us to capture data without people having to use their desktop computers to access Facebook.

We wanted to persistently check how things changed on Facebook over time. We were not just trying to build a survey or a census of what was happening on Facebook. We were trying to show anecdotally, with real people’s lives, how differently people experience this platform. And to do that, we needed to capture a meaningful amount of data over time.

Angwin: What were the challenges of building Citizen Browser?

Mattu: It was difficult to find a way to collect this data responsibly and, first and foremost, in a way that respected the privacy of the people sharing data with us. Doing that took some really insane engineering.

We probably did twice the amount of work to ensure we were following all the best practices we could. And we really constrained ourselves in what we could use, because we knew that if we didn’t do that from the start, we would leak data.

We basically put up a guardrail: we won’t even look at data that we can’t report on. You were clear from the start that we would focus only on what Facebook was recommending. We weren’t interested in how people were using the platform. We were interested in how Facebook was providing information to users.

Angwin: Do you think we will need more persistent monitoring tools in the future?

Mattu: I often think about something you once said to us on a call about how important it is in the digital age to have receipts for the truth. In a world of declining trust in journalism, you need to have the evidence to back up the claims you are making.

The reason persistent monitoring is important is that these platforms are constantly changing. Building persistent monitoring tools is the only way to know if the harm you are focusing on is still taking place.

Best,

Julia Angwin

Editor-in-Chief

The Markup