The Markup, now a part of CalMatters, uses investigative reporting, data analysis, and software engineering to challenge technology to serve the public good.

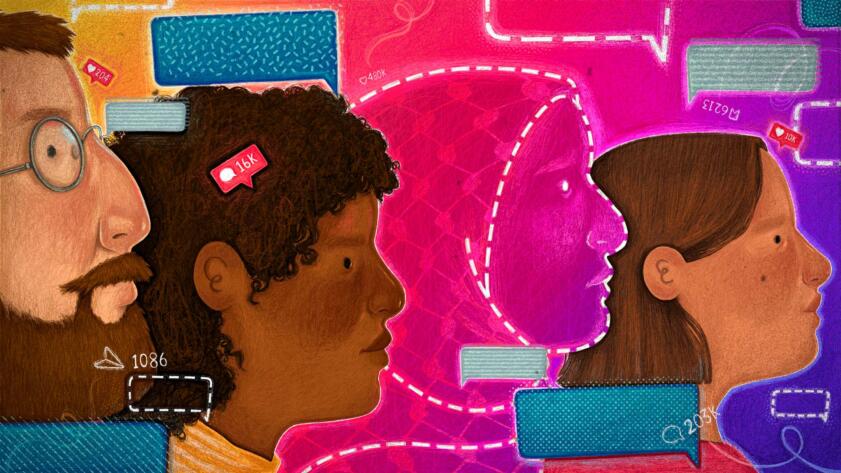

When social media platforms get as big as Instagram—more than 2 billion monthly active users—a huge majority of content moderation is automated. Earlier this week, The Markup published an investigation into how Instagram’s moderation system demoted images of the Israel–Hamas war and denied users the option to appeal, as well as a piece on what someone can do if they think they’ve been shadowbanned.

But how do these automated systems work in the first place, and what do we know about them?

How does any platform moderate billions of posts quickly?

To moderate billions of posts, many social media platforms first compress posts into bite-sized pieces of text that algorithms can process quickly. These compact blurbs, called “hashes,” look like a short combination of letters and numbers, but one hash can represent a user’s entire post.

For example, a hash for the paragraph you just read is:

And a hash for our entire investigation into shadowbanning on Instagram—a little over 3,200 words—is:

You can play around with generating hashes yourself by using one of many free tools online. (We selected the “SHA-256” algorithm to generate ours.)
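For readers who would rather generate hashes in code than with an online tool, here is a minimal sketch using Python's standard `hashlib` module. The sample strings are placeholders we made up for illustration, not the text hashed above. The point is that SHA-256 always returns 64 hexadecimal characters, whether the input is one word or thousands, and that a one-character change produces an unrelated-looking hash.

```python
import hashlib


def sha256_hex(text: str) -> str:
    """Return the SHA-256 digest of `text` as 64 hexadecimal characters."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


# Placeholder inputs (not the article's actual paragraphs).
short_post = "hello"
long_post = "hello " * 3200   # stand-in for a story of several thousand words
edited_post = "hello!"        # the short post with one character added

for label, post in [("short", short_post), ("long", long_post), ("edited", edited_post)]:
    digest = sha256_hex(post)
    print(f"{label:>6}: {digest} ({len(digest)} characters)")
```

Because even a tiny edit changes the whole digest, this kind of hash can only catch exact copies of a post.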

In many ways, hashes operate like fingerprints, and content moderation algorithms search through their database of existing fingerprints to flag any matches to posts they already know they want to remove. Dani Lever, a spokesperson at Meta, Instagram’s parent company, confirmed that Instagram uses hashes to “catch known violating content.”
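The fingerprint lookup described above can be sketched in a few lines. This is an illustration under our own assumptions, not Meta's implementation: the `known_violating` set and the sample posts are hypothetical, and a real system would hold far more fingerprints in a dedicated database.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Hash raw post content into a fixed-length fingerprint."""
    return hashlib.sha256(content).hexdigest()


# Hypothetical database of fingerprints for content already judged violating.
known_violating = {
    fingerprint(b"previously removed post"),
}


def is_known_violation(upload: bytes) -> bool:
    """Flag an upload only if its fingerprint exactly matches a known one."""
    return fingerprint(upload) in known_violating


print(is_known_violation(b"previously removed post"))   # exact re-upload
print(is_known_violation(b"previously removed post!"))  # slightly edited copy
```

The first check matches and the second does not, which shows both the speed of this approach (one set lookup per post) and its limit: any edit, however small, slips past an exact-match fingerprint.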

Even if someone edits images or videos after posting, platforms can still identify similar posts by using “perceptual hashing,” which creates something like a partial fingerprint based on parts of the content that can survive alteration, a process described in a 2020 paper about algorithmic content moderation. Perceptual hashing is likely how YouTube can identify snippets of copyrighted music or video in an upload and proactively block or monetize it.
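To make the idea of a partial fingerprint concrete, here is a toy version of "average hash," one simple and widely documented perceptual hashing technique. We are not claiming that Instagram or YouTube use this particular method. The 4x4 grids of brightness values are invented stand-ins for images; a real pipeline would first shrink each image to a small grayscale grid.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Pack one bit per pixel: 1 if the pixel is brighter than the image's mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two hashes differ."""
    return bin(a ^ b).count("1")


# Invented 4x4 grayscale "images" (0 = black, 255 = white).
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [10, 20, 200, 210],
    [15, 25, 205, 215],
]
# The same image after an edit that brightens every pixel.
brightened = [[min(255, p + 30) for p in row] for row in original]
# A different image with the bright region moved.
different = [
    [200, 210, 10, 20],
    [205, 215, 15, 25],
    [10, 20, 200, 210],
    [15, 25, 205, 215],
]

print(hamming_distance(average_hash(original), average_hash(brightened)))  # 0
print(hamming_distance(average_hash(original), average_hash(different)))   # 8
```

The brightened copy keeps the same pattern of light and dark, so its hash is unchanged, while the rearranged image differs in 8 of 16 bits. A matcher would treat hashes within some small distance as the same content; where to set that cutoff is a tuning decision.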

Robert Gorwa, a postdoctoral researcher at the Berlin Social Science Center and lead author of the paper, told The Markup that regulators have been pressuring the biggest social media companies to be more proactive about sharing knowledge and resources on content moderation since the 2010s. In 2017, Facebook, Microsoft, Twitter, and YouTube came together to form the Global Internet Forum to Counter Terrorism, an initiative that, among other things, administers a database of hashes of content from or supporting terrorist and violent extremist groups. Organizations like the National Center for Missing and Exploited Children operate similar hash-sharing platforms for online child sexual abuse material.

In his paper, Gorwa said that Facebook used this technique to automatically block 80% of the 1.5 million re-uploads of the 2019 live-streamed video of the Christchurch mosque mass shooting. But checking uploads against hashes doesn’t help platforms evaluate whether brand new posts are violating standards or have the potential to get them in trouble with their users, advertisers, and regulators.

How are new posts moderated in real time?

That’s where machine learning algorithms come in. To proactively flag problematic content, Meta trains machine learning models on enormous amounts of data: a continuously refreshed pool of text, images, audio, and video that users and human content moderators have reported as inappropriate. As the model processes more impermissible content, it gets more efficient at flagging new uploads.

Long Fei, who until 2021 worked at YouTube as a technical lead managing its Trust and Safety Infrastructure Team, told The Markup that, behind the scenes, specialized models scan what users post to the site. According to Fei, these specialized models have different jobs. Some look for patterns and signals within the posts, while others weigh those signals and decide what to do with the content.

For example, Fei said, “there may be models looking for signals of guns and blood, while there may be other models using the signals and determining whether the video contains gun violence.” While the example itself is oversimplified, Fei said, it’s a good way to think about how models work together.
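Fei's simplified picture of signal models feeding a decision model can be sketched as follows. Everything here is hypothetical: the `Signals` fields, the tag-based stand-in for real detectors, the equal weights, and the 0.75 threshold are our assumptions for illustration, not YouTube's system.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    """Scores from 0.0 to 1.0 produced by hypothetical signal models."""
    gun: float
    blood: float


def detect_signals(tags: set[str]) -> Signals:
    """Stand-in for specialized models that look for signals in a video.

    Real detectors would analyze frames and audio; here we read made-up tags.
    """
    return Signals(
        gun=1.0 if "gun" in tags else 0.0,
        blood=1.0 if "blood" in tags else 0.0,
    )


def contains_gun_violence(signals: Signals, threshold: float = 0.75) -> bool:
    """Stand-in for a second model that weighs the signals and decides."""
    score = 0.5 * signals.gun + 0.5 * signals.blood
    return score >= threshold


hunting_ad = detect_signals({"gun", "forest"})
violent_clip = detect_signals({"gun", "blood"})

print(contains_gun_violence(hunting_ad))    # False: one signal alone is not enough
print(contains_gun_violence(violent_clip))  # True: both signals are present
```

Separating detection from decision means either layer can be retrained or replaced without touching the other.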

Instagram says it builds “machine learning models that can do things like recognize what’s in a photo or analyze the text of a post. … models may be built to learn whether a piece of content contains nudity or graphic content. Those models may then determine whether to take action on the content, such as removing it from the platform or reducing its distribution.”
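One way to picture the last step in Instagram's description, where a model's output leads to removing content or reducing its distribution, is a set of score thresholds. This is a sketch under our own assumptions: the `Action` names and the 0.9 and 0.6 cutoffs are hypothetical, and Meta has not published how its models map scores to actions.

```python
from enum import Enum


class Action(Enum):
    NONE = "no action"
    REDUCE = "reduce distribution"
    REMOVE = "remove from platform"


def choose_action(
    violation_score: float,
    remove_at: float = 0.9,   # hypothetical cutoff for removal
    reduce_at: float = 0.6,   # hypothetical cutoff for demotion
) -> Action:
    """Map a model's confidence that a post violates policy to an action."""
    if violation_score >= remove_at:
        return Action.REMOVE
    if violation_score >= reduce_at:
        return Action.REDUCE
    return Action.NONE


for score in (0.95, 0.70, 0.20):
    print(score, "->", choose_action(score).value)
```

In a setup like this, a post in the middle band stays online but reaches fewer people.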

What can human moderators see?

Platforms also have people who manually moderate content, of course—their work is used to train and check the machine learning algorithms. The human point of view is also necessary to resolve questions requiring sensitivity or diplomacy, and to review user appeals of moderation decisions.

Although platforms have their own internal teams, much of the actual review work is outsourced to contractors that employ people in the U.S. and around the world, often at low wages. Recently, content moderators have started to organize for better pay and mental health services to help with the trauma of flagging the internet’s darkest content.

There isn’t a lot of transparency around what information human moderators have access to. A new California law that requires large social media companies to disclose how they moderate content is currently being challenged in court by X, formerly known as Twitter.

Joshua Sklar, a former Facebook content moderator, told The Markup that human moderators often don’t have the frame of reference needed to make informed decisions. The team he worked on specifically looked at moderating individual Facebook posts, but Sklar said he could barely see any information other than the post itself.

“You’re pretty much viewing these things out of context,” Sklar said. “Say someone did a series of posts of images that spelled out a racial slur. You wouldn’t be able to tell [as a moderator].”

Gorwa has heard similar accounts. “Human moderators typically have limited context about the person posting and the context in which the content they’re reviewing was posted. The full thread in which the post appeared is usually not available,” he said.

Meta’s transparency center describes how review teams work, but does not describe what review teams actually see about users and what they posted.

When cases need context, Meta says it will “send the post to review teams that have the right subject matter and language expertise for further review. … When necessary, we also provide reviewers additional information from the reported content. For example, words that are historically used as racial slurs might be used as hate speech by one person but can also be a form of self-empowerment when shared by another person, in a different context. In some cases, we may provide additional context about such words to reviewers to help them apply our policies and decide whether the post should be left up or taken down.”

Meta declined to comment on what Instagram’s human moderators can see when reviewing content.

How is artificial intelligence being used?

Robyn Caplan, an assistant professor at Duke University’s Sanford School of Public Policy who researches platform governance and content moderation, said that previously, “it was thought that there were certain types of content that were not going to be done through automation, things like hate speech that require a lot of context. That is increasingly not the case. Platforms have been moving towards increased automation in those areas.”

In 2020, Facebook wrote about how it “uses super-efficient AI models to detect hate speech.” Now, in 2024, Meta says it has “started testing Large Language Models (LLMs) by training them on our Community Standards to help determine whether a piece of content violates our policies. These initial tests suggest the LLMs can perform better than existing machine learning models.” The company has also created AI tools to help improve the performance of its existing AI models.

“There’s hype about using LLMs in content moderation. There’s some early indications that it could yield good results, but I’m skeptical,” Gorwa said.

Despite investing in AI tools, Meta has had some high-profile stumbles along the way, such as when Instagram associated posts about the Al-Aqsa Mosque with terrorist organizations in 2021.

More transparency, please

Our reporting has shown that platforms often say they are constantly building new moderation tools and tweaking the rules for what is permissible on their site. But that isn’t the whole story.

Platforms are overwhelmed with the flood of content they encourage—and depend on—from their users. They make efforts to root out bad actors, but often find themselves sanctioning accounts or posts made by people who are expressing themselves in good faith. As we’ve seen over and over again, the results are often disproportionate, with the views of a single group suffering far more than others.

It’s a difficult problem—there is truth to the statement that Lever, Meta’s spokesperson, made in our story earlier this week: her company is indeed operating massive platforms in “a fast-moving, highly polarized” environment.

Shifting societal norms, technological advances, and the chaos of world events mean we may never reach an equilibrium where content moderation is solved.

“What we’ve really learned out of the debate over content moderation over the last several years is that [it] has been implied that there is a solution to a lot of these problems that is going to satisfy everyone, and there might not be,” Caplan said.

But as long as tech companies refuse to be more forthcoming about how they police content, everyone on the outside, including users, will be left trying to piece together what they can about these systems—while having no choice but to live with the results.