Hello!

Welcome to The Markup’s third week of publishing!

I hope you are taking care of yourself in the face of the spreading coronavirus. To do our part to slow the spread of the disease, we have temporarily shut down our office and are all working remotely until the end of March, when we will reassess.

As health care systems stretch and strain to handle a global pandemic, they must constantly triage who gets care, how quickly, and at which level. This is something emergency departments have always done.

But the health care system is increasingly relying on algorithms to make decisions about who gets health care and how much, so I thought it would be a good time to discuss this growing trend.

Algorithms, of course, are just recipes for decision-making. And they have long been an important tool for triaging patients and providing medical staff with consistent rules to determine which need to be treated first.

But given the staggeringly high cost of health care in the United States, algorithms are also creeping into other aspects of the system—from diagnosing patients to deciding how much care a person will get.

China is, of course, ahead of the curve on this front. It requires citizens to install a smartphone app that uses a black-box algorithm to assign each of them a color code (green, yellow, or red) rating their level of alleged coronavirus risk. Intrepid reporting from The New York Times reveals that the app also sends users’ locations to the police, allowing law enforcement to monitor citizens’ movements in an unprecedented fashion.

The U.S. is nowhere near that level of surveillance and automated decision-making—particularly given that we are currently suffering from a nationwide shortage of tests and we have a culture of free expression that would likely reject such invasive surveillance.

But we are still moving toward algorithmic health care systems. Last week, The Markup reporter Colin Lecher assessed the current state of automated health care decision-making in our feature called “Ask The Markup,” where we aim to provide the best available evidence to answer your questions.

Colin found that several states have been pushing algorithms to determine how much help to provide to sick people on government programs. In 2016, Arkansas began using an algorithm to allocate in-home visits for people with disabilities. This led to drastic cuts in care hours for many people.

After Legal Aid of Arkansas filed a lawsuit, it emerged that some people lost needed help because the vendor had failed to properly account for diabetes and cerebral palsy in the algorithm. Without the lawsuit, those who lost care would have had little evidence that they could use in the appeals process to challenge the algorithm’s decisions.

A similar fiasco happened when Idaho implemented an algorithm to determine individualized costs for home care. Under the system, the state cut the home care services to some households by as much as 42 percent, drastically limiting caretakers’ visits to certain patients. The ACLU of Idaho sued, and the suit revealed that the state had relied on flawed data. A judge ordered the program to be overhauled.

And, of course, some algorithmic tools have been found to be biased against vulnerable groups. Last year, researchers found that a widely used algorithm that predicts which patients will benefit from extra medical care was dramatically underestimating the needs of the sickest black patients.

Unfortunately, these algorithms were exposed only after they had been implemented and people’s lives had already been affected. One of our top goals here at The Markup is to hold as many algorithms accountable as we can.

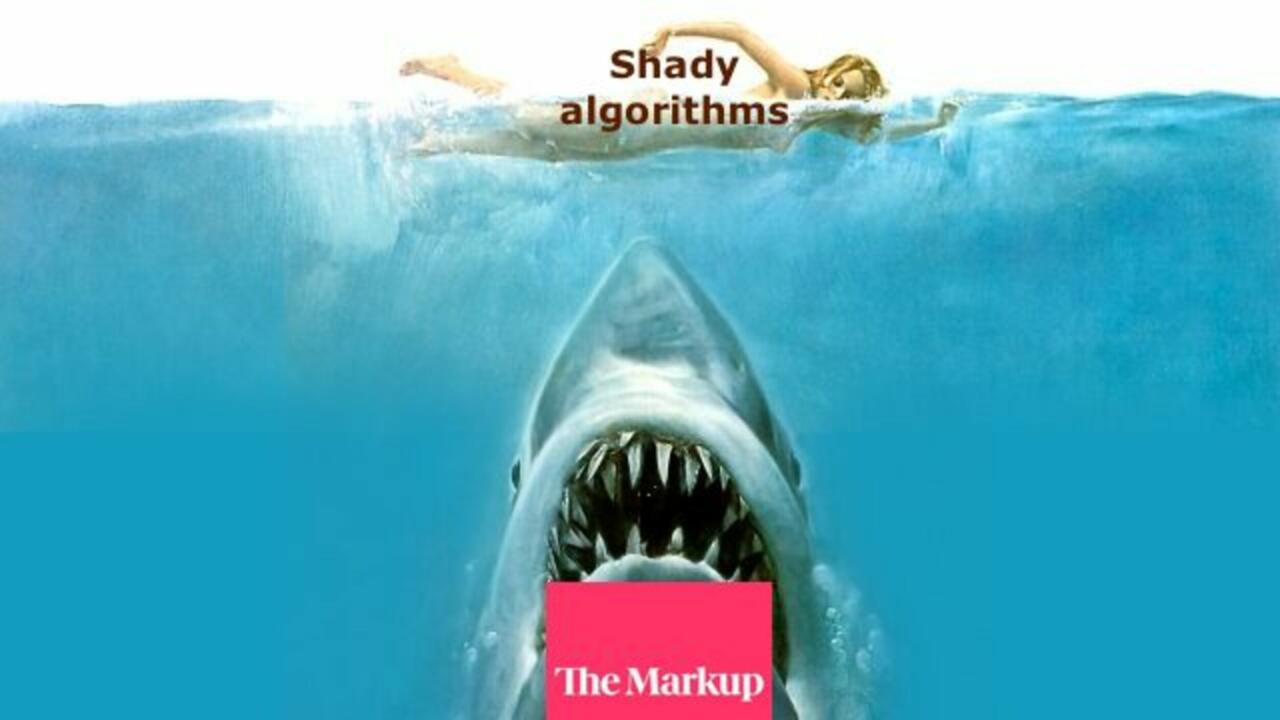

Special thanks to reader Felipe De La Hoz, who made a meme that we feel perfectly encapsulates what we are trying to do:

In a world in which people are drowning in information, we hope that our “Ask The Markup” series gives you meaningful answers to important questions. We would love to hear questions you have about how algorithms and technology could be affecting your life. Please write to us with your suggested questions at ask@themarkup.org.

Thanks for reading!

Best,

Julia Angwin

Editor-in-Chief

The Markup