Hello World is a weekly newsletter—delivered every Saturday morning—that goes deep into our original reporting and the questions we put to big thinkers in the field. Browse the archive here.

Everyone’s favorite punching bag, Section 230 of the Communications Decency Act of 1996, made its way to the Supreme Court this week in Gonzalez v. Google. The Gonzalez case confronts the scope of Section 230, and in particular examines whether platforms are liable for algorithms that target users and recommend content. The stakes are high: Recommendation algorithms underpin much of our experience online (search! social media! online commerce!)—so the case spawned dozens of friend-of-the-court briefs, hundreds of webinars and tweet threads, and new wrinkles for people who love the internet. So basically, it’s my idea of a good time.

On that note: Hi! I’m Nabiha Syed, CEO of The Markup and a media lawyer by training. I like unanswered questions and moments where we confront the messiness of our lives as mediated by technology.

The sprawling two-hour-and-41-minute oral argument in Gonzalez pulled a lot of threads, some of which were clarifying and others of which were, well, about thumbnails and rice pilaf. (Seriously.) My takeaway is that the court is unlikely to gut Section 230, but it might nibble at its scope in chaotic ways. To help make sense of it all, I turned to two of my favorite legal scholars and old colleagues of mine from the Yale Information Society Project: James Grimmelmann, professor at Cornell Law School and Cornell Tech, and Kate Klonick, law professor at St. John’s University and fellow at the Berkman Klein Center at Harvard.

Here’s what they had to share, edited for brevity and clarity.

Nabiha: Let’s take a minute to revisit the history and legislative intent of Section 230: What was it meant to incentivize?

James: It seems paradoxical, but Section 230 was intended to encourage internet platforms to moderate the content they carry. Several court decisions in the years before Section 230 was enacted had created a perverse legal regime in which the more effort a platform put into moderating harmful content, the stricter a standard it would be held to. By creating a blanket immunity, Section 230 ensured that platforms can remove the harmful content that they find without worrying that they will be held liable for the harmful content that they miss.

In the years since then, the consensus about Section 230’s purpose has shifted a bit. The leading theory now is that Section 230 prevents “collateral censorship.” Without some kind of immunity for user-posted content, platforms will overmoderate. Anytime content has even the slightest whiff of harmfulness or illegality, platforms will take it down to avoid legal risk. Lots of socially valuable content will be taken down along with the actually harmful stuff, throwing the baby out with the bathwater. On this theory, Section 230 reflects a belief that there is more good content out there than bad content, and that platforms will settle on moderation policies that keep their users happy even without the threat of liability.

Kate: It is a constant surprise to me that Section 230 has become this black hole for big tech agita, and I think it’s most constructive to focus on its history and legislative intent.

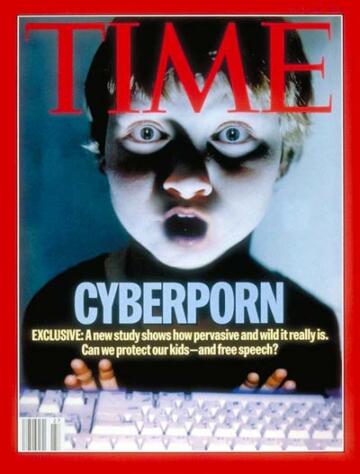

In the mid-1990s, there was a bit of a moral panic over the amount of pornography that was available on the internet, fueled by a (since debunked) article in the Georgetown Law Journal and things like this classic Time magazine cover:

That caused Congress to start what would be called in modern terms a “Make the Internet Decent Again” campaign. The result was the Communications Decency Act (CDA), a very broad piece of legislation that tried to bring “decency” to the internet.

At the same time, courts heard different types of tort claims against internet companies for speech posted on their sites, and the rulings were all over the place. One decision essentially told online intermediaries that they were liable, and another one said that they weren’t. The result was a lot of confusion. If platform providers were liable for anything people said on their platforms, they should just take down everything but the sweetest of cat photos. And if they weren’t liable for anything people said on their platforms, then why even bother spending resources taking down swastikas or other offensive content?

Fast-forward a bit: The CDA is passed (including Section 230, which essentially makes online intermediaries immune from tort liability), and it is almost immediately challenged on First Amendment grounds. That case, Reno v. ACLU, was heard by the Supreme Court in 1997, and it struck down all of the CDA for First Amendment reasons except for Section 230, which survived. Shortly thereafter, in Zeran v. AOL, the 4th Circuit broadly construed the immunity for platforms, a reading that has served as precedent for courts all over the United States for the last 25 years. So that’s the story of how we got Section 230, kept Section 230, and then strengthened Section 230.

Nabiha: The Gonzalez argument revealed some confusion over what counts as publishing—namely, whether it includes recommending, endorsing, organizing, prioritizing, or presenting information. Why is it so important to get this scope right? And what does this mean for search?

James: If I post a video to YouTube, where other people view it, it is obvious that is the kind of activity that Section 230 protects. But on Tuesday the justices went around in circles for two and a half hours trying to figure out what else fits in that category. They seemed to agree that a straightforward search engine, like Google or Bing, should also be covered: It provides a filtered list of websites that are relevant to the user’s query. They had a harder time with sites that make recommendations, which also provide a filtered list of relevant content.

The problem in both cases is that without giving some kind of immunity, it is not clear that the feature could exist. It might be possible to imagine a YouTube without recommendations; it is not possible to imagine a Google Search without search results. Even worse, there’s not a sharp dividing line between search and recommendation; Google and Bing use lots of signals besides the user’s query (e.g., geographic location and search history). I think the goal of legal policy in this area should be to help users find what they want, but the arguments on Tuesday focused more on what platforms do than on what users want.

Kate: The plain meaning of Section 230’s publishing definition is already given in the statute, specifically, 47 USC § 230(f)(4)—and full disclosure, I was on an amicus brief centered on this point. It literally includes the words “pick, choose, analyze, or digest content.” Did they know in 1996 that we’d be contemplating the scale of targeting that modern algorithms allow? No, of course not. But they explicitly considered that something would exist to play that curation role of picking, choosing, analyzing, or digesting content. And this isn’t a constitutional issue. If that meaning has changed, it’s not the court’s job to change it. That’s Congress’s job.

Nabiha: At various points, the justices danced with the idea of “neutral” algorithms and “neutrality” as a way to approach the question presented. I find the idea of a neutral algorithm slightly absurd, but you do see an analysis of “neutral tools” pop up in Section 230 jurisprudence. What’s going on here?

James: There are definitely contexts in which strict neutrality is coherent and defensible. Offline, public accommodation laws require neutral, even-handed treatment: Madison Square Garden can’t kick you out of a Mariah Carey concert because of your race or because you work for a law firm the owner doesn’t like. Online, network neutrality laws (where they exist) require ISPs to treat traffic from different sites identically. These work because there’s a clear definition of what neutrality means: Anyone can buy a ticket and be seated.

At the content layer, strict neutrality is often either incoherent or unimaginably awful. I happen to like a chronological feed, but if you follow thousands of people on Twitter, a strictly chronological feed is impossible to keep up with. A truly neutral search engine would have to rank every website the same, which is impossible, or use some kind of completely arbitrary criterion, like page size or alphabetical order. That doesn’t work.

When people, including Supreme Court justices and tech billionaires who genuinely should know better, float this idea of “neutrality,” I think one of two things is going on. Sometimes they’re reasoning by analogy from offline contexts, without realizing how pervasive and indispensable algorithmic ranking is online. And sometimes they have in mind a weaker notion of neutrality, one in which the platform doing the ranking is viewpoint-neutral: It doesn’t try to press any particular agenda on its users. Unfortunately, while viewpoint neutrality can be an appealing goal when it comes to First Amendment law and speech regulation by governments, it doesn’t work in the content-moderation space because there are viewpoints embedded in almost every widely used content moderation practice. “Death threats are bad” is a viewpoint. And even viewpoint-neutral moderation policies, like “don’t send unsolicited fundraising emails that users didn’t sign up for and don’t want,” can look discriminatory in application if it turns out that one of the two major political parties disproportionately sends spammy fundraising emails.

Kate: Your instinct is right, and I’m going to cheat and borrow from what professor Eric Goldman wrote, summarizing the Gonzalez oral arguments: “Algorithms are never neutral and always discriminate.” Eric is exactly right here, and I hope these questions start to deconstruct some of the technology myths that we’ve been suffering under for the last 20 years:

- Describing technology as the origin of intent. “AI is biased”—no, humans who build it contain bias and the human data it trains on contains bias. The AI just reflects that back to us.

- Technology is objective or neutral. “Neutral tools” is exactly this idea.

- We can fix algorithms with transparency. This reminds me a lot of the brief hope in the early 2000s, as fMRIs came into regular use, that we could “solve” questions of intent or culpability in the law by being able to see how a brain “worked.” Of course we can’t: Even seeing how a brain works, or that a tumor “made” someone do something, doesn’t mean we don’t have to ask the hard philosophical questions about what to do about it.

Nabiha: The magic (and the mayhem) of Section 230 is that it redirects liability to an individual speaker, rather than a third party who is amplifying that speech. In the absence of 230, do you foresee courts limiting third-party liability on First Amendment grounds?

James: In the absence of Section 230, two things would happen.

First, courts would have to ask whether the First Amendment does some of the same work that Section 230 does. While the full breadth of Section 230 probably isn’t constitutionally required, some degree of protection probably is. For the same reason that New York Times v. Sullivan requires actual malice in defamation claims brought by public figures, it’s hard to see how strict liability against platforms could be constitutional. Unless they have some fair reason to know that particular content is illegal, it strains basic notions of justice to hold them liable for it.

Second, courts would also have to work through the details of specific crimes and torts. Defamation, invasion of privacy, wire fraud, promotion of unregistered securities, the practice of medicine without a license … you name it, the courts would have to figure out how it applies to platforms. The existence of Section 230 has prevented the development of substantive law of platform liability. If Section 230 goes away, the courts would have to invent it, in a hurry. In a lot of cases, they might well end up with rules that protect platforms much of the time. The same policy reasons that support Section 230 currently would still be good policy reasons not to hold platforms liable for every harmful piece of content users post. You can interpret that as meaning that Section 230 isn’t indispensable; it could be repealed without breaking the internet. But I think it would be more accurate to say that Section 230 is a load-bearing wall. If it’s demolished, something else will need to hold up that weight.

Nabiha: It’s 2023, so of course AI has entered the chat. What are the implications of this case for generative AI?

James: If the Supreme Court holds that algorithmic recommendations aren’t protected by Section 230, then generative AI is likely to be categorically ineligible for Section 230. That has to be a scary prospect for the companies currently giving users access to chatbots that meet the DSM-5 criteria for multiple serious mental disorders. If the Supreme Court rules for Google, matters will be more complicated. In some ways these AIs simply replicate patterns in their training data; in other ways, they synthesize outputs that can’t be traced back to specific third-party training data. It wouldn’t be a bad thing if the courts had to work this out, one case at a time, over the course of several years. Generative AI is really complicated, and we barely understand how it works and how it will be used. Taking time to work out the legal rules gives us more of a chance of getting them right.

Nabiha: Predictions are a fool’s game, but what’s your gut say about the future of Section 230—in one emoji?

Kate: 💬

James: 🤞

The fun doesn’t stop here. The Supreme Court will rule on the Gonzalez case by this summer. And if there’s one thing we can bet on, the coming months will see more twists and turns in the laws that affect the internet. If getting into the nerdy, legal bits of technology is something you’re interested in, and you have additional questions you’d like answered, send them to me at newsletter@themarkup.org. If we get enough questions, we’ll answer them in a future issue. And you can always reach out to me at nabiha@themarkup.org.

Finally, if you’re interested in diving deeper into what Kate and James have worked on, Kate writes a newsletter called The Klonickles, which you can subscribe to if you like reading about free speech, law, and technology. And James literally wrote the textbook on internet law, which you can get here.

Thanks for reading!

Always,

Nabiha Syed

Chief Executive Officer

The Markup