
Hello World is a weekly newsletter, delivered every Saturday morning, that goes deep into our original reporting and the questions we put to big thinkers in the field.

Hello, friends,

I’m sad to inform you that this is my last missive to you. After founding The Markup five years ago, I am departing this newsroom to pursue other projects. It’s been an honor to correspond with all of you, dear readers, and I am so humbled by all of you who supported my vision. Please stay in touch with me through Twitter, Mastodon, or my personal newsletter.

Before I go, I wanted to share the lessons I learned building a newsroom that integrated engineers with journalists and sought to use a new model for accountability journalism: the scientific method.

I founded The Markup with the idea that striving for vague concepts like “objectivity” or “fairness” can lead to false equivalence. A better approach, I believe, is for journalists to develop a hypothesis and assemble evidence to test it.

At The Markup we pioneered an array of scientifically inspired methods that used automation and computational power to supercharge our journalism. Reflecting on our work, I have distilled 10 of the most important lessons I’ve learned from this approach.

1) Important ≠ secret

In a resource-constrained world, choosing a topic to investigate is the most important decision a newsroom makes.

At The Markup, we developed an investigative checklist that reporters filled out before embarking on a project. At the top of the checklist was not novelty but scale: how many people were affected by the problem we were investigating. In other words, we chose to tackle things that were important but not secret.

For instance, anyone using Google has probably noticed that Google devotes much of its search results page to its own properties. Nevertheless, we decided to invest nearly a year in quantifying how much Google was boosting its own products over direct links to source material, because the quality of Google search results affects nearly everyone in the world.

This type of work has an impact. The European Union has now passed a law banning tech platforms from this type of self-preferencing, and there is legislation pending in Congress to do the same.

2) Hypothesis first, data second

It is extremely tempting for data-driven journalists to jump into a dataset looking for a story, but this is almost never a good way to assess accountability. Instead, it often results in what I like to call “Huh, that’s interesting” stories.

The best accountability stories, data-driven or not, start out with a tip or a hunch, which you report out and develop into a hypothesis you can test.

Hypotheses must be crafted carefully. The statement “Facebook is irredeemably bad” is not a testable hypothesis. It is a hot take. A hypothesis is a claim you can test against evidence, such as: Facebook did not live up to its promise to stop recommending political groups during the U.S. presidential election. (Spoiler: We checked; it did not.)

3) Data is political

Data is powerful. Whoever gathers it has the power to decide what is noticed and what is ignored. People and institutions who have the money to build massive datasets rarely have any incentive to assemble information that could be used to challenge their power.

That’s why we journalists often need to collect our own data and why I built a newsroom that had the engineering talent and social science expertise needed to collect original data at scale.

4) Choose a sample size

The days when journalists could interview three people in a diner and declare a trend are thankfully over. The public is demanding more persuasive evidence from the media.

At the same time, however, not every proof requires big data. It took only one secret court document, provided by whistleblower Edward Snowden, to prove that U.S. intelligence agencies were secretly collecting the call logs of every single American.

The beauty of statistics is that even when you are examining a large system, you often need only a relatively small sample size. When we wanted to investigate Facebook’s recommendation algorithms, Markup reporter Surya Mattu assembled a panel of more than 1,000 people who shared their Facebook data with us. Even though that was a drop in the bucket of Facebook’s more than two billion users, it was still a representative sample to test some hypotheses.
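A rough sense of why about 1,000 people can say something about a platform with billions of users comes from the textbook margin-of-error formula for a proportion. The Python sketch below is illustrative only: it assumes a simple random sample and a 95 percent confidence level, neither of which is a description of how The Markup actually recruited or analyzed its panel, and the function names are hypothetical. It computes the worst-case margin for 1,000 respondents, with and without the finite population correction.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Half-width of a normal-approximation confidence interval for a proportion.

    Assumes a simple random sample. p = 0.5 is the worst case (widest interval),
    and z = 1.96 corresponds to roughly 95 percent confidence.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    return z * math.sqrt(p * (1 - p) / n)


def margin_with_fpc(n: int, population: int, p: float = 0.5, z: float = 1.96) -> float:
    """Margin of error after applying the finite population correction.

    The correction shrinks the margin only when the sample is a sizable
    fraction of the population; for billions of users it is essentially 1.
    """
    if not 0 < n < population:
        raise ValueError("need 0 < n < population")
    fpc = math.sqrt((population - n) / (population - 1))
    return margin_of_error(n, p, z) * fpc


if __name__ == "__main__":
    n = 1_000              # panel size, taken from the text
    users = 2_000_000_000  # "more than two billion" users, taken from the text
    print(f"without correction: +/-{margin_of_error(n):.1%}")
    print(f"with correction:    +/-{margin_with_fpc(n, users):.1%}")
```

Both lines print roughly 3.1 percent. That comparison is the point of the sketch: once a sample is a tiny fraction of the population, its precision depends on how many people are sampled, not on how many exist.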

5) Embrace the odds

If you’re lucky, a dataset sometimes reveals its truths without your having to do any hard math. But for large datasets, statistics is usually the best way to extract meaning. This can mean embracing some wonky-sounding probabilistic findings.

Consider our investigation into whether Amazon placed its own brands at the top of search results. We scraped thousands of searches and found that Amazon disproportionately gave its brands the top slot: Amazon brands and exclusives were only 5.8 percent of products in our sample but got the number one spot 19.5 percent of the time.
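The size of that gap can be expressed as a single ratio. The Python sketch below divides Amazon's share of top slots by its share of products, using only the two percentages reported above. The function name and the "proportional allocation" baseline are illustrative assumptions, not The Markup's published method: a ratio of 1.0 would mean Amazon brands won the top slot exactly as often as their share of the sample would suggest.

```python
def overrepresentation(share_of_sample: float, share_of_top_slot: float) -> float:
    """Ratio of observed top-slot share to the share expected under a simple baseline.

    Baseline (an assumption for illustration): if the top slot went to products
    in proportion to how common they are in the sample, a group making up X
    percent of products would win about X percent of top slots. A result of 1.0
    means no over- or underrepresentation.
    """
    if share_of_sample <= 0:
        raise ValueError("share_of_sample must be positive")
    return share_of_top_slot / share_of_sample


if __name__ == "__main__":
    # The two percentages reported in the text.
    amazon_share_of_products = 0.058
    amazon_share_of_top_slots = 0.195
    ratio = overrepresentation(amazon_share_of_products, amazon_share_of_top_slots)
    print(f"Amazon brands won the top slot {ratio:.1f}x as often as their share predicts")
```

The reported figures give a ratio of about 3.4. A ratio like this only describes the gap; it cannot say whether better ratings or more reviews explain it.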

The problem was that the proportions alone couldn’t tell us whether Amazon won that spot fair and square. Maybe its products were actually better than all the others? To dig deeper, we wanted to see how Amazon brands fared against products with high star ratings or large numbers of reviews.

To do that, investigative data journalist Leon Yin used a statistical technique called random forest analysis, which showed that being an Amazon brand was the most important factor in predicting whether a product won the top slot, far outweighing all of the other potential factors combined. The odds, even though they were a bit complicated to explain, made our finding far more robust.

6) Yes, you need a narrative

Data is necessary but not sufficient to persuade readers. Humans are wired to tell, share, and remember stories.

The statistical finding is what is known in journalism as the “nut graf” of the story. You still need a human voice to be the spine. This is where the old-school reporting skills of knocking on doors and interviewing tons of people are still incredibly valuable. It is where word choice and talented editors make all the difference in crafting a compelling article.

7) Expertise matters

Journalists are generalists. Even specialists like me who have covered a single topic—technology—for decades have to dive into new topics daily.

That’s why I believe in seeking expert reviews of statistical work. Over the years, I developed a process similar to academic peer review, in which I shared my methodologies with statisticians and domain experts in whatever field I was writing about.

I never share the narrative article before publication—which would be a fireable offense in most newsrooms. But sharing the statistical methodology allows me to bulletproof my work and find mistakes.

No one is more incentivized to find mistakes than the subject of an investigation, so at The Markup we shared data, code, and analyses with the subjects before publication in a process I call “adversarial review.” This gives them an opportunity to engage with the work meaningfully and provide a thoughtful response.

8) Objectivity is dead. Long live limitations

One of the best parts about using the scientific method as a guide is that it moves us beyond the endless debates about whether journalism is “fair” or “objective.”

Rather than focus on fairness, it’s better to focus on what you know and what you don’t know. When he reported on the hidden bias in mortgage approval algorithms, Markup reporter Emmanuel Martinez could not obtain applicants’ credit scores because the government doesn’t release them. So he noted their absence in the limitations section of his methodology.

His analysis was still robust enough to be cited by three federal agencies when announcing a new plan to combat mortgage discrimination.

9) Show your work

Journalists have a trust problem. Now that everyone in the world can publish, journalists must work harder to prove that their accounting of the truth is the most credible version.

I have found that showing my work (sharing entire datasets, the code used to analyze the data, and an extensive methodology) builds trust with readers. As an added bonus, the methodologies often get more website traffic over time than the narrative articles.

10) Never give up

Journalists are outnumbered. There are six public relations professionals for every journalist in the United States, according to the Bureau of Labor Statistics.

That means we have to use every possible tool at our disposal to hold power to account. One way to do that is to build tools that allow our work to continue beyond the day the article was published.

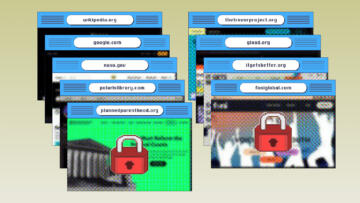

Consider Blacklight, the real-time forensic privacy scanner that Surya Mattu built at The Markup. It runs a series of privacy tests on any website.

Reporters can use Blacklight anytime there is a privacy-related news story. ProPublica, for instance, recently used Blacklight to reveal that online pharmacies selling abortion pills were sharing sensitive data with Google and other third parties.

I will continue to pursue these principles in my upcoming projects. Thank you for sharing the journey with me. It’s been an honor.

Best,

Julia Angwin

The Markup

Editor’s Note:

Dear readers,

I’ve been a fan of Julia’s work since 2013, when she joined ProPublica. In our time there together, I saw firsthand how much Julia valued the partnership between journalists and engineers and the incredible work that could emerge: everything from investigations into how Facebook allowed discriminatory advertising to a landmark project that revealed how criminal risk scores were biased against Black defendants.

I could not be more grateful to Julia for her vision to start The Markup and for the work she put into building a high-impact newsroom. As Hello World readers, you know this best. During her tenure, The Markup’s journalism spurred companies to stop selling precise location data, Congress to introduce bills to outlaw self-preferencing, regulators to combat digital redlining practices where algorithms decide who gets a mortgage, and so much more. We can’t wait to see Julia’s announcement of what’s next.

I’m also excited to share that this newsletter, Hello World, isn’t going anywhere. It’s a place where we get to show our work another way: by going deep into our original reporting. It’s also a place for us to ask important questions while highlighting the voices of people who think critically about technology and its impact on society. Looking forward, we want to keep developing Hello World by incorporating your thoughts and feedback.

Over the next two weeks, we’d love to hear from you. What issues of Hello World did you enjoy most, what’s stuck with you, or what would you like to see more of? What you share will help us decide where the newsletter will go next—whether that’s answering more of your questions, breaking down our investigations and how they impact you, or talking to experts. Share your thoughts by Saturday, Feb. 18, by replying to this email directly.

—Sisi Wei,

Editor-in-Chief,

The Markup