As users drifted through Facebook in the aftermath of the presidential election, they may have run across a satirical article about the Nashville bombing in December. Playing off conspiracies about COVID-19 death diagnoses, a viral photo jokingly suggested the bomber had “died from COVID-19 shortly after blowing himself up.”

At the beginning of January, a New York woman was shown the photo, shared by a friend, in her news feed. Facebook appended a note over the top: The information was false.

But four days earlier, as a woman in Texas looked at Facebook, she saw the same post—shared to her feed by a conservative media personality with about two million followers. That post only had a “related articles” note appended to the bottom that directed users to a fact-check article, making it much less obvious that the post was untrue.

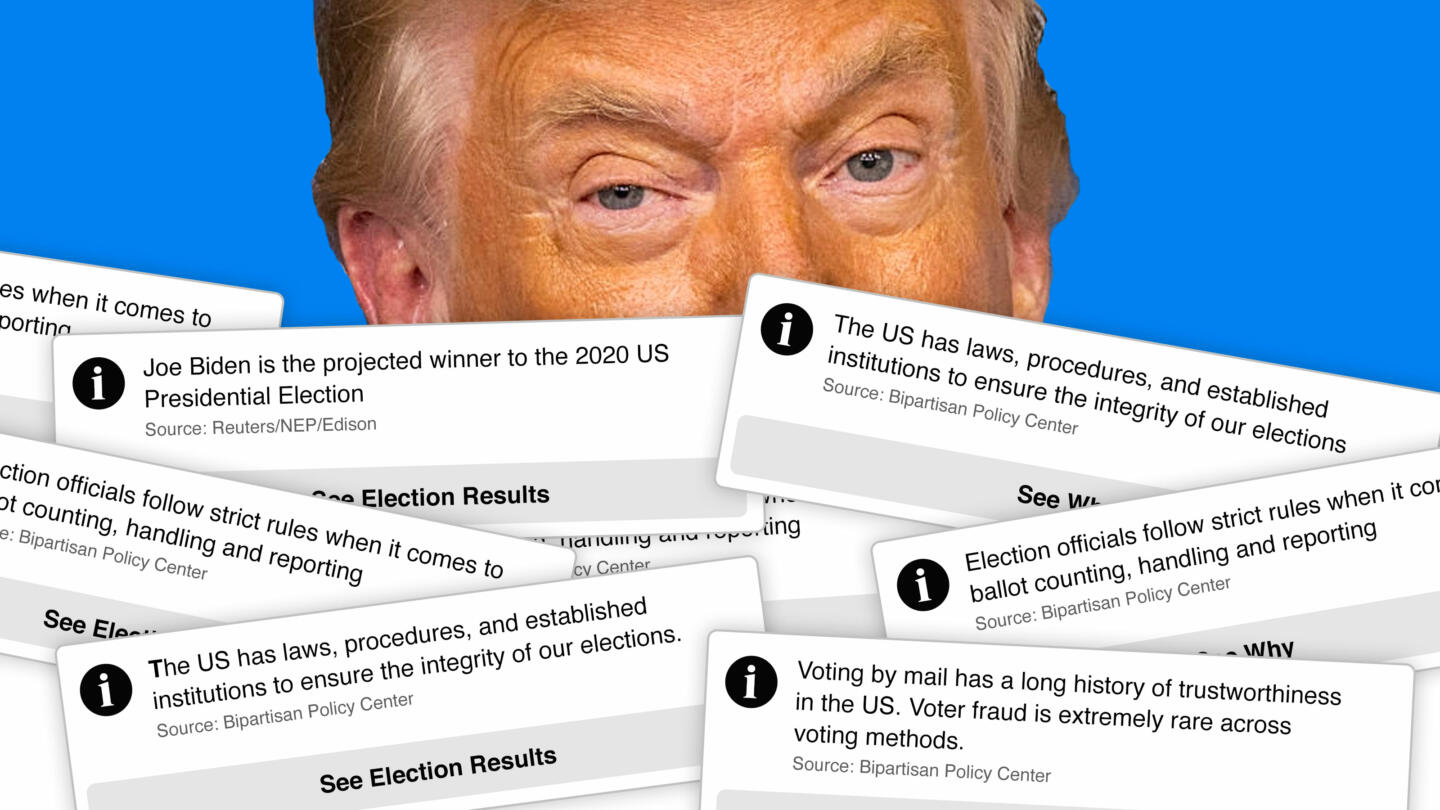

In August, as the election approached and misinformation about COVID-19 spread, Facebook announced it would give new fact-checking labels to posts, including more nuanced options than simply “false.” But data from The Markup’s Citizen Browser project, which tracks a nationwide panel of Facebook users’ feeds, shows how unevenly those labels were applied: Posts were rarely called “false,” even when they contained debunked conspiracy theories. And posts by Donald Trump were treated with the less direct flags, even when they contained lies.

The Markup shared the underlying data for this story with Facebook.

“We don’t comment on data that we can’t validate, but we are looking into the examples shared,” Facebook spokesperson Katie Derkits said in a statement.

Overall, we gathered Facebook feed data from more than 2,200 people and examined how often those users saw flagged posts on the platform in December and January. We found more than 330 users in the sample who saw posts that were flagged because they were false, devoid of context, or related to an especially controversial issue, like the presidential election. But Facebook and its partners used the “false” label sparingly—only 12 times.

Our panelists saw 682 flagged posts, with labels added either to the bottom or directly over the posts.


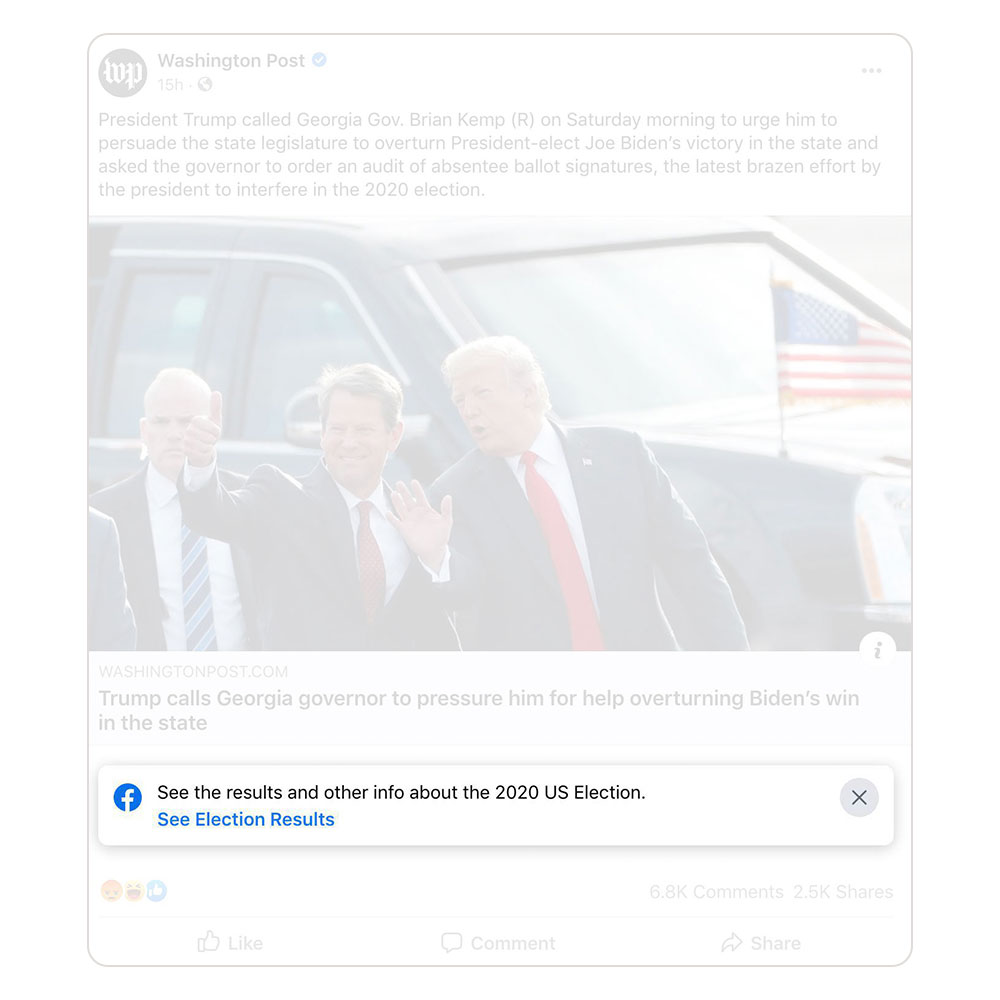

The most popular flag, directing users to election info, appeared 588 times and seemed to be automatically added to anything election-related. Because it was so ubiquitous, we excluded this flag from our statistical analysis.

An image of a Facebook post by The Washington Post sharing its story "Trump calls Georgia governor to pressure him for help overturning Biden's win in the state." Facebook flagged this post with a seemingly automated flag that reads "See the results and other info about the 2020 US Election."

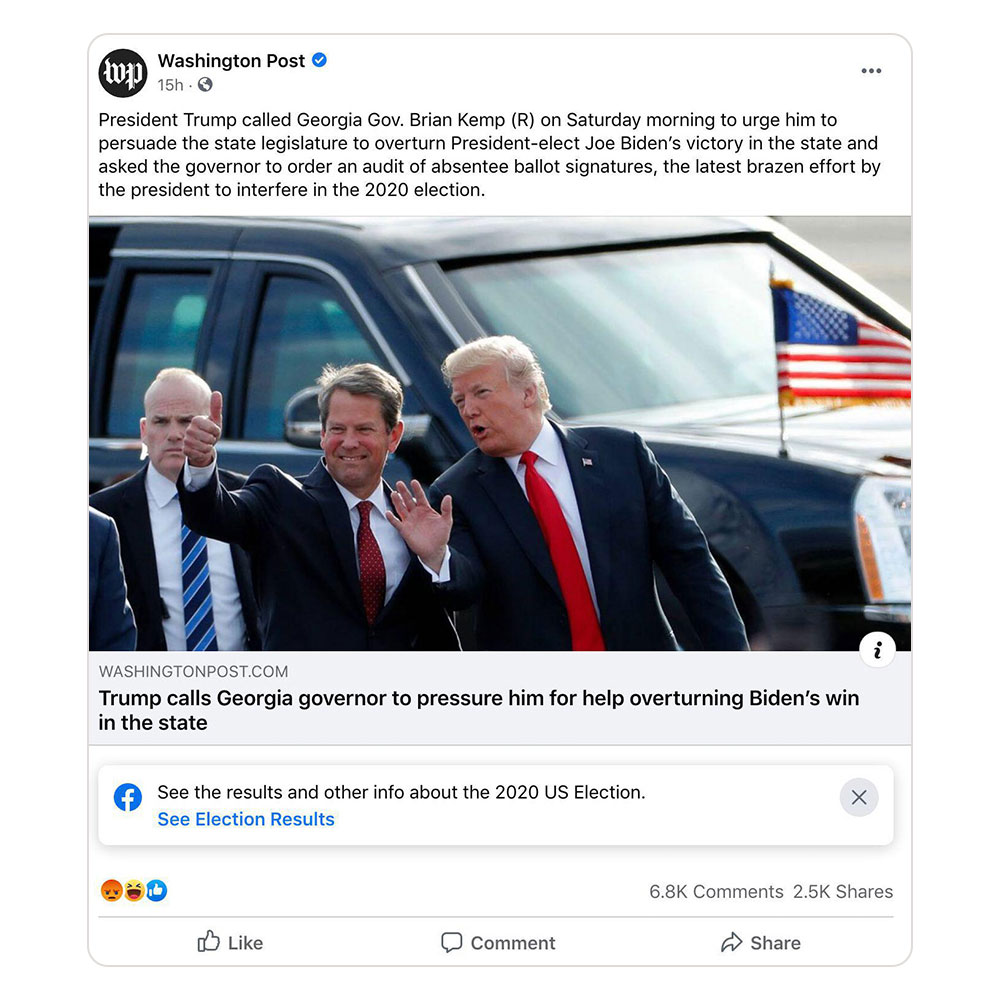

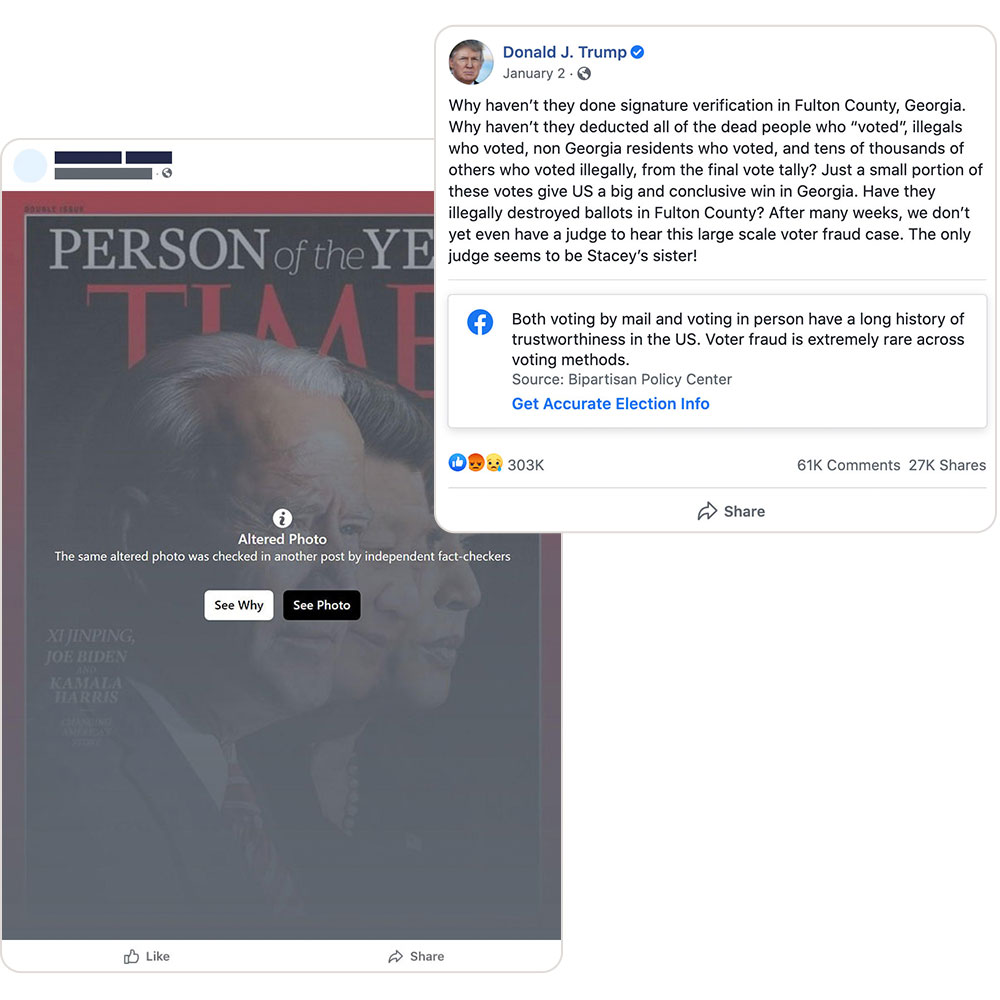

The top spreader of posts that were given flags? Trump. His posts, however, were never called “false” or “misleading,” even when they contained inflammatory lies. When Trump shared a mid-December post claiming it was “statistically impossible” for Joe Biden to have won, for example, Facebook simply noted the United States “has laws, procedures and established institutions to ensure the integrity of our elections.”

Joe Biden’s posts were given several flags as well, but those were limited to notes simply saying he had won the election. The flag was placed on even innocuous content, like an obituary for Chuck Yeager.

An image of two contrasting Facebook posts. In one, Trump shares a supposed report alleging election fraud that would swing the victory to him; Facebook flagged it with "The US has laws, procedures, and established institutions to ensure the integrity of our elections." In the other, Biden shares an obituary for Chuck Yeager, which was flagged "Joe Biden is the President of the United States. Kamala Harris is the Vice President."

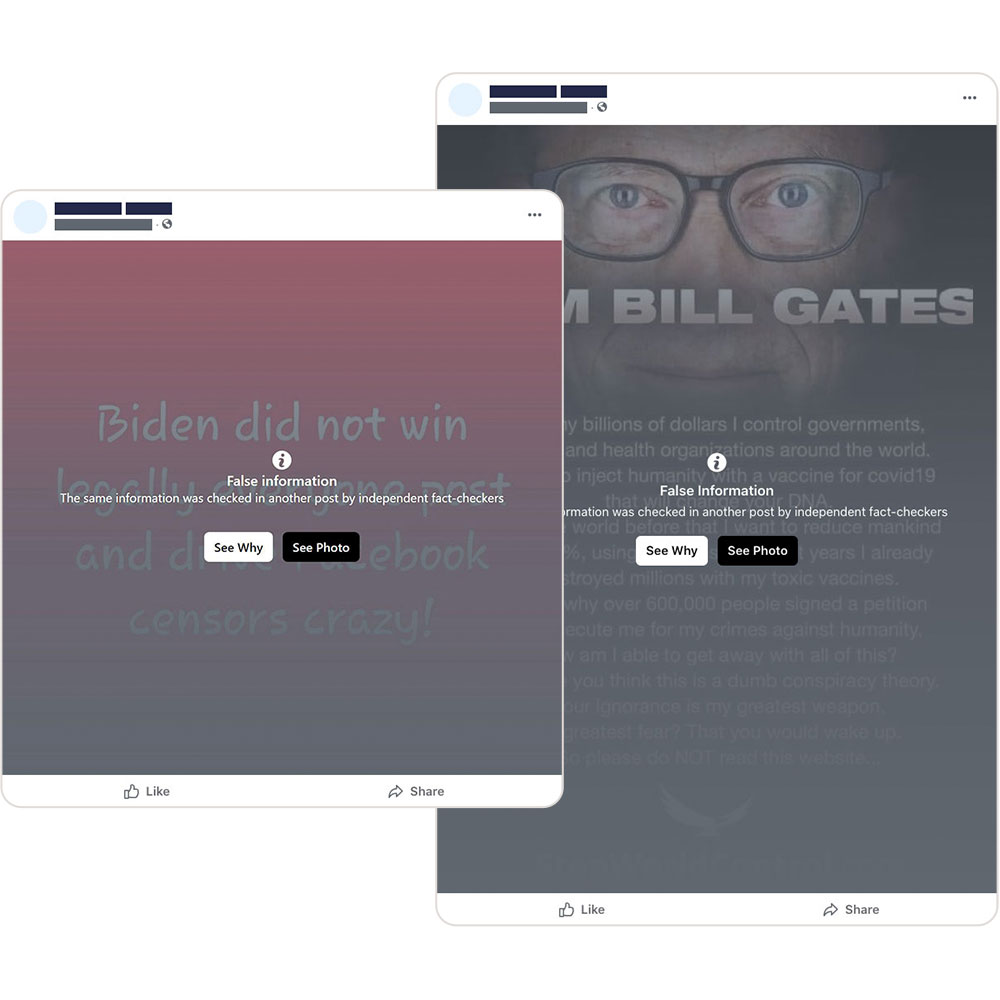

The bar for calling content “false” was high: Facebook applied that label only to posts like one linking Bill Gates to a world domination plot, or one that said “Biden did not win legally.”

In general, the company says, it reduces the distribution of posts that have been marked as false and warns users who attempt to share false information. But we were able to share the fact-checked post about Bill Gates to personal accounts without seeing any additional warning. After we asked Facebook about this, an additional warning was added.

An image of two contrasting Facebook posts that were both flagged by Facebook with the message "False Information: The same information was checked in another post by independent fact-checkers."

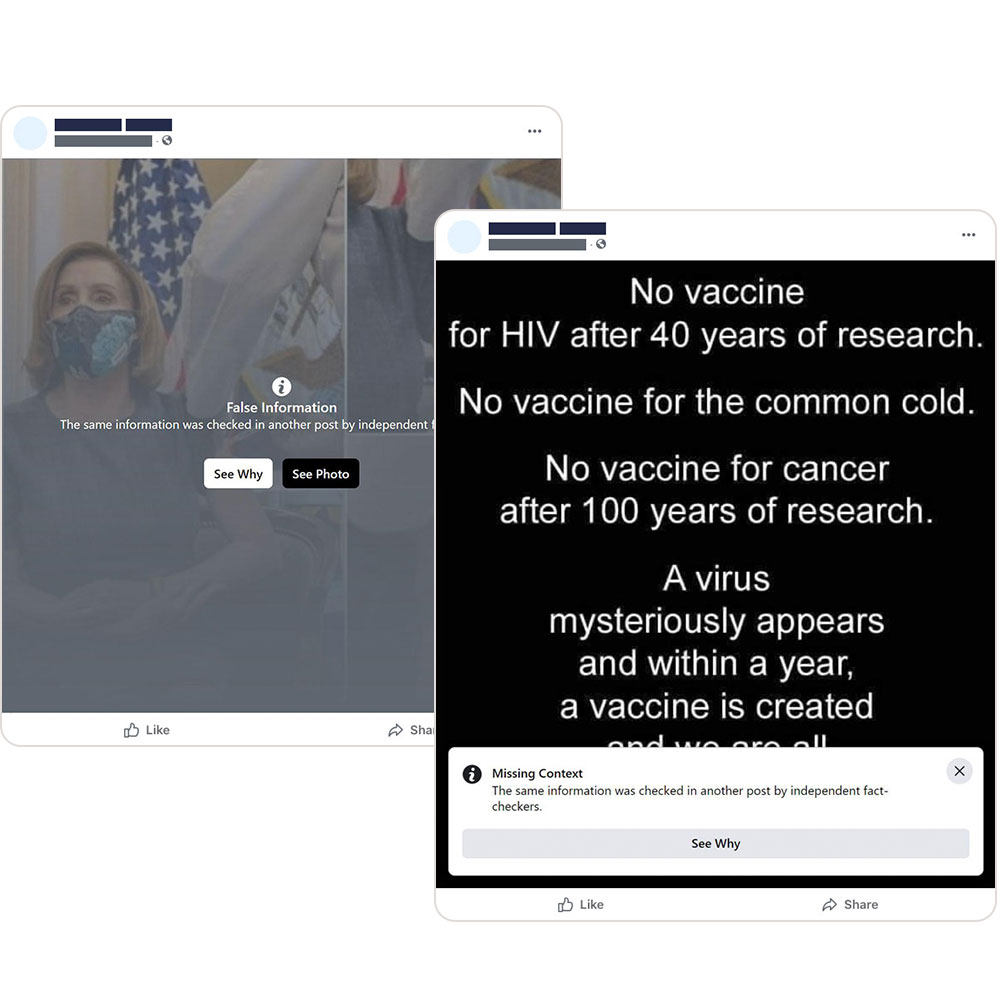

Facebook instead used flags for “missing context” relatively freely—about 38 times in our sample. But the line between false and missing context was fuzzy, with posts getting a “missing context” label even when they endorsed an underlying conspiracy theory.

For example, this video from conservative pundit Tomi Lahren, which claims there were “many election irregularities that just don’t add up and need to be questioned,” was given the “missing context” label, as were posts that pointed to political crowds to suggest the election was rigged.

An image of two contrasting Facebook posts that were both flagged by Facebook with the message "Missing context."

The use of the “missing context” label led to some odd distinctions about what is false. A post suggesting House Speaker Nancy Pelosi may have staged her COVID-19 vaccinations was rated as “false,” while a post suggesting it was impossible to create a safe COVID-19 vaccine in a year was merely rated as “missing context.”

An image of two contrasting Facebook posts that were both flagged by Facebook.

In total, we saw 18 different flags, from the labels for “false” and “missing context” to other warnings for graphic content and altered photos—many of which appeared on only one or two posts. At least one flag, an assurance that voting by mail is safe, was only seen by our panelists on a single Trump post.

An image of two contrasting Facebook posts that were given less common flags. One is a fake Time magazine Person of the Year cover showing Joe Biden, Xi Jinping and Kamala Harris supposedly winning the award, which Facebook flagged as an Altered Photo. The other is a post by Donald Trump suggesting there was widespread voter fraud, which Facebook labeled "Both voting by mail and voting in person have a long history of trustworthiness in the US. Voter fraud is extremely rare across voting methods."

Facebook has spent years grappling with how to fact-check content—especially when the posts come from politicians. In a 2019 blog post, the company argued that it wasn’t “an appropriate role for us to referee political debates and prevent a politician’s speech from reaching its audience.”

“My guess would be that Facebook doesn’t fact-check Donald Trump not because of a concern for free speech or democracy, but because of a concern for their bottom line,” said Ethan Porter, an assistant professor at George Washington University who has researched false information on the platform.

After years of controversy, Facebook indefinitely suspended Trump, who had 150 million followers, after the riot at the United States Capitol on Jan. 6, saying the risks of keeping him on the service were now too great. But that could change. The Facebook Oversight Board, a body created by Facebook to review and possibly overturn its decisions, will determine whether to reinstate the former president’s account.

The company says it removes at least some outright false posts, and our analysis can’t account for how many false posts our panel would have seen without that action. Our sample is also small relative to Facebook’s universe of billions of users who may have seen additional flags on their feeds.

Within our panel, however, a clear trend emerged: Fueled by Trump’s false claims, our data showed, more flagged content overall went to Trump voters. Among our panelists who voted for Trump, 9.3 percent had flagged posts appear on their feeds, as opposed to only 2.4 percent of Biden voters. Older Americans in our sample were also more likely to encounter the flagged posts.
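For readers curious how a comparison like this can be computed, here is a minimal sketch. It is not The Markup's actual analysis code: the record layout, the `share_with_flagged` helper, and the six toy panelists are all hypothetical, invented only to show the arithmetic of "share of each group that saw at least one flagged post."

```python
from collections import defaultdict

# Hypothetical toy records, NOT real Citizen Browser data:
# (panelist_id, self-reported 2020 vote, saw at least one flagged post)
panel = [
    ("p1", "Trump", True),
    ("p2", "Trump", False),
    ("p3", "Biden", False),
    ("p4", "Biden", False),
    ("p5", "Biden", True),
    ("p6", "Trump", True),
]


def share_with_flagged(records):
    """Return, per voter group, the fraction of panelists who saw a flagged post."""
    totals = defaultdict(int)   # panelists per group
    flagged = defaultdict(int)  # panelists per group who saw a flagged post
    for _panelist_id, group, saw_flagged in records:
        totals[group] += 1
        if saw_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


shares = share_with_flagged(panel)
for group, share in sorted(shares.items()):
    print(f"{group}: {share:.1%} of panelists saw a flagged post")
```

The sketch counts each panelist once, however many flagged posts appeared in that person's feed, so a handful of heavy users cannot skew a group's share.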

Porter said Facebook has been too passive in how it handles lies—a flaw that undermines the entire platform.

“They’ve chosen not to aggressively fact-check,” Porter said. “As a result, more people believe false things than would otherwise, full stop.”